A Causal Approach to the Early, Heterogeneous Stopping of Clinical Trials

Randomized experiments are the gold standard for determining causal effects in a variety of scenarios, from the evaluation of medical treatments via clinical trials to the assessment of online product offerings through A/B testing. In some cases, randomized experiments must end prematurely due to ethical or financial reasons, e.g., if the treatment yields an unintended harmful effect. For example, if early clinical trial data suggests that a drug increases the risk of mortality, then the trial is immediately stopped to protect participants. Our research [1] asks the following question: Can we detect harm if it only affects a minority group of participants?

While many existing methods help determine whether an experiment should end ahead of schedule, these techniques are typically applied to the collected data in aggregate (i.e., homogeneously) and do not account for the heterogeneity of treatment effects for diverse patient or user populations. To address this shortcoming, we have used causal machine learning to develop Causal Latent Analysis for Stopping Heterogeneously (CLASH): a broadly applicable method for heterogeneous early stopping that outperforms baselines on both real and simulated data.

The Harm of Homogeneous Stopping

A variety of existing statistical methods determine when to stop an experiment due to harm, ranging from frequentist methods like the O’Brien-Fleming approach [3] to Bayesian methods like the sequential probability ratio test (SPRT) and mixture SPRT [2, 5]. Investigators in both clinical trials and A/B tests often use a subset of these methods, collectively called “stopping tests,” to identify harmful effects from early data while limiting the probability of mistakenly stopping early when the treatment is not actually harmful. However, the homogeneous application of stopping tests is problematic when the treatment in question has heterogeneous effects, e.g., a drug that is safe for younger patients but hurts those who are over the age of 65. In a hypothetical situation where younger and older patients comprise equally sized groups who experience equal but opposite treatment effects, the true average treatment effect (ATE) is zero; therefore, a traditional homogeneous stopping test with null hypothesis ATE \(\le 0\) and significance level \(\alpha\) will continue to completion with probability at least \(1-\alpha\). This failure to stop means that the trial runs its full course even though the treatment harms half of its participants.

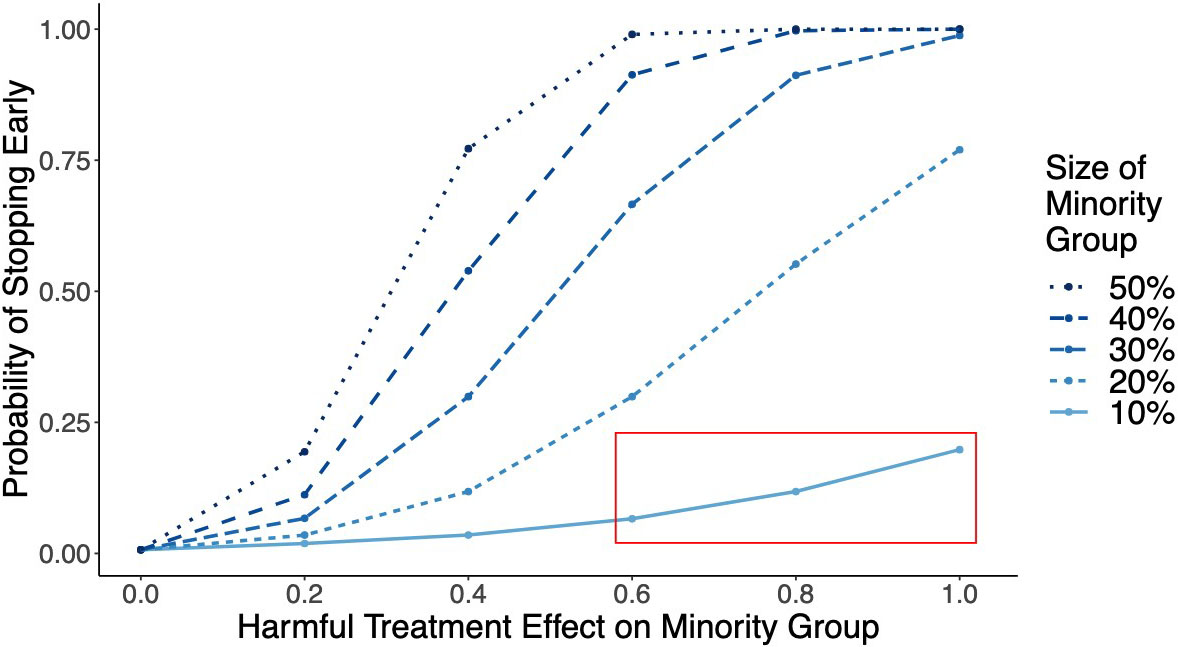

Consider a clinical trial for a drug like warfarin: an anticoagulant that has no adverse effects on the majority of the population, but carries an increased risk of adverse effects in elderly patients [4]. We use a simple simulation to show that if elderly patients comprise less than 20 percent of the trial population, then the homogeneous application of a stopping test would rarely stop the trial for harm (see Figure 1). And if elderly patients comprise only 10 percent of trial participants, then the probability of ending the trial early via homogeneous stopping is less than 20 percent, even if the treatment has very large adverse effects. In most cases, the trial would continue to recruit elderly individuals, many of whom would be harmed by their participation, until its scheduled end. This outcome violates the bioethical principle of nonmaleficence (i.e., do no harm) and is clearly undesirable.
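The dilution effect described above can be illustrated with a small Monte Carlo sketch. This is not the simulation behind Figure 1: it assumes a single final look with a pooled one-sided z-test (rather than a sequential test such as O’Brien-Fleming), unit-variance Gaussian outcomes, and hypothetical names and parameter values (`homogeneous_stop_rate`, `minority_share`, `harm`, 200 participants per trial).

```python
import math
import random


def mean_and_variance(values):
    """Sample mean and unbiased sample variance of a list of floats."""
    m = math.fsum(values) / len(values)
    v = math.fsum((x - m) ** 2 for x in values) / (len(values) - 1)
    return m, v


def homogeneous_stop_rate(minority_share, harm, n=200, n_trials=400,
                          z_crit=1.645, seed=0):
    """Fraction of simulated trials in which a pooled one-sided z-test flags harm."""
    rng = random.Random(seed)
    stops = 0
    for _ in range(n_trials):
        treated, control = [], []
        for _ in range(n):
            is_treated = rng.random() < 0.5
            is_minority = rng.random() < minority_share
            # Outcome is a harm score with unit-variance noise; only treated
            # minority members receive the extra `harm` (an assumption).
            y = rng.gauss(0.0, 1.0)
            if is_treated and is_minority:
                y += harm
            (treated if is_treated else control).append(y)
        mean_t, var_t = mean_and_variance(treated)
        mean_c, var_c = mean_and_variance(control)
        z = (mean_t - mean_c) / math.sqrt(var_t / len(treated) + var_c / len(control))
        if z > z_crit:  # reject "ATE <= 0", i.e., stop for harm
            stops += 1
    return stops / n_trials


for share in (0.1, 0.2, 0.5):
    print(share, homogeneous_stop_rate(share, harm=1.0))
```

Under these assumptions, the pooled test sees only the diluted average effect (roughly `minority_share * harm`), so the stop rate should be far lower when the harmed group is 10 percent of the trial than when it is 50 percent.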

CLASH Method for Heterogeneous Stopping

While a growing body of literature explores the inference of heterogeneous effects [6], few researchers have attempted to adapt common stopping tests to respond to heterogeneity. We developed CLASH for this purpose and ensured that it does not require prior knowledge of the source of heterogeneity, makes no parametric assumptions, and works with any data distribution.

At each interim checkpoint of the trial, CLASH operates in two stages. Stage 1 involves the use of causal machine learning to estimate the probability that each participant belongs to a group that is harmed by the treatment. This “harmed group” is not directly observable, but it can be defined based on observed patient characteristics such as race, gender, and age. For each participant \(i\), we use patient characteristics \(x_i\) to estimate the heterogeneous treatment effect \(\tau(x_i)\). Let \(\hat{\tau}(x_i)\) denote the estimated effect and \(\hat{\sigma}(x_i)\) denote the standard error of this estimate. The CLASH weight \(w_i\) then captures the probability that participant \(i\) is harmed:

\[w_i=1-\Phi\bigg(\frac{\delta-\hat{\tau}(x_i)}{\hat{\sigma}(x_i)}\bigg).\]

Here, \(\delta\) represents the minimum effect size of interest—i.e., the minimum amount of non-negligible harm—which is determined based on the specific details of the experiment.
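As a minimal sketch of the weight formula, the snippet below evaluates \(w_i\) from already-computed effect estimates and standard errors. The function name `clash_weights` and the example numbers are hypothetical, and the sketch assumes that \(\hat{\tau}(x_i)\) and \(\hat{\sigma}(x_i)\) come from some separate causal machine learning estimator that is not shown here.

```python
from statistics import NormalDist


def clash_weights(tau_hat, sigma_hat, delta):
    """Return w_i = 1 - Phi((delta - tau_hat_i) / sigma_hat_i) for each participant."""
    phi = NormalDist().cdf  # standard normal CDF
    return [1.0 - phi((delta - t) / s) for t, s in zip(tau_hat, sigma_hat)]


# Hypothetical estimates for three participants (not from the study).
tau_hat = [-0.5, 0.1, 0.8]
sigma_hat = [0.2, 0.2, 0.2]
weights = clash_weights(tau_hat, sigma_hat, delta=0.1)
print([round(w, 3) for w in weights])
```

A participant whose estimated effect equals \(\delta\) receives a weight of 0.5, while estimates far below or far above \(\delta\) (relative to the standard error) push the weight toward 0 or 1.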

In stage 2, CLASH uses these inferred probabilities to reweight the test statistic of any chosen stopping test. This adapts the existing stopping test to better detect heterogeneous treatment harms, since participants who are likely to belong to a “harmed group” are weighted more heavily.
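To make the reweighting idea concrete, here is a sketch of one possible weighted statistic: a weighted difference-in-means z-statistic with a plug-in variance. This particular form is an assumption chosen for illustration and may differ from the statistic used in [1]; the function name `weighted_z`, the toy data, and the weights are all hypothetical.

```python
import math


def weighted_z(outcomes, treated, weights):
    """Weighted difference-in-means z-statistic (illustrative form only)."""
    def arm_stats(flag):
        pairs = [(y, w) for y, t, w in zip(outcomes, treated, weights) if t == flag]
        total_w = math.fsum(w for _, w in pairs)
        mean = math.fsum(w * y for y, w in pairs) / total_w
        # Plug-in variance of the weighted mean.
        var = math.fsum((w * (y - mean)) ** 2 for y, w in pairs) / total_w ** 2
        return mean, var

    mean_t, var_t = arm_stats(True)
    mean_c, var_c = arm_stats(False)
    return (mean_t - mean_c) / math.sqrt(var_t + var_c)


# Toy data: 8 majority and 2 minority participants per arm; only the treated
# minority shows harm (higher outcome = more harm).
majority = [-0.3, -0.2, -0.1, 0.0, 0.0, 0.1, 0.2, 0.3]
outcomes = majority + [0.9, 1.1] + majority + [-0.1, 0.1]
treated = [True] * 10 + [False] * 10
uniform = [1.0] * 20
clash_like = ([0.05] * 8 + [0.95] * 2) * 2  # hypothetical stage 1 weights

print(weighted_z(outcomes, treated, uniform))
print(weighted_z(outcomes, treated, clash_like))
```

With uniform weights, the statistic reduces to an ordinary pooled z-statistic, and the minority's harm is averaged away. Weights that concentrate on the likely harmed participants yield a much larger statistic on the same data, which is the mechanism that lets a standard stopping rule react to subgroup harm.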

CLASH Outperforms Baselines in Early Stopping

CLASH has many positive impacts on experimentation. First, its flexibility allows practitioners to utilize their stopping test of choice. It is easy to use and requires minimal extra code as compared to existing early stopping implementations.

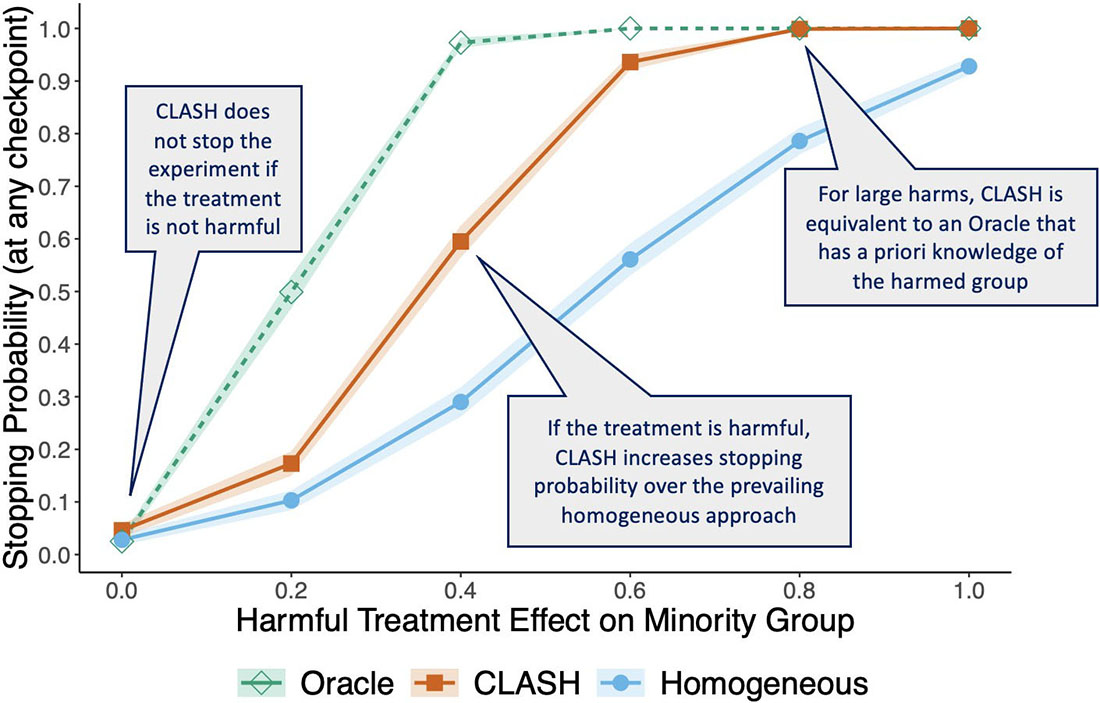

Second, we theoretically establish that for sufficiently large samples, CLASH stops trials faster than the traditional homogeneous approach if a treatment harms only a subset of trial participants [1]. Figure 2 illustrates CLASH’s performance benefit over homogeneous stopping in simulation experiments. If the minority group is harmed, CLASH (indicated by the orange line) significantly increases the stopping probability over the homogeneous approach (indicated by the blue line). And for large effect sizes, CLASH is as effective as an oracle that has prior knowledge of the harmed subgroup (indicated by the green line).

Third, CLASH does not stop trials unless a subset of patients is harmed — meaning that it results in faster early stopping without stopping unnecessarily. If the treatment has no negative effects, CLASH does not prematurely end the trial more frequently than either baseline. Its improvements over baseline methods are robust across parameters, including the type of stopping test, majority and minority group sizes, treatment effect size, and number of covariates.

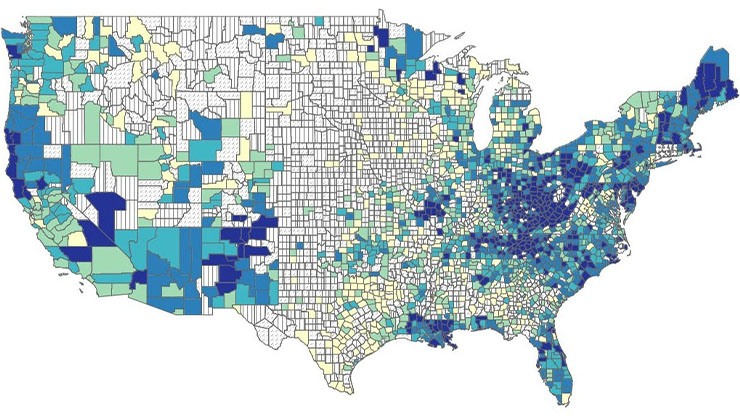

To illustrate CLASH’s applicability outside of clinical-trial-based simulations, we experimented with real-world data from a technology company that ran a large-scale A/B test with 500,000 participants to evaluate the effect of a software update on user experience. On these data, CLASH successfully detected user harms in certain geographic regions (where the software update would have a significant negative impact on relevant metrics) and appropriately stopped early. Overall, our method leads to effective heterogeneous early stopping across a range of randomized experiments, outperforming baselines and nearing oracle-level performance for large sample sizes.

To conclude, we emphasize that early stopping is a nuanced decision. For example, if a treatment harms only a subset of participants, it may be desirable to stop the experiment on the affected group but continue for the rest of the population. In other situations, it may make more sense to end the trial altogether. Such decisions are influenced by the treatment's potential benefits, the nature of harm, and other ethical considerations. CLASH, while powerful, is not intended to replace discussions about trial ethics; instead, it is meant to provide a useful aid for researchers who must make difficult decisions about early stopping. We encourage practitioners to engage in such discussions, apply CLASH when appropriate, and remain aware of bias mitigation for underrepresented groups in experiments.

Allison Koenecke delivered a minisymposium presentation on this research at the 2024 SIAM Conference on Mathematics of Data Science, which took place in Atlanta, Ga., last October.

References

[1] Adam, H., Yin, F., Hu, H., Tenenholtz, N., Crawford, N., Mackey, L., & Koenecke, A. (2023). Should I stop or should I go: Early stopping with heterogeneous populations. In NIPS '23: Proceedings of the 37th international conference on neural information processing systems (pp. 15799-15832). New Orleans, LA: Curran Associates, Inc.

[2] Johari, R., Koomen, P., Pekelis, L., & Walsh, D. (2017). Peeking at A/B tests: Why it matters, and what to do about it. In KDD '17: Proceedings of the 23rd ACM SIGKDD international conference on knowledge discovery and data mining (pp. 1517-1525). Halifax, Canada: Association for Computing Machinery.

[3] O'Brien, P., & Fleming, T.R. (1979). A multiple testing procedure for clinical trials. Biometrics, 35(3), 549-556.

[4] Shendre, A., Parmar, G.M., Dillon, C., Beasley, T.M., & Limdi, N.A. (2018). Influence of age on warfarin dose, anticoagulation control, and risk of hemorrhage. Pharmacotherapy, 38(6), 588-596.

[5] Wald, A. (1945). Sequential tests of statistical hypotheses. Ann. Math. Statist., 16(2), 117-186.

[6] Yao, L., Chu, Z., Li, S., Li, S., Gao, J., & Zhang, A. (2021). A survey on causal inference. ACM Transact. Knowl. Discov. Data, 15(5), 1-46.

About the Authors

Hammaad Adam

Ph.D. student, Massachusetts Institute of Technology

Hammaad Adam is a final-year Ph.D. student at the Massachusetts Institute of Technology’s Institute for Data, Systems, and Society. His research focuses on the development of data-driven methods to improve health equity, particularly in the context of clinical trials and randomized experiments.

Allison Koenecke

Assistant professor, Cornell University

Allison Koenecke is an assistant professor of information science at Cornell University. Her research utilizes computational methods like machine learning and causal inference to study societal inequities in domains such as public service, online platforms, and public health.
