An In-depth Guide to the Methods of Computational Imaging

The following is a brief reflection from the author of Foundations of Computational Imaging: A Model-based Approach, which was published by SIAM in 2022. This innovative book defines a common foundation for the mathematical and statistical methods that are associated with computational imaging and addresses a variety of research techniques with applications in multiple disciplines, including applied mathematics, physics, chemistry, optics, and signal processing.

Over the past 20 years, computational imaging has emerged as a multidisciplinary field that focuses on the creation of useful images from raw sensor data. Foundations of Computational Imaging presents an essential collection of theoretical materials that can serve as a common language for researchers and practitioners in this new field.

It is commonly believed that images come directly from sensors. For example, people often think that the output of the complementary metal oxide semiconductor (CMOS) sensor in their cell phones is a ready-to-use photograph; this is not true. Instead, the CMOS chip only produces raw data. This data is then processed via a series of complex mathematical algorithms that are implemented on high-performance computational hardware and produce the image we see on the screen. Such computational imaging procedures are required for any imaging system, ranging from cell phone cameras to tomographic imaging systems for medical diagnoses and even the Event Horizon Telescope that generated the first image of the supermassive black hole at the center of the galaxy Messier 87 (which is featured on the front cover of the book).

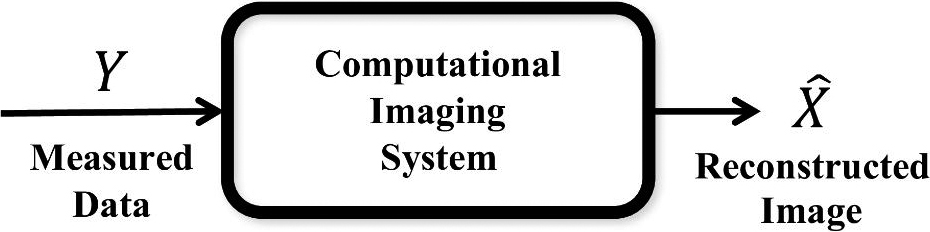

Any modern imaging system must solve an inverse problem that aims to estimate the desired true image from the available sensor data (see Figure 1). Even if a sensor is able to collect measurements for every pixel in an image, the image must still be denoised, deblurred, and corrected for distortions in geometry and color. Frequently, the image must first be reconstructed from indirect, sparse, and nonlinearly distorted sensor measurements, which makes the formation of the final image computationally challenging and ill-posed. In order to design the highest-performing imaging system possible, we must co-design the sensor and computational imaging algorithms/hardware to produce \(\hat{X}\): the best estimate of the desired image.

Foundations of Computational Imaging collects a set of algorithms that are commonly used in the implementation of computational imaging systems and arranges them as a single readable reference. The imaging approach is exemplified by the following maximum a posteriori (MAP) estimate:

\[\hat{X}=\arg \min_{x}\{-\log p (Y|x)-\log p(x)\}. \tag1\]

The term \(p(Y|x)\) is called the forward model because it describes the statistical dependency of the measurements \(Y\) with respect to the unknown image \(x\). The term \(p(x)\) is the prior model, which describes the unknown image that will be reconstructed. Minimizing the sum of these two terms yields a reconstruction that fits the measurements and has a high probability of being the solution. The MAP estimate requires that the solution be both “regular” and consistent with the observed data. In practice, we typically compute the estimate with an iterative algorithm that balances the prior cost function with the forward model cost function. This algorithmic structure—known as model-based iterative reconstruction (MBIR)—is a central theme of computational imaging and appears extensively throughout the text.
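
As a minimal sketch of equation (1), assume a hypothetical one-dimensional denoising problem that is not taken from the book: the forward model is \(Y = x + W\) with white Gaussian noise of standard deviation `sigma_w`, and the prior is a Gaussian model that penalizes differences between neighboring samples with scale `sigma_x`. Under these assumptions both terms are quadratic, so plain gradient descent is enough to find the minimizer. All function names and numeric values are illustrative.

```python
def map_cost(x, y, sigma_w, sigma_x):
    """-log p(y|x) - log p(x), up to additive constants."""
    forward = sum((yi - xi) ** 2 for xi, yi in zip(x, y)) / (2 * sigma_w ** 2)
    prior = sum((x[i + 1] - x[i]) ** 2 for i in range(len(x) - 1)) / (2 * sigma_x ** 2)
    return forward + prior


def map_gradient(x, y, sigma_w, sigma_x):
    """Gradient of map_cost with respect to x."""
    grad = [(xi - yi) / sigma_w ** 2 for xi, yi in zip(x, y)]
    for i in range(len(x) - 1):
        d = (x[i + 1] - x[i]) / sigma_x ** 2
        grad[i] -= d
        grad[i + 1] += d
    return grad


def map_estimate(y, sigma_w=1.0, sigma_x=0.5, iterations=500):
    """Gradient descent on the MAP cost, started from the measurements."""
    # 1/sigma_w^2 + 4/sigma_x^2 bounds the largest Hessian eigenvalue,
    # so its reciprocal is a safe fixed step size.
    step = 1.0 / (1.0 / sigma_w ** 2 + 4.0 / sigma_x ** 2)
    x = list(y)
    for _ in range(iterations):
        grad = map_gradient(x, y, sigma_w, sigma_x)
        x = [xi - step * gi for xi, gi in zip(x, grad)]
    return x


y = [0.2, -0.3, 0.1, 5.0, 4.7, 5.2, 4.9]  # made-up noisy measurements
x_hat = map_estimate(y)
print("cost at y:", map_cost(y, y, 1.0, 0.5))
print("cost at x_hat:", map_cost(x_hat, y, 1.0, 0.5))
```

In this sketch the prior pulls neighboring samples toward each other while the forward model keeps the estimate near the data, so `x_hat` trades a small data misfit for a lower overall cost.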

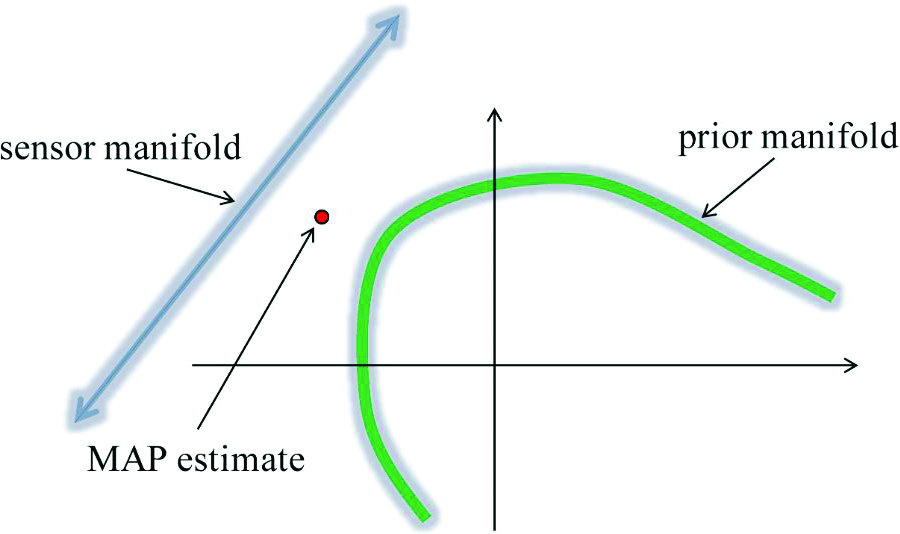

Figure 2 illustrates the goal of Bayesian reconstruction algorithms like MBIR. If the measurements are sparse, then the set of reconstructions that fit the observed data intuitively forms a “thin manifold.” However, many of these solutions may be completely unrealistic for the application in question. The prior manifold characterizes the set of images that are likely to occur, and the MAP estimate finds a reconstruction that is close to both manifolds. Because the prior manifold is essentially a probability density on all naturally occurring images, it is often quite difficult to model. Consequently, the statistical modeling of images and the efficient computation of resulting MBIR reconstructions are major themes of Foundations of Computational Imaging.

Chapters 2, 3, and 6 introduce the basic theoretical machinery for image modeling, including autoregressive and Markov random field (MRF) models in both one and two dimensions. The text also generalizes the multidimensional MRF model to the non-Gaussian case to better represent natural images.

Chapters 5 and 7 then explain the basic approaches to high-dimensional quadratic and non-quadratic optimization for MBIR reconstruction. These sections present the strengths and weaknesses of optimization approaches such as gradient descent, coordinate descent, and line search, and outline strategies to achieve the best reconstruction quality with limited computation.
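
To make the coordinate descent idea concrete, here is a small sketch for a quadratic cost. It assumes a hypothetical one-dimensional problem with cost \(\frac{1}{2\sigma_w^2}\|y-x\|^2 + \frac{1}{2\sigma_x^2}\sum_i (x_{i+1}-x_i)^2\); neither the problem nor the function names come from the book. Each update minimizes the cost exactly with respect to one sample while the others are held fixed, which has a closed form in this quadratic case.

```python
def quadratic_cost(x, y, sigma_w, sigma_x):
    """Data term plus neighbor-difference penalty (both quadratic)."""
    data = sum((yi - xi) ** 2 for xi, yi in zip(x, y)) / (2 * sigma_w ** 2)
    prior = sum((x[i + 1] - x[i]) ** 2 for i in range(len(x) - 1)) / (2 * sigma_x ** 2)
    return data + prior


def coordinate_descent_sweep(x, y, sigma_w, sigma_x):
    """Update every sample once, in place, each by exact 1-D minimization."""
    a = 1.0 / sigma_w ** 2
    b = 1.0 / sigma_x ** 2
    n = len(x)
    for i in range(n):
        neighbors = [x[j] for j in (i - 1, i + 1) if 0 <= j < n]
        # Setting the derivative with respect to x[i] to zero gives a
        # weighted average of the measurement and the current neighbors.
        x[i] = (a * y[i] + b * sum(neighbors)) / (a + b * len(neighbors))
    return x


y = [1.0, 0.0, 2.0, 8.0, 9.0, 7.5]  # made-up measurements
x = list(y)
costs = [quadratic_cost(x, y, 1.0, 0.5)]
for _ in range(20):
    coordinate_descent_sweep(x, y, 1.0, 0.5)
    costs.append(quadratic_cost(x, y, 1.0, 0.5))
print(costs[0], costs[-1])
```

Because every single-sample update is an exact minimization, the cost cannot increase from one sweep to the next, and no step size needs to be tuned.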

Chapter 8 explores majorization and surrogate functions. We can consider these approaches—which have become mainstays of non-quadratic convex optimization—as a generalization of Newton’s method and interpret them as a form of iterative quadratic reweighting. In practice, surrogate functions can dramatically reduce computation without adversely affecting image quality.
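
A short sketch may help illustrate the reweighting interpretation. It assumes a hypothetical non-quadratic potential \(\rho(\Delta)=\sqrt{\Delta^2+\epsilon^2}\) on neighboring differences, chosen here only for illustration. Because \(\rho\) is concave in \(\Delta^2\), the quadratic \(\Delta^2/(2\rho(\Delta'))\) plus a constant lies above \(\rho\) and touches it at the current difference \(\Delta'\), so it can serve as a surrogate. Minimizing that surrogate one sample at a time gives a weighted average whose weights are recomputed from the current estimate. The function names and parameter values are assumptions.

```python
import math


def potential(delta, eps):
    """Smooth, edge-preserving potential (illustrative choice)."""
    return math.sqrt(delta ** 2 + eps ** 2)


def true_cost(x, y, lam, eps):
    """Quadratic data term plus the non-quadratic neighbor penalty."""
    data = 0.5 * sum((yi - xi) ** 2 for xi, yi in zip(x, y))
    prior = lam * sum(potential(x[i + 1] - x[i], eps) for i in range(len(x) - 1))
    return data + prior


def surrogate_sweep(x, y, lam, eps):
    """One in-place pass that minimizes a quadratic surrogate per sample."""
    n = len(x)
    for i in range(n):
        numerator, denominator = y[i], 1.0
        for j in (i - 1, i + 1):
            if 0 <= j < n:
                # Surrogate weight, evaluated at the current difference:
                # large across flat regions, small across strong edges.
                weight = lam / potential(x[i] - x[j], eps)
                numerator += weight * x[j]
                denominator += weight
        x[i] = numerator / denominator
    return x


y = [0.3, -0.2, 0.1, -0.1, 10.2, 9.8, 10.1, 9.9]  # made-up noisy step
x = list(y)
for _ in range(100):
    surrogate_sweep(x, y, lam=1.0, eps=0.1)
print(true_cost(y, y, 1.0, 0.1), true_cost(x, y, 1.0, 0.1))
```

Each update only requires the solution of a one-dimensional quadratic problem, yet the majorization property ensures that the original non-quadratic cost does not increase.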

Chapter 9 investigates the machinery of constrained optimization, such as the augmented Lagrangian and the alternating direction method of multipliers (ADMM), which are essential tools for the efficient solution of large, complex image reconstruction problems. We can use ADMM to break problems into smaller, more modular pieces, which is an important step for practical implementation. Chapter 10 builds upon this foundation by introducing plug-and-play and advanced prior modeling, which provide machinery for the integration of state-of-the-art machine learning algorithms into MBIR reconstruction.
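
The modular structure of ADMM can be sketched on a deliberately tiny problem. The example below assumes a hypothetical cost \(\frac{1}{2}\|y-x\|^2+\lambda\|v\|_1\) with the constraint \(x=v\); the splitting, the penalty parameter `rho`, and all function names are illustrative choices rather than the book's formulation. The forward-model step and the prior step are separate functions, and in a plug-and-play variant the prior step would be swapped for a denoiser.

```python
def soft_threshold(value, t):
    """Proximal map of t*|.|: shrink toward zero by t."""
    if value > t:
        return value - t
    if value < -t:
        return value + t
    return 0.0


def forward_step(y, v, u, rho):
    """argmin over x of 0.5*||y - x||^2 + (rho/2)*||x - v + u||^2."""
    return [(yi + rho * (vi - ui)) / (1.0 + rho) for yi, vi, ui in zip(y, v, u)]


def prior_step(x, u, lam, rho):
    """argmin over v of lam*||v||_1 + (rho/2)*||x - v + u||^2."""
    return [soft_threshold(xi + ui, lam / rho) for xi, ui in zip(x, u)]


def admm(y, lam=1.0, rho=1.0, iterations=100):
    """Scaled-form ADMM: alternate the two steps, then update the dual u."""
    v = [0.0] * len(y)
    u = [0.0] * len(y)
    for _ in range(iterations):
        x = forward_step(y, v, u, rho)
        v = prior_step(x, u, lam, rho)
        u = [ui + xi - vi for ui, xi, vi in zip(u, x, v)]
    return v


y = [3.0, 0.5, -2.0, -0.2, 1.5]  # made-up measurements
print(admm(y))
print([soft_threshold(yi, 1.0) for yi in y])  # closed-form answer for this toy cost
```

This toy cost has a closed-form minimizer, which makes it easy to check the iteration; the value of the splitting shows up in larger problems where neither step is trivial but each can be solved on its own.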

Moving on, chapter 12 offers a systematic, theoretical, and intuitive treatment of the expectation-maximization (EM) algorithm. This algorithm is very useful in a variety of imaging applications because it enables the estimation of model parameters \(\theta\) even when the reconstruction \(\hat{X}\) is unknown. Foundations of Computational Imaging assesses the EM algorithm from both an intuitive, theoretical perspective and a more pragmatic, algorithmic point of view.
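
As a hedged illustration of the EM idea, consider a toy problem that is not from the book: samples are drawn from a two-component Gaussian mixture, and the component labels play the role of the unobserved quantity. The E-step computes posterior label probabilities under the current parameters, and the M-step re-estimates \(\theta\) (here the mixing weight, means, and variances) from those probabilities. All names and numeric values are assumptions made for the sketch.

```python
import math
import random


def gaussian_pdf(v, mean, var):
    return math.exp(-((v - mean) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def log_likelihood(data, theta):
    w, m0, m1, v0, v1 = theta
    return sum(
        math.log((1 - w) * gaussian_pdf(d, m0, v0) + w * gaussian_pdf(d, m1, v1))
        for d in data
    )


def em_step(data, theta):
    w, m0, m1, v0, v1 = theta
    # E-step: posterior probability that each sample came from component 1.
    resp = []
    for d in data:
        p0 = (1 - w) * gaussian_pdf(d, m0, v0)
        p1 = w * gaussian_pdf(d, m1, v1)
        resp.append(p1 / (p0 + p1))
    # M-step: weighted maximum-likelihood updates of the parameters.
    n1 = sum(resp)
    n0 = len(data) - n1
    m0 = sum((1 - r) * d for r, d in zip(resp, data)) / n0
    m1 = sum(r * d for r, d in zip(resp, data)) / n1
    v0 = sum((1 - r) * (d - m0) ** 2 for r, d in zip(resp, data)) / n0
    v1 = sum(r * (d - m1) ** 2 for r, d in zip(resp, data)) / n1
    return (n1 / len(data), m0, m1, v0, v1)


def fit_mixture(data, iterations=50):
    # Crude initialization: put the two means at the extremes of the data.
    theta = (0.5, min(data), max(data), 1.0, 1.0)
    history = [log_likelihood(data, theta)]
    for _ in range(iterations):
        theta = em_step(data, theta)
        history.append(log_likelihood(data, theta))
    return theta, history


random.seed(0)
data = [random.gauss(0.0, 1.0) for _ in range(200)]
data += [random.gauss(5.0, 1.0) for _ in range(200)]
theta, history = fit_mixture(data)
print(theta)
```

The `history` list records the log likelihood after each iteration; a useful sanity check on any EM implementation is that this sequence never decreases.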

Chapters 13, 14, and 15 collectively develop the theory of ergodic Markov chains, Hastings-Metropolis samplers, and Gibbs samplers. These samplers are frequently used to simulate stochastic systems whose statistics follow a Gibbs distribution. Next, chapter 17 develops standard probabilistic machinery that helps to model the Poisson processes that are associated with photon counting detectors. Finally, the four appendices briefly review important topics that appear throughout the book, such as convexity, proximal operators, and Gibbs distributions.
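
A minimal sketch of a Metropolis sampler for a Gibbs distribution \(p(x)\propto e^{-u(x)}\) follows. It assumes a symmetric uniform random-walk proposal and a hypothetical scalar energy \(u(x)=x^2/2\), for which the target is a standard Gaussian; these choices and the function names are for illustration only.

```python
import math
import random


def metropolis(energy, x0, n_samples, width, rng):
    """Random-walk Metropolis sampler for p(x) proportional to exp(-energy(x))."""
    samples = []
    x = x0
    u = energy(x)
    for _ in range(n_samples):
        candidate = x + rng.uniform(-width, width)  # symmetric proposal
        u_candidate = energy(candidate)
        # Accept with probability min(1, exp(-(u_candidate - u))).
        if u_candidate <= u or rng.random() < math.exp(u - u_candidate):
            x, u = candidate, u_candidate
        samples.append(x)  # a rejected move repeats the current state
    return samples


def quadratic_energy(x):
    return 0.5 * x * x


rng = random.Random(0)
samples = metropolis(quadratic_energy, x0=0.0, n_samples=20000, width=2.0, rng=rng)
mean = sum(samples) / len(samples)
variance = sum((s - mean) ** 2 for s in samples) / len(samples)
print(mean, variance)
```

Only energy differences enter the acceptance test, so the normalizing constant of the Gibbs distribution never has to be computed. For this energy the sample mean and variance should land near 0 and 1, up to Monte Carlo error.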

Ultimately, Foundations of Computational Imaging can serve as a valuable asset to coursework that centers on model-based or computational imaging, advanced numerical analysis, data science, numerical optimization, and approximation theory. It is also a handy reference for researchers and practitioners who work in medical, scientific, commercial, and industrial imaging.

Enjoy this passage? Visit the SIAM Bookstore to learn more about Foundations of Computational Imaging: A Model-based Approach and browse other SIAM titles.

The 2024 SIAM Conference on Imaging Science will take place May 28-31 in Atlanta, Ga. Peruse the online program and consider attending the meeting to learn more about computational imaging and additional related areas of study. Bouman’s book and other SIAM texts will be available for purchase at conference discounts.

About the Author

Charles A. Bouman

Showalter Professor, Purdue University

Charles A. Bouman is the Showalter Professor of Electrical and Computer Engineering and Biomedical Engineering at Purdue University and a member of the National Academy of Inventors. His research inspired the first commercial model-based iterative reconstruction system for medical X-ray computed tomography.
