Behavioral Scoring: Markov Chains and Markov Decision Processes

The following is a short reflection from two of the authors of Credit Scoring and Its Applications (Second Edition), which was published by SIAM in 2017 and written by Lyn Thomas, Jonathan Crook, and David Edelman. The updated, more robust second installment is a follow-up to the first edition, which was released in 2002.

This text serves as an analysis of section 7.4, entitled “Behavioral Scoring: Orthodox Markov Chain Approach.” Portions of this summary have come directly from the book and were modified slightly for clarity.

Researchers first suggested the idea of modeling consumers’ repayment and usage behavior in the early 1960s. They sought to identify the different possible states of a borrower’s account and estimate the chance of the account moving from one state to another between billing periods. These states are primarily contingent upon the account’s current position and recent history, but can also depend on the initial application. Therefore, typical information that one could use to determine an account’s state might include its current balance, the number of overdue time periods, and the number of “reminder” letters in the last six months. The object is to define states in such a way that the probability of moving to any particular state at the next billing period is dependent only on the account’s current state; this is the definition of a Markov chain.

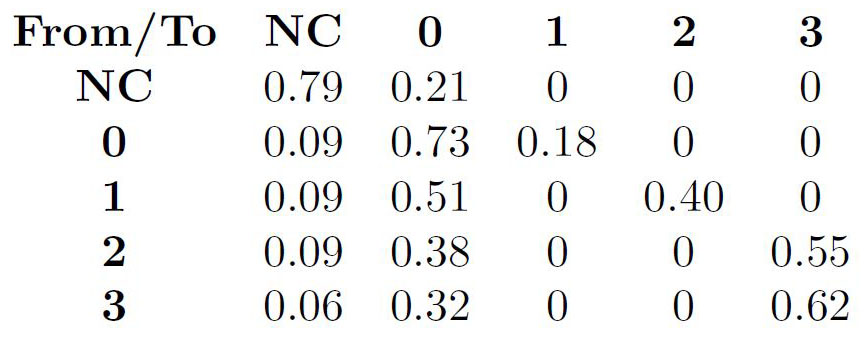

In 1983, Jarl Kallberg and Anthony Saunders employed a simple version of this model [5]. The data in their example led to a stationary transaction matrix (see Figure 1).

Thus, if all of the accounts have no credit—i.e., \(\pi_0=(1,0,0,0,0)\)—at the beginning, the account distribution is \(\pi_1=(0.79, 0.21, 0, 0, 0)\) after one period. After subsequent periods, it becomes

\[\pi_2=(0.64, 0.32, 0.04, 0, 0),\]

\[\pi_4=(0.468, 0.431, 0.070, 0.023, 0.008),\]

\[\pi_{10}=(0.315, 0.512, 0.091, 0.036, 0.046).\]

This is a useful way to estimate the amount of bad debt that will appear in future periods. After 10 periods, the model estimates that 4.6 percent of the accounts will be bad.

One of the first Markov chain models of consumer repayment behavior was a credit card model suggested by Richard Cyert, H. Justin Davidson, and Gerald Thompson [2], wherein each dollar owed would jump from state to state. This model had trouble accounting for conventions and allocating part payments; a different group later addressed these concerns [8]. A. Wayne Corcoran suggested another variant of the model that utilized different transition matrices for accounts with different sized loans [1], and David Edelman proposed a version with different transition matrices for different months of the year [4]. Kallberg and Saunders also investigated models for which the state space depends on the amount of the opening balance and the level of repayments [5].

In more sophisticated Markov chain models, each state \(s\) in the state space has three components: \(s=(b,n,i)\). Here, \(b\) is the outstanding balance, \(n\) signifies the number of current consecutive periods of non-payment, and \(i\) represents the other characteristics of importance. One can use the anticipated one-period reward that the lender makes from a customer in each state to calculate the expected total profit from that customer under any credit limit policy. In fact, it is possible to calculate the credit limit policy that maximizes the profits for a given level of bad debt — or minimizes the bad debt for a given level of profit.

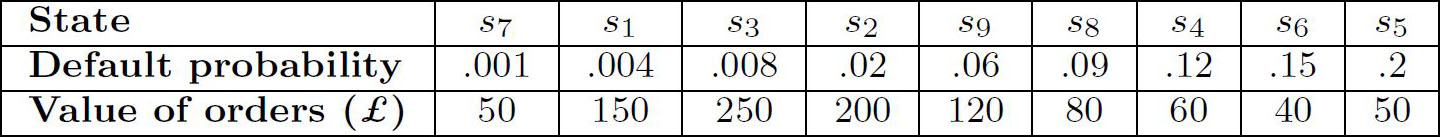

We employ dynamic programming to calculate the chances of defaulting, \(D(b,n,i)\), for a simple example in a mail-order context. Utilizing past data, we develop a table of default probabilities for each state and the expected value of the state’s orders (see Figure 2). The states are ordered by increasing probability of default, where the total expected order value is £1,000. The lenders must then decide on an acceptable default level and value of lost orders. Management has to thus choose which default level \(D^*\) is acceptable. Since \(D(b,n,i)\) is increasing in \(b\), one can solve \(D(L(n, i), n, i) = D^* \) to find the credit limit \(L(n,i)\) for each state \((n,i)\).

This Markov chain model has two elements. The first element is the states, or the consumers’ repayment performance; a transition matrix describes the repayment behavior’s dynamics. The second is a reward function: the value of the consumer’s state to the organization. The addition of a third element—potential decisions of the lender that impact the rewards and transitions between states—yields a Markov decision process, which is an example of stochastic dynamic programming.

We can extend the previous example to solve for the optimal credit limit policy, which serves as a function of the state \(s\) and the number of periods until the end of the time horizon.

More recent Markov decision models that maximize the credit limit policy employ other state descriptions. One research group—which received the Daniel H. Wagner Prize for Excellence in the Practice of Advanced Analytics and Operations Research—utilized states with six components, such as default rate, usage, purchase behavior, and repayments behavior and amount [7]. Each component was split into two, three, or four bands for a total of nearly 600 behavioral states. Actions in this model included setting the credit limit (10 levels) and deciding what interest rate to charge (five levels).

Meko So and Lyn Thomas allowed 10 credit limits and used behavioral score bands—along with “defaulted,” “closed,” and “inactive”—as their model’s eight states [6]. They found that a second-order Markov chain that defines the state based on the current and previous behavioral score bands provides a better fit to the data. They also introduced a method that estimates the transition probabilities from states with minimal data.

One can apply the Markov decision approach to optimize the collection process for defaulted loans. In this case, the lender seeks to recover as much of the defaulted debt as possible; the amount recovered as a fraction of the original defaulted debt is called the recovery rate. Lenders can undertake a number of actions with varying levels of harshness and cost implications, ranging from gentle reminder telephone calls to legal action. They must also decide the length of time for which they will undertake an action and plan the actions’ sequence. The defaulters’ state is described by a lender’s current collection action, the ongoing duration of that action, and the recovery rate that was obtained from the borrower when the current action began. Researchers used this type of Markov decision process model to find the sequence and duration of actions that maximize the expected recovery rate [3].

Enjoy this passage? Visit the SIAM bookstore to learn more about Credit Scoring and Its Applications and browse other SIAM titles.

References

[1] Corcoran, A.W. (1978). The use of exponentially smoothed transition matrices to improve forecasting of cash flows from accounts receivable. Manag. Sci., 24(7), 732-739.

[2] Cyert, R.M., Davidson, H.J., & Thompson, G.L. (1962). Estimation of allowance for doubtful accounts by Markov chains. Manag. Sci., 8(3), 287-303.

[3] De Almeida-Filho, A.T., Mues, C., & Thomas, L.C. (2010). Optimizing the collections process in consumer credit. Prod. Oper. Manag., 19(6), 698-708.

[4] Edelman, D.B. (1992). An application of cluster analysis in credit control. IMA J. Manag. Math., 4(1), 81-87.

[5] Kallberg, J.G., & Saunders, A. (1983). Markov chain approach to the analysis of payment behavior of retail credit customers. Fin. Manag., 12(2), 5-14.

[6] So, M.M.C., & Thomas, L.C. (2011). Modelling the profitability of credit cards by Markov decision processes. Euro. J. Operation. Res., 212(1), 123-130.

[7] Trench, M.S., Pederson, S.P., Lau, E.T., Ma, L., Wang, H., & Nair, S.K. (2003). Managing credit lines and prices for Bank One credit cards. Interfaces, 33(5), 4-21.

[8] Van Kuelen, J.A.M., Spronk, J., & Corcoran, A.W. (1981). Note on the Cyert-Davidson-Thompson doubtful accounts model. Manag. Sci., 27(1), 108-112.

About the Authors

David Edelman

Credit Risk Management

David Edelman, who holds degrees in statistics from the University of Glasgow and Harvard University, embarked on an academic career before moving into banking. He then spent 17 years working in credit risk management for two major Scottish banks. In 2003, Edelman began operating as a freelance consultant, interim manager, and trainer. In the past 17 years, he has served hundreds of clients from over 30 countries and across five continents.

Jonathan Crook

Professor, University of Edinburgh

Jonathan Crook is Professor of Business Economics and Director of the Credit Research Centre at the University of Edinburgh’s Business School. His research concentrates on statistical models to predict credit risk. Crook has published almost 70 journal articles, mostly on credit scoring, and has co-authored or co-edited five books. He has also acted as a credit scoring consultant for a number of banks.

Stay Up-to-Date with Email Alerts

Sign up for our monthly newsletter and emails about other topics of your choosing.