Bridging the Worlds of Quantum Computing and Machine Learning

The emergence of machine learning—particularly deep learning—in nearly every scientific and industrial sector has ushered in the era of artificial intelligence (AI). On a parallel trajectory, quantum computing was once considered largely theoretical but has now become a reality. The fusion of these two powerful disciplines has created an unprecedented avenue for innovation, ultimately giving rise to quantum machine learning (QML). This novel concept promises to revolutionize computational science, data analytics, and predictive modeling in a wide variety of areas, from optimization to pattern recognition (see Figure 1).

Quantum computing offers computational horsepower that could speed up complex machine learning algorithms, while machine learning provides a toolkit for optimizing quantum circuits and decoding quantum states. Here, we consider how QML, especially quantum deep learning and quantum large language models (QLLMs), can redefine the future of machine learning.

How Quantum Computing Can Benefit Deep Learning

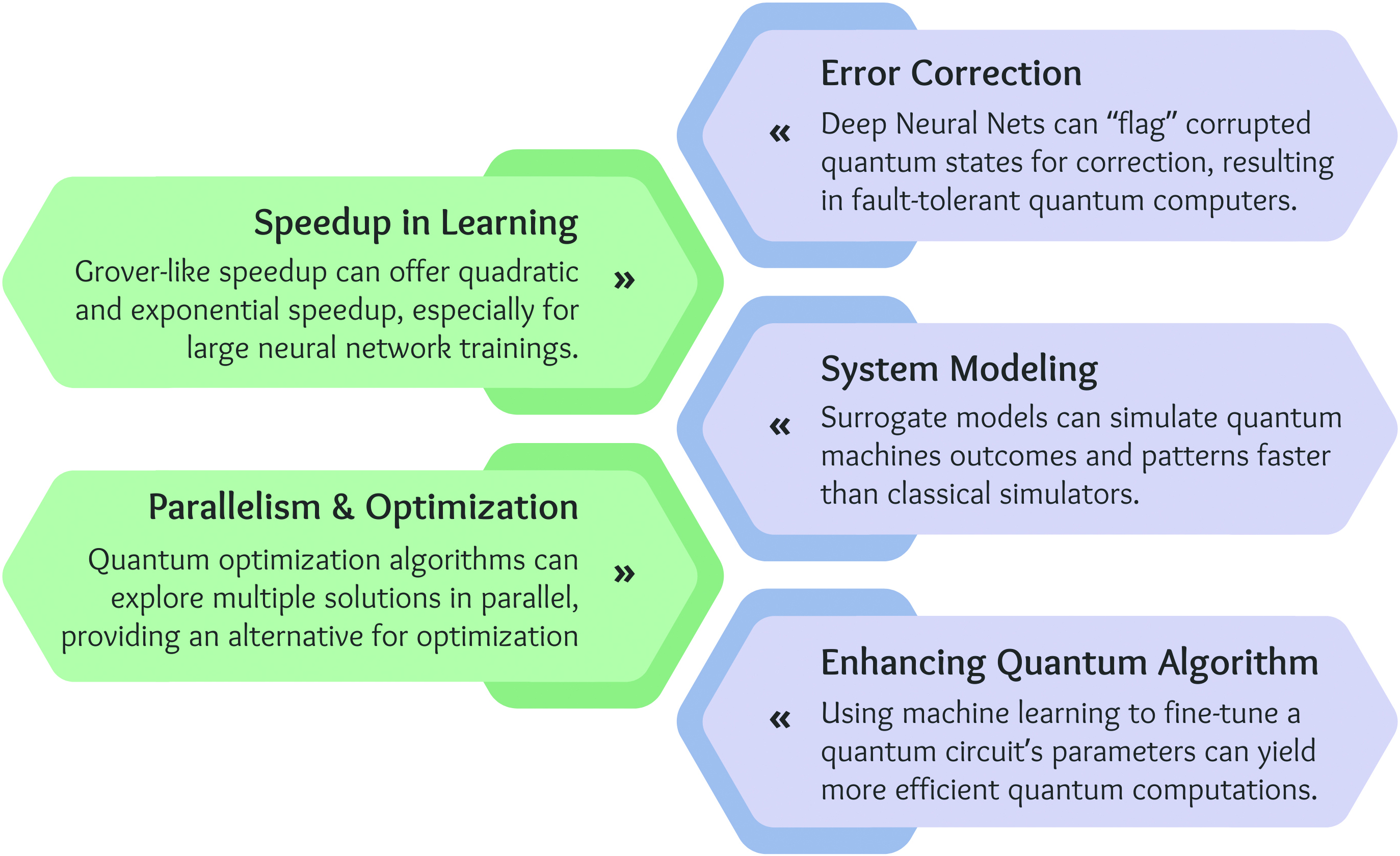

Quantum Speedup in Learning Algorithms: Quantum computing can have an immediate and substantial impact on algorithmic speedup, which is particularly relevant for machine learning and deep learning applications. A number of QML algorithms are modeled after Grover’s algorithm, which offers a quadratic speedup in unstructured search problems (on the order of √N oracle queries instead of N); quantum versions of support vector machines and several clustering methods exemplify this improvement [8]. Grover-like speedup could potentially reduce the training time for large neural networks.
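To make the scaling of Grover's algorithm concrete, the following is a minimal sketch that simulates the search classically on a small vector of amplitudes. It is an illustration under stated assumptions rather than one of the QML algorithms surveyed in [8]: the function name `grover_search`, the problem size of 1,024 items, and the marked index are all hypothetical choices.

```python
import math
from typing import Tuple


def grover_search(n_items: int, marked: int) -> Tuple[int, float]:
    """Simulate Grover's search with real amplitudes.

    Returns the number of Grover iterations performed and the final
    probability of measuring the marked index.
    """
    # Start in the uniform superposition over all items.
    amps = [1.0 / math.sqrt(n_items)] * n_items
    # Near-optimal iteration count, roughly (pi / 4) * sqrt(N).
    iterations = math.floor(math.pi / 4.0 * math.sqrt(n_items))
    for _ in range(iterations):
        # Oracle: flip the sign of the marked amplitude.
        amps[marked] = -amps[marked]
        # Diffusion: reflect every amplitude about the mean amplitude.
        mean = sum(amps) / n_items
        amps = [2.0 * mean - a for a in amps]
    return iterations, amps[marked] ** 2


# Hypothetical problem: 1,024 items, one of which (index 123) is marked.
iterations, p_success = grover_search(n_items=1024, marked=123)
print(iterations, round(p_success, 4))
```

For 1,024 items, the loop runs 25 Grover iterations, whereas a classical unstructured search needs about 512 lookups on average. The gap grows with the square root of the problem size, not exponentially.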

Quantum Neural Networks (QNNs): Traditional neural networks face computational limitations, especially as they grow in size and complexity. In contrast, QNNs aim to exploit quantum properties such as superposition and entanglement to carry out computations more efficiently [9]. Despite the initial limitations of QNNs, hybrid quantum-classical networks have shown promising results on machine learning tasks.
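As a small illustration of the two properties named above, the sketch below builds a two-qubit Bell state with a plain state-vector simulation: a Hadamard gate puts the first qubit into superposition, and a CNOT gate entangles it with the second. The helper names are hypothetical, and the example is not itself a QNN; it only shows the kind of primitives from which QNN circuits are assembled.

```python
import math

# Amplitudes are ordered |00>, |01>, |10>, |11>,
# with the first qubit as the high bit.


def hadamard_on_first(state):
    """Apply a Hadamard gate to the first qubit of a two-qubit state."""
    s = 1.0 / math.sqrt(2.0)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11),
            s * (a00 - a10), s * (a01 - a11)]


def cnot_first_controls_second(state):
    """Flip the second qubit whenever the first qubit is 1."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


state = [1.0, 0.0, 0.0, 0.0]                # start in |00>
state = hadamard_on_first(state)            # superposition of |00> and |10>
state = cnot_first_controls_second(state)   # entangled state of |00> and |11>

# Amplitudes are real here, so squaring gives measurement probabilities.
probabilities = [a * a for a in state]
print(probabilities)
```

The only outcomes with nonzero probability are 00 and 11, each with probability one half: the two qubits are individually random but perfectly correlated.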

Quantum Natural Language Processing (QNLP): Deep learning and large language models like GPT-4 are becoming integral parts of our world, with applications that range from natural language processing to decision-making algorithms. While these models are undeniably transforming various fields, there is a growing but often overlooked concern about their environmental impact. Training extensive AI/machine learning models requires significant computational resources and generates a substantial carbon footprint. In fact, a 2019 study estimated that training a single large neural network could emit the same amount of carbon that five cars produce over their entire lifetimes [10]. This alarming reality, which illuminates the significant environmental costs that are often overshadowed by technological advancements, calls for an immediate reassessment of the sustainability of current machine learning practices — especially in light of the global urgency to combat climate change.

Quantum computing might offer a more energy-efficient way to train and deploy large language models. Preliminary research in QNLP indicates the potential ability of quantum states to capture semantic relationships between words, which could lay the foundation for more advanced natural language processing systems [6]. QLLMs will likely impact this research area, especially when simulating human-like conversation with high accuracy.

Quantum Parallelism and Optimization: A defining feature of quantum computing is its ability to perform parallel computations through superposition — an invaluable property for optimization problems, which are the underlying theme of machine learning algorithms. The Quantum Approximate Optimization Algorithm (QAOA) is potentially able to optimize complex functions that are classically hard to solve [4]. QAOA employs quantum parallelism to simultaneously explore multiple solutions, thus providing a much-needed alternative for the optimization of deep learning models. Another promising quantum algorithm is the variational quantum eigensolver (VQE) [7]. Many machine learning algorithms hinge on the solution of eigenvalue problems, so the VQE could significantly expedite these calculations on quantum hardware.
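The variational idea behind the VQE can be sketched on a single qubit. The example below is a toy under stated assumptions, not the photonic implementation in [7]: the Hamiltonian H = Z + 0.5 X, the one-parameter ansatz Ry(θ)|0⟩, the learning rate, and all function names are hypothetical choices. The "quantum" part is simulated classically, and the gradient uses the parameter-shift rule, which a real device could evaluate by running the same circuit at two shifted angles.

```python
import math


def energy(theta: float) -> float:
    """Expectation of the toy Hamiltonian H = Z + 0.5 X in Ry(theta)|0>."""
    # Ry(theta)|0> has amplitudes (cos(theta/2), sin(theta/2)).
    a0, a1 = math.cos(theta / 2.0), math.sin(theta / 2.0)
    expect_z = a0 * a0 - a1 * a1
    expect_x = 2.0 * a0 * a1
    return expect_z + 0.5 * expect_x


def parameter_shift_gradient(theta: float) -> float:
    """Gradient of the energy from two shifted circuit evaluations."""
    shift = math.pi / 2.0
    return 0.5 * (energy(theta + shift) - energy(theta - shift))


# Classical outer loop: plain gradient descent on the circuit parameter.
theta = 0.1
learning_rate = 0.2
for _ in range(200):
    theta -= learning_rate * parameter_shift_gradient(theta)

vqe_energy = energy(theta)
# Smallest eigenvalue of Z + 0.5 X, known in closed form for this toy case.
exact_ground_energy = -math.sqrt(1.0 + 0.5 ** 2)
print(vqe_energy, exact_ground_energy)
```

The optimized energy matches the smallest eigenvalue of the toy Hamiltonian. For larger systems the closed-form check is unavailable, which is why the quantum evaluation of the energy is the part of interest.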

How Deep Learning Can Benefit Quantum Computing

Machine Learning for Quantum Error Correction: Quantum error correction is vital in the creation of reliable quantum computers. In classical computers, error correction is relatively straightforward; errors usually arise when binary digits (bits) flip from 0 to 1 or vice versa, and several techniques—such as parity checks—can identify and correct them. But in quantum computing, phase errors and other quantum decoherence mechanisms may also disrupt the delicate quantum states. Although traditional quantum error correction techniques—like surface codes and cat codes—are effective, they require extensive resources.
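The classical side of this comparison can be sketched with a three-bit repetition code, in which two parity checks locate and undo any single bit flip. The function names below are hypothetical. The same pair of parity checks reappears in the three-qubit bit-flip code, but a purely classical scheme like this one has no way of detecting phase errors.

```python
def encode(bit):
    """Protect one bit by repeating it three times."""
    return [bit, bit, bit]


def syndrome(word):
    """Two parity checks: bits 0 and 1, then bits 1 and 2."""
    return (word[0] ^ word[1], word[1] ^ word[2])


def correct(word):
    """Use the syndrome to locate and undo a single bit flip."""
    # Each nonzero syndrome points at exactly one position.
    flip_position = {(1, 0): 0, (1, 1): 1, (0, 1): 2}
    fixed = list(word)
    s = syndrome(word)
    if s in flip_position:
        fixed[flip_position[s]] ^= 1
    return fixed


# Flip the middle bit of an encoded 1 and recover it.
received = encode(1)
received[1] ^= 1
recovered = correct(received)
print(received, syndrome(received), recovered)
```

Note that the syndrome identifies the flipped position without revealing the encoded bit itself, which is the feature that quantum codes rely on when they measure parities instead of the data qubits.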

Machine learning methods have shown promise in error detection and correction. We can train deep neural networks to identify quantum errors by learning the intricate patterns through which these errors typically manifest. By doing so, the networks can effectively “flag” corrupted quantum states for correction — even when traditional error correction techniques are computationally expensive or less efficient. This approach could bring fault-tolerant quantum computers closer to reality.

Quantum System Modeling: Deep learning can also assist with the modeling and simulation of complex quantum systems. We can use quantum data to train surrogate models that simulate the original system’s behavior at a faster pace than other classical simulators; analysis of such models may lend insight into behaviors that are difficult to study directly. These surrogate models can pinpoint patterns and properties within quantum systems that otherwise may not be readily identifiable, potentially leading to advancements in molecular science, quantum chemistry, materials science, and other related fields [3].

Quantum Algorithms: Hybrid quantum-classical algorithms that utilize both quantum computers and classical machine learning models are forging new paths for the solution of complex problems in optimization, data analysis, and the like. One major machine learning application in quantum computing is the optimization of traditional quantum algorithms. For example, reinforcement learning can fine-tune a quantum circuit’s parameters and yield more efficient and effective quantum computations [3].

Open Problems in the Era of Noisy Intermediate-scale Quantum Computing

A key challenge that presently impacts quantum computing in general (and QML in particular) is the limitation of existing quantum hardware. Current gate-based quantum computers are predominantly classified as noisy intermediate-scale quantum (NISQ) devices. These devices often have a limited number of quantum bits (qubits), ranging from tens of qubits to a few hundred, though machines with several thousand qubits are under development. Computers in this transitional period are not yet fully fault tolerant and are constrained by physical limitations like decoherence and gate errors, which affect their ability to maintain high-quality entanglement and achieve a high circuit depth. Despite these issues, NISQ devices can perform certain computational tasks more efficiently than their classical counterparts. Nevertheless, the limited number of qubits and relatively large error rates complicate the implementation of complex QNNs on these machines. Even before the issue of algorithmic design arises, NISQ computers must handle intrinsic imperfections such as the aforementioned decoherence and gate errors [1].

While QML in the NISQ era faces unique challenges—especially concerning hardware limitations—it also presents exciting research opportunities for interdisciplinary collaborations between computer scientists, applied mathematicians, and physicists. A variety of techniques are paving the way for increased QNN compatibility with NISQ-era devices, including variational circuits, error mitigation, and hybrid models. As these methods mature, the prospect of QML implementation in near-term quantum computing becomes even more promising.

One of the most popular approaches in this regard is the use of variational circuits: shallow, parameterized quantum circuits that are adaptable to NISQ-era constraints. In this approach, a classical optimizer tunes the circuit parameters while the quantum component of the computation executes specific subroutines [7]. Error mitigation techniques, such as zero-noise extrapolation, also help to reduce the effect of noise in the system. By running the same quantum operation multiple times at deliberately varied noise levels, users can extrapolate to the zero-noise limit and thereby estimate and correct for the impact of errors. A third approach for NISQ-era quantum computers integrates quantum computing into classical neural networks as hybrid quantum-classical models [2]. Doing so allows the quantum portions of the model to focus on specific tasks that suit them well, such as complex optimizations, while offloading other tasks to the classical system. Finally, we note that effective methods for quantum data encoding (i.e., encoding classical data into quantum states) remain an open problem. Current approaches either suffer from inefficiencies or lack the ability to capture the richness of classical data [5].
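The zero-noise extrapolation mentioned above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the exponential noise model in `noisy_expectation`, its decay rate, the scale factors, and the function names are all hypothetical stand-ins for measurements that would come from hardware, where scale factor 1 represents the device's native noise and larger factors represent deliberately amplified noise. The extrapolation itself is a Richardson-style polynomial fit evaluated at zero noise.

```python
import math
from typing import Sequence


def noisy_expectation(scale: float, ideal: float = 1.0,
                      decay: float = 0.1) -> float:
    """Hypothetical noise model: the signal decays with the noise scale."""
    return ideal * math.exp(-decay * scale)


def richardson_extrapolate(scales: Sequence[float],
                           values: Sequence[float]) -> float:
    """Evaluate at zero the polynomial through the (scale, value) pairs."""
    estimate = 0.0
    for i, (s_i, v_i) in enumerate(zip(scales, values)):
        # Lagrange basis weight for point i, evaluated at scale = 0.
        weight = 1.0
        for j, s_j in enumerate(scales):
            if j != i:
                weight *= s_j / (s_j - s_i)
        estimate += weight * v_i
    return estimate


scales = [1.0, 2.0, 3.0]
values = [noisy_expectation(s) for s in scales]

raw = values[0]                                   # unmitigated result
mitigated = richardson_extrapolate(scales, values)
print(raw, mitigated)
```

Under this toy model the ideal value is 1.0, and the extrapolated estimate lands much closer to it than the unmitigated measurement does. On real devices the benefit depends on how well the chosen fit matches the actual noise, and the extra circuit runs add sampling cost.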

Concluding Thoughts

As we venture deeper into the realms of AI and quantum mechanics, the convergence of these two technologies offers unparalleled potential. The synergistic relationship between quantum computing and machine learning necessitates a concrete interdisciplinary framework wherein quantum physicists, computer scientists, and applied mathematicians can work together to develop robust, scalable, and applicable quantum algorithms for machine learning.

References

[1] Arute, F., Arya, K., Babbush, R., Bacon, D., Bardin, J.C., Barends, R., … Martinis, J.M. (2019). Quantum supremacy using a programmable superconducting processor. Nature, 574, 505-510.

[2] Cao, Y., Romero, J., Olson, J.P., Degroote, M., Johnson, P.D., Kieferová, M., … Aspuru-Guzik, A. (2019). Quantum chemistry in the age of quantum computing. Chem. Rev., 119(19), 10856-10915.

[3] Carrasquilla, J. (2020). Machine learning for quantum matter. Adv. Phys. X, 5(1), 1797528.

[4] Farhi, E., Goldstone, J., & Gutmann, S. (2014). A quantum approximate optimization algorithm. Preprint, arXiv:1411.4028.

[5] Liang, Z., Song, Z., Cheng, J., He, Z., Liu, J., Wang, H., … Shi, Y. (2022). Hybrid gate-pulse model for variational quantum algorithms. Preprint, arXiv:2212.00661.

[6] Meichanetzidis, K., Gogioso, S., de Felice, G., Chiappori, N., Toumi, A., & Coecke, B. (2020). Quantum natural language processing on near-term quantum computers. Preprint, arXiv:2005.04147.

[7] Peruzzo, A., McClean, J., Shadbolt, P., Yung, M.-H., Zhou, X.-Q., Love, P.J., … O’Brien, J.L. (2014). A variational eigenvalue solver on a photonic quantum processor. Nat. Commun., 5, 4213.

[8] Ramezani, S.B., Sommers, A., Manchukonda, H.K., Rahimi, S., & Amirlatifi, A. (2020). Machine learning algorithms in quantum computing: A survey. In 2020 international joint conference on neural networks (IJCNN) (pp. 1-8). Institute of Electrical and Electronics Engineers.

[9] Schuld, M., Sinayskiy, I., & Petruccione, F. (2015). An introduction to quantum machine learning. Contemp. Phys., 56(2), 172-185.

[10] Strubell, E., Ganesh, A., & McCallum, A. (2019). Energy and policy considerations for deep learning in NLP. In Proceedings of the 57th annual meeting of the Association for Computational Linguistics (pp. 3645-3650). Florence, Italy: Association for Computational Linguistics.

About the Authors

Somayeh Bakhtiari Ramezani

Ph.D. Candidate, Mississippi State University

Somayeh Bakhtiari Ramezani holds a Ph.D. in computer science from Mississippi State University. She is a 2023 Southeastern Conference Emerging Scholar and a 2021 Computational and Data Science Fellow of the Association for Computing Machinery’s Special Interest Group on High Performance Computing. Ramezani’s research interests include probabilistic modeling and optimization of dynamic systems, quantum machine learning, and time series segmentation.

Amin Amirlatifi

Associate Professor, Mississippi State University

Amin Amirlatifi is an endowed professor and an associate professor of chemical and petroleum engineering in the Swalm School of Chemical Engineering at Mississippi State University. His research interests include numerical modeling and optimization, quantum computing, and the application of artificial intelligence and machine learning in predictive maintenance and the energy sector.
