Digital Twins of the Earth System via Hybrid Physics-AI Models

The Earth system is a nonlinear, multiscale, multiphysics dynamical system that includes the atmosphere, ocean, land, cryosphere, and biosphere. Representing this system requires resolving numerous processes across a range of spatiotemporal scales: from micrometers (e.g., cloud microphysics) to thousands of kilometers (e.g., planetary circulation), and from seconds to centuries. This breadth forces an important balance in model design: spatiotemporal resolution and physical fidelity versus computational cost and time to solution. This balance is a central consideration in the development of general circulation models (GCMs) and their more comprehensive forms, Earth system models (ESMs). Collectively, these models comprise mathematical equations that describe the Earth system’s physical processes. Scientists use them to simulate weather and climate, though they inevitably carry the limitations and uncertainties inherent in representing the real world.

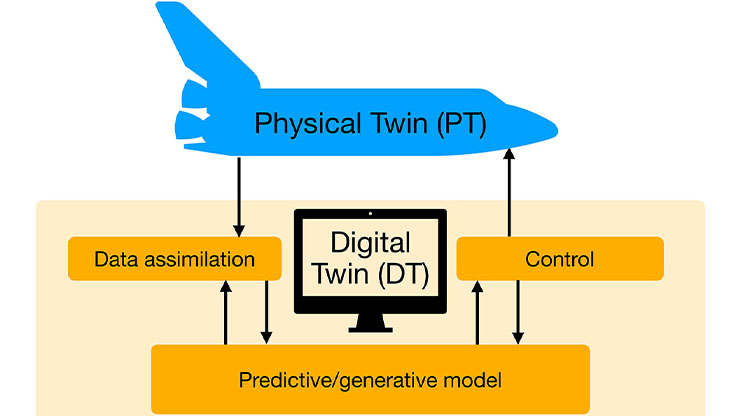

Building upon these foundations, digital twins (virtual replicas of real-world systems that combine models, data, and widely used decision frameworks from engineering and other applied science disciplines [2]) are an emerging paradigm in the Earth system domain. For example, Destination Earth is a European Commission initiative that applies digital twin technology to simulate weather and climate [10]. Indeed, consider the formal definition of a digital twin: “a set of virtual information constructs that mimics the structure, context and behavior of an individual/unique physical asset, or a group of physical assets, is dynamically updated with data from its physical twin throughout its life cycle, and informs decisions that realize value” [1]. This definition contains three key components that are already part of Earth system modeling workflows, especially in the context of weather and climate simulations. These are as follows:

- A model (a set of virtual information constructs)

- Data (from its physical twin counterpart)

- Decisions that realize value

The underlying models are GCMs and ESMs. They are continuously updated with data from their physical twin—i.e., the Earth—that originate from satellite or air- and ground-based observations. The models’ outputs inform decisions that realize value, from advanced warnings of impending hurricanes to assessments of long-term climate risks that guide policy, adaptation, and mitigation strategies.

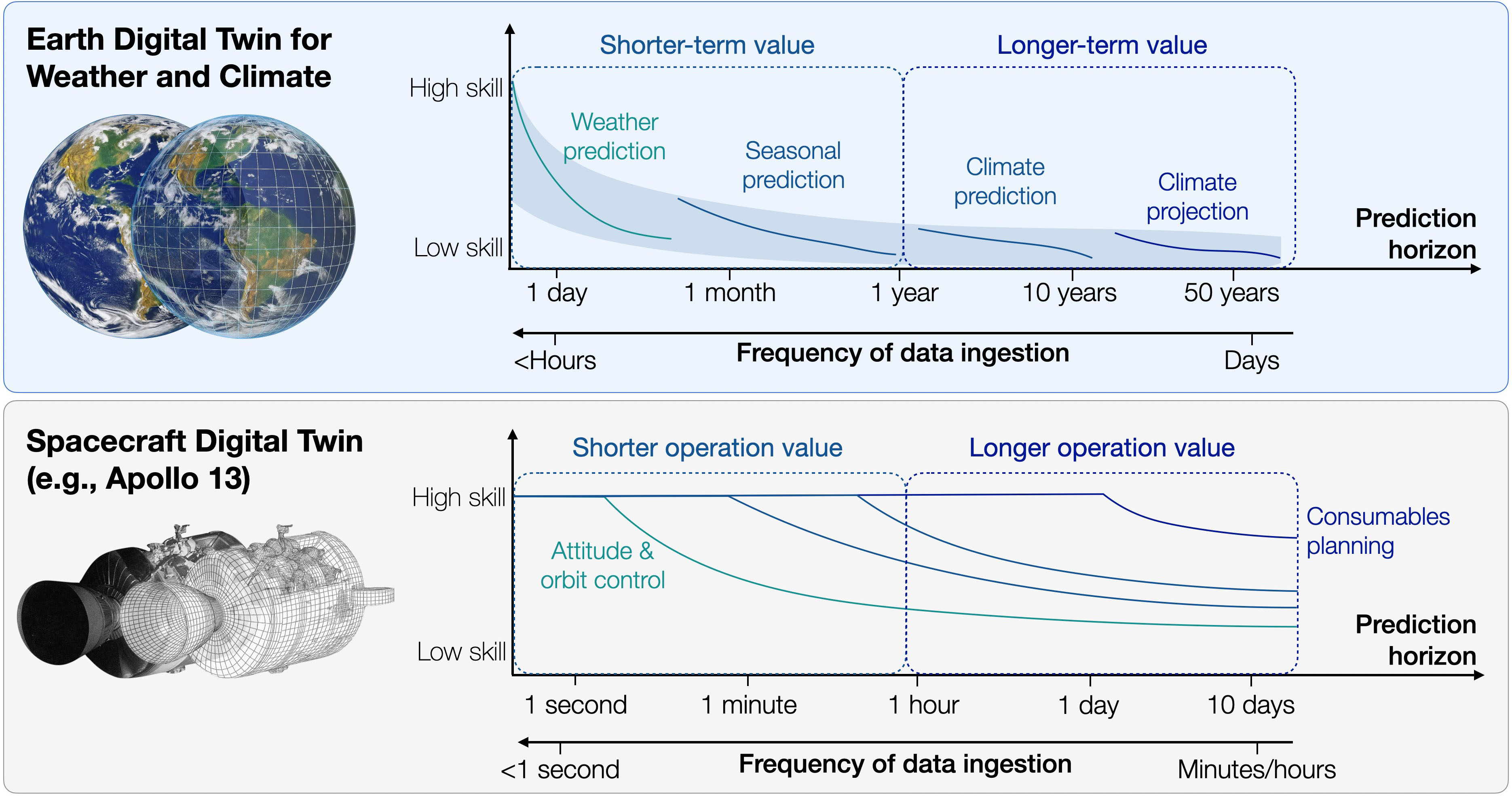

Time is extremely important here, both in terms of (i) dynamical updates of the digital twin with data and (ii) the time frame over which the twin informs decisions that realize value. A common assumption is that a digital twin should be continuously updated with data from its physical twin. However, “continuously” can have different meanings depending on the context. The key is to provide information for decisions that realize value, and those decisions occur at different time scales based on the predictability horizon of the application in question and the cost-loss balance of the envisioned action. Time scales can range from minutes to hours (e.g., nowcasting for aviation and flash flood alerts), days to weeks (e.g., evacuations or electricity grid dispatch), season to season (e.g., crop-sowing windows or reservoir operations), or even years to decades (e.g., infrastructure design, insurance, and climate policy). These horizons determine the frequency at which data from the physical twin should be ingested. Both the prediction horizon and the frequency of data ingestion therefore depend on the specific application; for instance, the data update requirements and prediction horizons of a spacecraft are quite different from those of Earth’s weather and climate applications (see Figure 1).
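The cost-loss balance mentioned above is often formalized with the classic static cost-loss model, which the text does not spell out: a user who can pay a protection cost C to avoid a loss L should act when the forecast probability of the event exceeds C/L. The following is a minimal sketch under that assumption. The ensemble values, the 50 mm threshold, and the cost and loss figures are hypothetical and chosen only for illustration.

```python
def event_probability(ensemble, threshold):
    """Fraction of ensemble members at or above the event threshold."""
    hits = sum(1 for member in ensemble if member >= threshold)
    return hits / len(ensemble)


def should_act(probability, cost, loss):
    """Static cost-loss rule: act when the expected avoided loss exceeds the cost."""
    return probability * loss > cost


# Hypothetical 10-member ensemble of 24-hour rainfall totals (mm).
rain_mm = [12.0, 55.0, 8.0, 61.0, 47.0, 70.0, 15.0, 52.0, 9.0, 66.0]

# Probability that rainfall reaches a hypothetical 50 mm flood threshold.
p_flood = event_probability(rain_mm, threshold=50.0)

# Hypothetical user: protecting costs 1 unit, an unprotected flood costs 4 units.
act = should_act(p_flood, cost=1.0, loss=4.0)
print(f"P(flood) = {p_flood:.2f}, act = {act}")
```

Under this rule, a user with a small cost-to-loss ratio acts on low-probability forecasts, while a user with an expensive protective action waits for high confidence. This is one way in which the same forecast can serve decisions on different horizons.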

A Matter of Trade-off

As I mentioned, a key consideration in Earth system modeling is the trade-off between a “faithful” representation of the system (i.e., spatiotemporal resolution and physical fidelity) and computational cost and time to solution. GCMs and ESMs commonly run at relatively coarse resolutions (approximately 50 kilometers), resolving large-scale dynamics but not small-scale processes like turbulence, convection, and cloud microphysics. The unresolved small-scale processes are typically parameterized in one of two ways: with relatively simplistic empirical models called subgrid parameterizations, such as those in version 5 of the Community Atmosphere Model [6], or with more accurate (but more expensive) resolving models called super parameterizations, such as the Super-parameterized Community Atmosphere Model (SPCAM) [3], which are embedded in the coarse GCM/ESM. The trade-off is clear: spatiotemporal resolution is sacrificed down to a coarse grid of roughly 50 kilometers, which forces us to represent the unresolved processes via parameterizations. The choice of parameterization (subgrid or super parameterization) is itself a trade-off between accuracy and cost.

The throughput of a super-parameterized GCM with embedded cloud-resolving models may be as low as approximately 0.05 simulated years per day (SYPD) on a single central processing unit node (24 cores), whereas throughput for a simpler subgrid-parameterized GCM could be roughly 4 SYPD [9]. These costs scale quickly for long climate runs; for instance, at 0.05 SYPD a 50-year super-parameterized simulation would take about 1,000 days on one node, or optimistically (assuming perfect parallel scaling) one day on 1,000 nodes. In addition, single simulations are insufficient in a chaotic system like the Earth system, where prediction must be treated probabilistically [7]. To this end, ensembles (typically between 10 and 100 members) are a critical component of weather and climate simulation workflows, which further increases costs.
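The arithmetic behind these figures can be reproduced in a few lines. The sketch below assumes ideal (perfectly linear) scaling across nodes and ensemble members, which real systems do not achieve; the function name and the 10-member ensemble are illustrative choices, while the throughput values are the approximate ones quoted above.

```python
def wall_clock_days(simulated_years, sypd, nodes=1, members=1):
    """Wall-clock days for an ensemble, assuming ideal scaling across nodes.

    sypd is the single-node throughput in simulated years per day.
    """
    return simulated_years * members / (sypd * nodes)


# 50-year run of a super-parameterized GCM at ~0.05 SYPD on one node.
sp_one_node = wall_clock_days(50, sypd=0.05)

# Same run spread over 1,000 nodes (optimistic ideal scaling).
sp_many_nodes = wall_clock_days(50, sypd=0.05, nodes=1000)

# 50-year run of a subgrid-parameterized GCM at ~4 SYPD on one node.
subgrid_one_node = wall_clock_days(50, sypd=4.0)

# A hypothetical 10-member super-parameterized ensemble on 1,000 nodes.
sp_ensemble = wall_clock_days(50, sypd=0.05, nodes=1000, members=10)

for label, days in [
    ("super-parameterized, 1 node", sp_one_node),
    ("super-parameterized, 1,000 nodes", sp_many_nodes),
    ("subgrid-parameterized, 1 node", subgrid_one_node),
    ("super-parameterized, 10 members, 1,000 nodes", sp_ensemble),
]:
    print(f"{label}: {days:.1f} days")
```

Even with ideal scaling, the ensemble multiplies the cost by the number of members, which is why throughput gains matter for probabilistic climate simulation.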

While the European Centre for Medium-Range Weather Forecasts now runs operational global ensembles at roughly 9 kilometers and Destination Earth can deliver multi-decadal simulations at 4.4 kilometers with more than 1 SYPD (with Coupled Model Intercomparison Project (CMIP) Phase 7 “fast-track” simulations at 10 kilometers planned for 2027), computational cost is still a leading challenge for policy-relevant simulations. Global 1-kilometer experiments can only reach approximately 1 SYPD on entire exascale systems [8]; as such, the kilometer-scale ensembles that are required for regional climate risk assessment and adaptation planning still demand major efficiency gains in Earth system modeling. Furthermore, models for unresolved small-scale processes contain uncertainties and thus require improvements.

Can Artificial Intelligence Help?

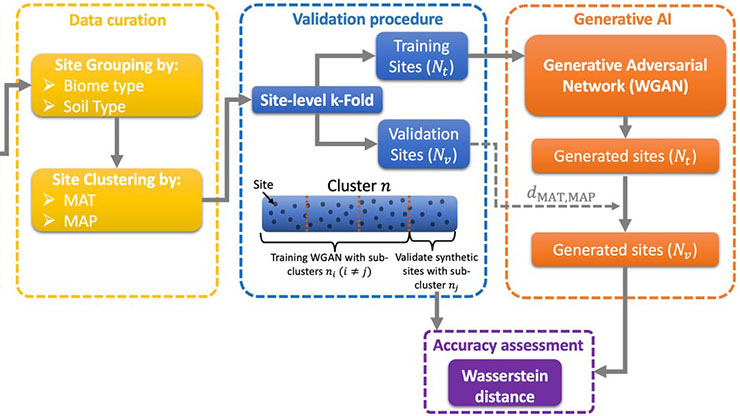

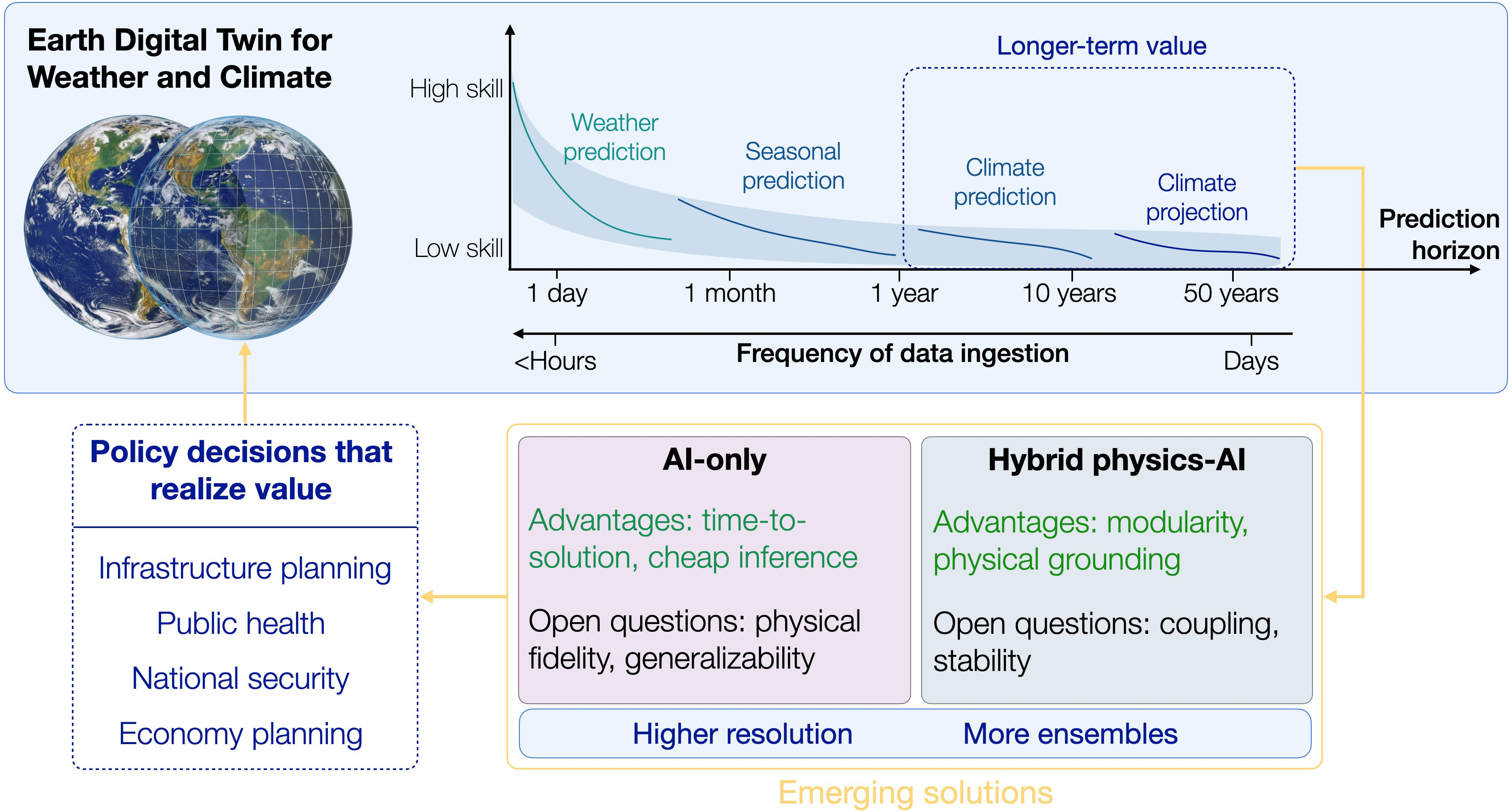

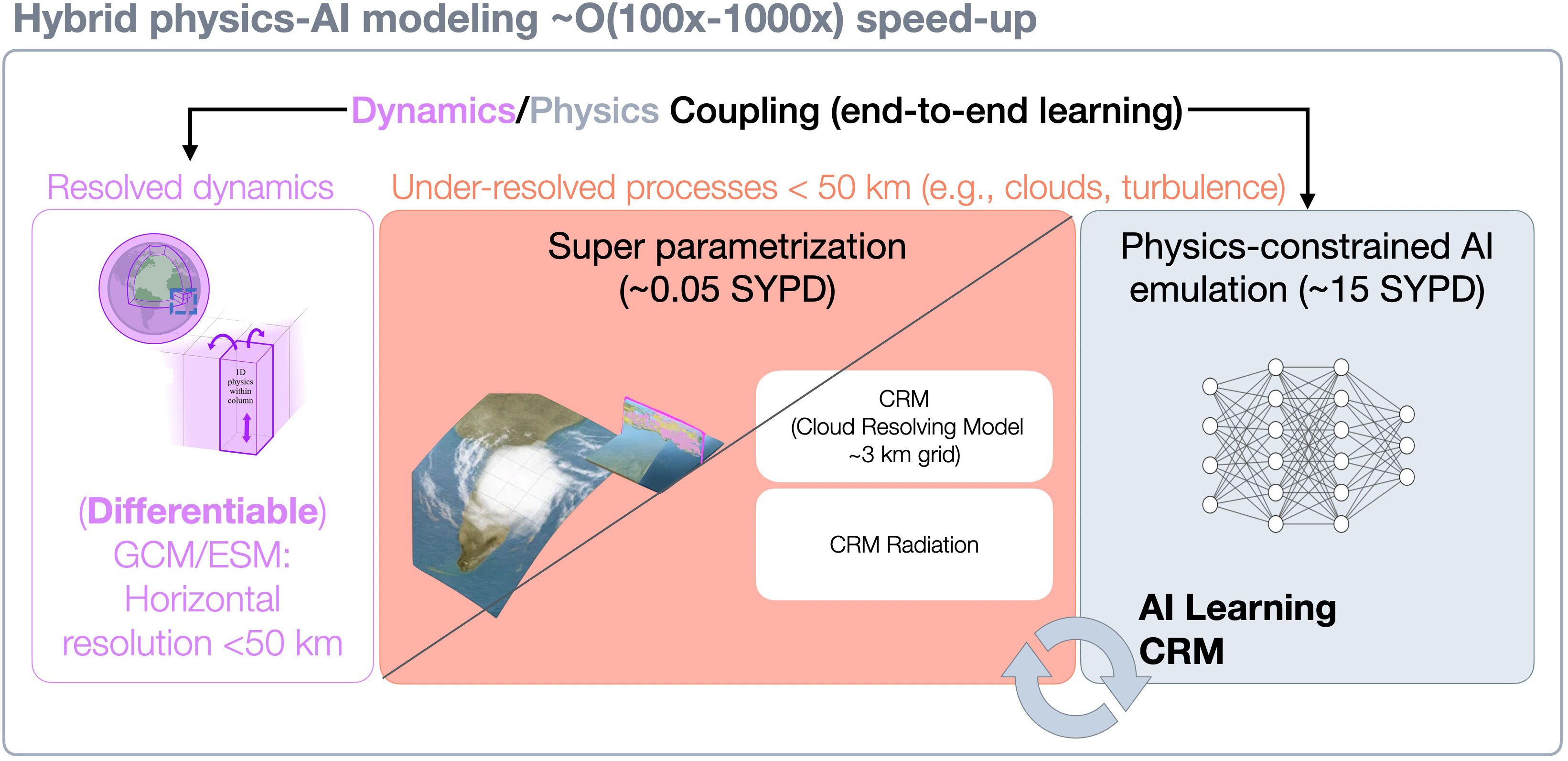

The trade-off between “faithful” models versus computational cost and time to solution, along with the more recent constraint on the amount of energy needed to obtain a solution, has compelled researchers to investigate both artificial intelligence (AI)-only models and hybrid physics-AI models (see Figure 2).

AI-only models are trained on the wealth of data from equation-based physical models, often blended with observations as in reanalysis datasets, but they do not incorporate the governing equations of traditional GCMs or ESMs. Instead, they learn to mimic the entire system’s behavior solely from data in an input-output fashion. This constitutes a form of statistical modeling according to Edward Lorenz’s distinction between dynamical and statistical modeling [5].

In contrast, hybrid physics-AI models integrate AI surrogates to emulate unresolved processes such as cloud microphysics, convection, and turbulence, while the physics-based equations of traditional GCMs and ESMs continue to govern large-scale dynamics (see Figure 3). In this paradigm, AI emulators replace traditional parameterizations of unresolved processes. Subgrid parameterization schemes are overly simplistic, and super parameterizations embed resolving models but are computationally prohibitive. AI surrogates, by contrast, can both accelerate and improve the fidelity of super-parameterized representations by exploiting the growing wealth of high-resolution simulation and observational data that pertains to unresolved processes.
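The structure of such a hybrid model can be sketched with a toy example: the time stepper keeps an equation-based tendency for the resolved dynamics and adds a subgrid tendency supplied by a data-driven surrogate. Everything below is a hypothetical stand-in rather than any real GCM component. The "resolved" dynamics are a simple relaxation toward a forcing, the "expensive" subgrid model is an invented closed-form function, and a least-squares line plays the role that a neural network would play in practice.

```python
def resolved_tendency(state, forcing=8.0):
    """Stand-in for the equation-based large-scale dynamics (relaxation to a forcing)."""
    return [forcing - x for x in state]


def expensive_subgrid(x):
    """Stand-in for a costly resolving model of unresolved processes (hypothetical form)."""
    return -0.3 * x - 0.02 * x**2


def fit_emulator(inputs, targets):
    """Fit a cheap surrogate y ~ intercept + slope * x by ordinary least squares."""
    n = len(inputs)
    mean_x = sum(inputs) / n
    mean_y = sum(targets) / n
    var_x = sum((x - mean_x) ** 2 for x in inputs)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(inputs, targets))
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return lambda x: intercept + slope * x


def hybrid_step(state, subgrid, dt=0.01):
    """One forward-Euler step: physics-based tendency plus emulated subgrid tendency."""
    large_scale = resolved_tendency(state)
    return [x + dt * (f + subgrid(x)) for x, f in zip(state, large_scale)]


# "Training data": samples of the expensive model over the expected state range.
train_x = [0.5 * i for i in range(21)]
train_y = [expensive_subgrid(x) for x in train_x]
emulator = fit_emulator(train_x, train_y)

# Run the hybrid model: the physics stays in place, only the subgrid term is emulated.
state = [1.0, 4.0, 7.0]
for _ in range(1000):
    state = hybrid_step(state, emulator)
print(state)
```

The design point is the interface: `hybrid_step` does not care whether `subgrid` is an empirical scheme, an embedded resolving model, or a trained emulator, so the surrogate can be swapped in without touching the large-scale dynamics.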

Both AI-only and hybrid physics-AI models provide substantial speedups over traditional GCMs and ESMs at the inference phase, though hybrid models typically achieve less acceleration. The challenge with AI-only models is ensuring physical fidelity and generalizability, particularly for long-term climate simulations. Hybrid approaches can help mitigate these issues by learning the underlying fundamental physical principles that govern subgrid processes. By focusing on these building blocks rather than attempting to simultaneously model the entire climate system, such techniques may offer improved generalizability and more interpretable results. According to Lorenz’s aforementioned paradigm, this strategy is more akin to dynamical modeling [5].

The Devil Is in the Details

Although hybrid physics-AI models offer certain important advantages, their use presents a persistent and rather crucial challenge: the coupling between AI emulators of subgrid physical processes and GCM/ESM-driven equation-based dynamics tends to cause long-term simulation stability issues. In other words, long-term climate simulations may fail completely or produce significantly drifted or biased results. A promising hybrid physics-AI model called the physics-constrained neural network GCM (PCNN-GCM) seeks to address this difficulty by leveraging CondensNet, a novel deep learning framework that emulates SPCAM’s cloud-resolving model [9]. Its adaptive physical constraints ensure model stability while retaining physical fidelity. By explicitly correcting unphysical water vapor oversaturation during condensation, CondensNet overcomes the key stability issues in long-term climate simulations. It thus achieves stable multidecadal climate simulations at a fraction of the computational costs of SPCAM—roughly 15 SYPD versus SPCAM’s 0.04 SYPD on a small parallel run—while maintaining comparable accuracy.
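The general idea of correcting unphysical oversaturation can be illustrated with a textbook-style saturation adjustment. The sketch below is not CondensNet’s adaptive constraint, whose details are given in [9]; it only shows how a post-processing step can remove water vapor in excess of saturation from an emulator’s output while conserving total water. It assumes Bolton’s approximation for saturation vapor pressure over liquid water and, for simplicity, ignores the latent heating that real condensation releases. The function names and sample values are hypothetical.

```python
import math


def saturation_specific_humidity(temp_k, pressure_hpa):
    """Saturation specific humidity (kg/kg) using Bolton's approximation (liquid water)."""
    temp_c = temp_k - 273.15
    # Saturation vapor pressure in hPa.
    e_sat = 6.112 * math.exp(17.67 * temp_c / (temp_c + 243.5))
    return 0.622 * e_sat / (pressure_hpa - 0.378 * e_sat)


def constrain_condensation(q_vapor, q_cloud, temp_k, pressure_hpa):
    """Move vapor in excess of saturation into cloud water; total water is conserved.

    Simplification: the temperature change from latent heat release is ignored.
    """
    q_sat = saturation_specific_humidity(temp_k, pressure_hpa)
    excess = max(0.0, q_vapor - q_sat)
    return q_vapor - excess, q_cloud + excess


# Hypothetical emulator output: oversaturated vapor at 20 degrees C and 1000 hPa.
q_v, q_c = constrain_condensation(q_vapor=0.020, q_cloud=0.0,
                                  temp_k=293.15, pressure_hpa=1000.0)
print(f"vapor = {q_v:.5f} kg/kg, cloud water = {q_c:.5f} kg/kg")
```

A constraint of this kind leaves physically valid states untouched and only intervenes when the emulator drifts outside the admissible range, which is the intuition behind using physical constraints to keep a coupled run stable.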

Another noteworthy development in hybrid physics-AI modeling is a differentiable hybrid physics-AI model called NeuralGCM [4]. The differentiability of NeuralGCM stems from the equations that drive large-scale dynamics in the host GCM. This feature enables end-to-end training, which improves the coupling between the AI emulation of subgrid processes and large-scale dynamics.
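To make the role of differentiability concrete, the toy sketch below trains a single subgrid parameter by differentiating through the time stepper itself, so that the loss is measured on the full rollout rather than on isolated subgrid outputs. This is not NeuralGCM’s code: the scalar model, the parameter `theta`, the learning rate, and the hand-written forward sensitivity equation are all assumptions made for illustration, and frameworks with automatic differentiation would handle the derivative bookkeeping automatically.

```python
def rollout_with_sensitivity(theta, x0=1.0, forcing=8.0, dt=0.1, steps=50):
    """Integrate dx/dt = forcing - x + theta * x together with s = dx/dtheta."""
    x, s = x0, 0.0
    states, sens = [], []
    for _ in range(steps):
        # Differentiating the update rule for x with respect to theta gives the
        # update rule for s; both right-hand sides use the previous x and s.
        x, s = (x + dt * (forcing - x + theta * x),
                s + dt * (-s + theta * s + x))
        states.append(x)
        sens.append(s)
    return states, sens


def loss_and_grad(theta, reference):
    """Mean squared rollout error and its exact derivative with respect to theta."""
    states, sens = rollout_with_sensitivity(theta)
    n = len(reference)
    loss = sum((x - r) ** 2 for x, r in zip(states, reference)) / n
    grad = sum(2.0 * (x - r) * s for x, r, s in zip(states, reference, sens)) / n
    return loss, grad


# "High-fidelity" reference trajectory generated with a hidden parameter value.
THETA_TRUE = -0.5
reference, _ = rollout_with_sensitivity(THETA_TRUE)

# End-to-end training: gradient descent on the rollout loss, starting from theta = 0.
theta = 0.0
for _ in range(500):
    _, grad = loss_and_grad(theta, reference)
    theta -= 0.005 * grad
print(f"recovered theta = {theta:.4f}")
```

Because the gradient flows through every time step, the parameter is tuned for how the subgrid term behaves once coupled to the dynamics, not just for how well it fits offline data.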

Together, CondensNet and NeuralGCM offer a possible roadmap for the future of hybrid physics-AI modeling. Bespoke physical constraints in the AI emulation of subgrid processes stabilize long-term simulations while retaining accuracy; differentiability improves the coupling representation between AI emulators and large-scale dynamics, allowing for end-to-end training against high-fidelity data.

Marrying Physics and AI

Hybrid physics-AI models, which combine the efficiency and universal approximation properties of AI methods with the physical consistency of traditional Earth system modeling, constitute a promising pathway toward operational digital twins of the Earth system. Recent advances by PCNN-GCM and NeuralGCM enable stable, long-term simulations at a reduced computational cost, allowing for larger ensemble runs to improve climate simulations and potentially finer spatiotemporal resolutions.

The generalizability of hybrid physics-AI models echoes the 1950s paradigm by Lorenz, who distinguished between statistical forecasting—which is effective when the future resembles the past—and dynamical forecasting, which is grounded in equations that describe the underlying physics [5]. By utilizing AI to learn the fundamental physical principles of subgrid processes that may not change under climate forcing, hybrid models inherit the advantages of dynamical forecasting and offer better prospects in out-of-distribution scenarios.

Time will tell whether digital twins of the Earth system will be hybrid physics-AI ESMs or AI-only schemes. I personally believe that coupling physics and AI is still important, especially for critical applications where interpretable models can clarify successes and failures and help scientists improve digital twins of the Earth.

Ultimately, both AI-only models and hybrid physics-AI models may be moving in the same direction. AI-only models will likely require some physical constraints to improve physical fidelity and generalizability, while hybrid models may rely more heavily on AI by incorporating end-to-end learning via differentiable dynamics.

Gianmarco Mengaldo delivered a minisymposium presentation on this research at the 2025 SIAM Conference on Computational Science and Engineering, which took place in Fort Worth, Texas, this past March.

References

[1] AIAA Digital Engineering Integration Committee. (2020). Digital twin: Definition & value (AIAA and AIA Position Paper). Reston, VA: American Institute of Aeronautics and Astronautics.

[2] Ferrari, A., & Willcox, K. (2024). Digital twins in mechanical and aerospace engineering. Nat. Comput. Sci., 4(3), 178-183.

[3] Khairoutdinov, M.F., & Randall, D.A. (2001). A cloud resolving model as a cloud parameterization in the NCAR community climate system model: Preliminary results. Geophys. Res. Lett., 28(18), 3617-3620.

[4] Kochkov, D., Yuval, J., Langmore, I., Norgaard, P., Smith, J., Mooers, G., … Hoyer, S. (2024). Neural general circulation models for weather and climate. Nature, 632(8027), 1060-1066.

[5] Lorenz, E.N. (1956). Empirical orthogonal functions and statistical weather prediction (Scientific Report No. 1: Statistical Forecasting Project). Cambridge, MA: Massachusetts Institute of Technology’s Department of Meteorology.

[6] Neale, R.B., Gettelman, A., Park, S., Chen, C.-C., Lauritzen, P.H., Williamson, D.L., … Taylor, M.A. (2012). Description of the NCAR community atmosphere model (CAM 5.0) (NCAR Technical Notes: NCAR/TN-486+STR). Boulder, CO: National Center for Atmospheric Research.

[7] Palmer, T. (2017). The primacy of doubt: Evolution of numerical weather prediction from determinism to probability. J. Adv. Model. Earth Syst., 9(2), 730-734.

[8] Taylor, M., Caldwell, P.M., Bertagna, L., Clevenger, C., Donahue, A., Foucar, J., … Wu, D. (2023). The simple cloud-resolving E3SM atmosphere model running on the Frontier exascale system. In SC’23: Proceedings of the international conference for high performance computing, networking, storage and analysis. Denver, CO: Association for Computing Machinery.

[9] Wang, X., Yang, J., Adie, J., See, S., Furtado, K., Chen, C., Arcomano, T., … Mengaldo, G. (2025). CondensNet: Enabling stable long-term climate simulations via hybrid deep learning models with adaptive physical constraints. Preprint, arXiv:2502.13185.

[10] Wedi, N., Sandu, I., Bauer, P., Acosta, M., Andersen, R.C., Andrae, U., … Pappenberger, F. (2025). Implementing digital twin technology of the Earth system in Destination Earth. J. Euro. Meteorol. Soc., 3, 100015.

About the Author

Gianmarco Mengaldo

Assistant professor, National University of Singapore

Gianmarco Mengaldo is an assistant professor in the Department of Mechanical Engineering and the Department of Mathematics (by courtesy) at the National University of Singapore, and an Honorary Research Fellow at Imperial College London.
