Estimating Giant Kelp Populations with Deep Learning

Giant kelp (Macrocystis pyrifera), which can grow as much as two feet per day, is a tantalizing potential source of biomass for cosmetics, fuel, and food (see Figure 1). Our colleagues at the University of Wisconsin-Milwaukee are working to create a “seed stock” of kelp strains to support emerging commercial algaculture operations. In the laboratory, researchers preserve Macrocystis in its microscopic gametophyte stage (gametophytes are roughly analogous to the seeds of terrestrial plants) because this form is easier to store long-term and ship to commercial growers (see Figure 2).

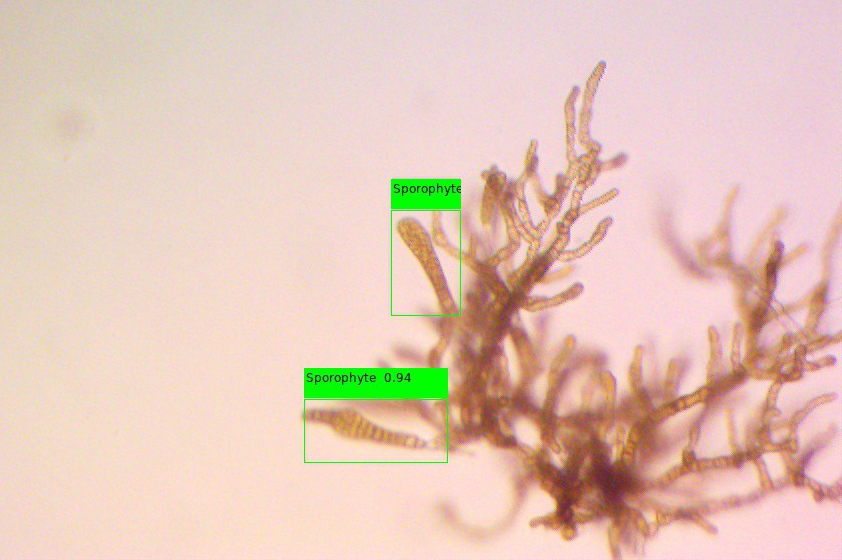

The core of our system is a variation of the YOLOv4 object detection neural network [2], which we trained to classify gametophytes by sex and identify reproductive features in images from microscope cameras. An object detection network typically accepts an image as input and outputs a variable-length set of detections, each of which consists of a bounding box and a class label.
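To illustrate what "a variable-length set of detections" looks like, here is a minimal sketch of a detection record and a common post-processing step: confidence thresholding followed by greedy per-class non-maximum suppression. The class names, the 0.5 score threshold, and the 0.45 overlap threshold are hypothetical values chosen for illustration, not the settings of our models.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class Detection:
    """A single detection: an axis-aligned bounding box plus a class label."""
    x1: float      # left edge, in pixels
    y1: float      # top edge
    x2: float      # right edge
    y2: float      # bottom edge
    label: str     # class name, e.g. "female" (hypothetical label set)
    score: float   # detector confidence in [0, 1]


def iou(a: Detection, b: Detection) -> float:
    """Intersection-over-union of two boxes."""
    w = max(0.0, min(a.x2, b.x2) - max(a.x1, b.x1))
    h = max(0.0, min(a.y2, b.y2) - max(a.y1, b.y1))
    inter = w * h
    union = (a.x2 - a.x1) * (a.y2 - a.y1) + (b.x2 - b.x1) * (b.y2 - b.y1) - inter
    return inter / union if union > 0 else 0.0


def postprocess(candidates: Sequence[Detection],
                score_min: float = 0.5,
                iou_max: float = 0.45) -> List[Detection]:
    """Confidence thresholding followed by greedy per-class non-maximum suppression."""
    kept: List[Detection] = []
    # Visit candidates from most to least confident.
    for d in sorted(candidates, key=lambda c: c.score, reverse=True):
        if d.score < score_min:
            break  # every remaining candidate is even less confident
        # Drop d if it heavily overlaps an already-kept box of the same class.
        if all(k.label != d.label or iou(k, d) <= iou_max for k in kept):
            kept.append(d)
    return kept


candidates = [
    Detection(10, 10, 50, 50, "female", 0.9),
    Detection(12, 12, 52, 52, "female", 0.8),   # near-duplicate of the first box
    Detection(100, 100, 140, 140, "egg", 0.7),
    Detection(0, 0, 5, 5, "egg", 0.2),          # too low-confidence to keep
]
print([(d.label, d.score) for d in postprocess(candidates)])
# [('female', 0.9), ('egg', 0.7)]
```

Four candidate boxes shrink to two detections here; a different image would yield a different number, which is why the detector's output length varies.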

The YOLO network is a one-stage object detection network: it divides an image into a grid and predicts bounding box coordinates and a class label for each grid cell. This approach is generally more computationally efficient than the two-stage architecture of detectors such as Region-based Convolutional Neural Networks (R-CNNs) and their variants; our models perform inference in roughly one second on a modern central processing unit and can process upwards of 30 images per second on a midrange graphics card. We can also scale down the YOLO architecture to run efficiently on constrained hardware with minimal performance loss.
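To make the per-cell prediction concrete, the sketch below decodes the raw outputs of grid cells into boxes using the YOLOv3-style parameterization: a sigmoid-bounded centre offset inside the responsible cell and an exponential rescaling of a prior ("anchor") box. Scaled-YOLOv4 modifies these formulas, so treat the exact equations, the single anchor per cell, the stride, and the anchor size as illustrative assumptions rather than the configuration of our models.

```python
import math
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def decode_cell(raw: Sequence[float], col: int, row: int, stride: float,
                anchor_w: float, anchor_h: float,
                class_names: Sequence[str]) -> Tuple[Box, str, float]:
    """Turn one grid cell's raw outputs into (box, label, score).

    raw = (tx, ty, tw, th, objectness_logit, class_logit_1, ..., class_logit_k).
    """
    tx, ty, tw, th, t_obj, *class_logits = raw
    # The box centre is an offset in (0, 1) inside the cell, scaled to pixels.
    cx = (col + sigmoid(tx)) * stride
    cy = (row + sigmoid(ty)) * stride
    # Width and height rescale the anchor box size.
    w = anchor_w * math.exp(tw)
    h = anchor_h * math.exp(th)
    # Independent per-class probabilities; keep the most likely class.
    probs = [sigmoid(z) for z in class_logits]
    best = max(range(len(probs)), key=probs.__getitem__)
    score = sigmoid(t_obj) * probs[best]
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2), class_names[best], score


def decode_grid(outputs: Sequence[Sequence[Sequence[float]]], stride: float,
                anchor_w: float, anchor_h: float,
                class_names: Sequence[str]) -> List[Tuple[Box, str, float]]:
    """outputs[row][col] is the raw vector for one grid cell."""
    return [decode_cell(raw, col, row, stride, anchor_w, anchor_h, class_names)
            for row, cells in enumerate(outputs)
            for col, raw in enumerate(cells)]


# All-zero logits for the cell in column 2, row 1 of a grid with a 32-pixel stride.
print(decode_cell([0.0] * 7, col=2, row=1, stride=32,
                  anchor_w=40, anchor_h=20, class_names=["female", "male"]))
# ((60.0, 38.0, 100.0, 58.0), 'female', 0.25)
```

Because every cell is decoded in the same single pass, the cost is one forward evaluation of the network per image, which is the source of the one-stage design's efficiency.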

Starting from pretrained convolutional layers, we fine-tuned YOLO networks on a small dataset of approximately 1,300 annotated kelp images. Although this is not quite enough training data for truly robust performance, in our validation tests the detector identified female gametophytes with about 87 percent sensitivity and a 10 percent false discovery rate, and fertilized eggs with about 83 percent sensitivity and a 30 percent false discovery rate (see Figure 3). While this output is less precise than that of a trained human, we hope that it will prove more useful because the system’s throughput is limited only by available hardware. In contrast, time constraints allow human laboratory workers to count only around 30 structures per culture. A demonstration of the detector is available on the web.
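The two validation metrics have simple definitions: sensitivity (recall) is TP / (TP + FN), the share of annotated objects the detector finds, and the false discovery rate is FP / (TP + FP), the share of reported detections that are wrong (one minus precision). The counts below are hypothetical, chosen only to show the arithmetic; deciding which detections count as true positives (usually by requiring sufficient overlap with an annotation) is omitted.

```python
def sensitivity(tp: int, fn: int) -> float:
    """Share of annotated objects that the detector found: TP / (TP + FN)."""
    return tp / (tp + fn)


def false_discovery_rate(tp: int, fp: int) -> float:
    """Share of reported detections that are wrong: FP / (TP + FP) = 1 - precision."""
    return fp / (tp + fp)


# Hypothetical validation counts for one class (not our actual data):
# 100 annotated objects, 87 of them found, plus 10 spurious detections.
tp, fn, fp = 87, 13, 10
print(f"sensitivity = {sensitivity(tp, fn):.2f}")                    # sensitivity = 0.87
print(f"false discovery rate = {false_discovery_rate(tp, fp):.3f}")  # false discovery rate = 0.103
```

The two numbers answer different questions: sensitivity measures how many structures are missed, while the false discovery rate measures how many reported structures are spurious, so a class such as fertilized eggs can score reasonably on the first and poorly on the second.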

Counting structures of interest in a collection of images is straightforward: pass each image through the detector and aggregate the results. Identifying unique objects in a video, however, requires solving the tracking-by-detection problem, an unsupervised learning problem that groups detections from different video frames that belong to the same object. Doing so can be tricky; in our videos, objects sometimes disappear and reappear when the microscope’s focus changes, and the detector sometimes finds an object in some frames but misses it in others.
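The still-image case can be sketched as a tally of labels over detector outputs. The detector signature and the canned results below are hypothetical stand-ins for the trained network. Applied naively to video frames, the same function counts one gametophyte once per frame in which it appears, which is why video requires tracking.

```python
from collections import Counter
from typing import Callable, Iterable, List, Tuple

Box = Tuple[float, float, float, float]
# Hypothetical detector signature: image -> list of (box, label) pairs.
Detector = Callable[[object], List[Tuple[Box, str]]]


def count_structures(images: Iterable[object], detect: Detector) -> Counter:
    """Run the detector on every image and tally detections by class label."""
    totals: Counter = Counter()
    for image in images:
        totals.update(label for _box, label in detect(image))
    return totals


# Stand-in detector that looks up canned results instead of running a network.
canned = {
    "img_a": [((0, 0, 10, 10), "female"), ((20, 20, 30, 30), "egg")],
    "img_b": [((5, 5, 15, 15), "female")],
}
print(count_structures(["img_a", "img_b"], lambda img: canned[img]))
# Counter({'female': 2, 'egg': 1})

# Three video frames of the same field of view: the single female is counted 3 times.
frames = ["img_b", "img_b", "img_b"]
print(count_structures(frames, lambda img: canned[img])["female"])  # 3
```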

A typical tracking-by-detection scheme consists of two steps: (i) using a similarity heuristic (e.g., state or visual appearance) to construct a weighted graph from the detections that the object detection network returns, and (ii) applying a clustering scheme to group the graph nodes into tracks. In practice, a tracking scheme should ideally run in real time. We draw inspiration from SORT (simple online and realtime tracking), an open-source, straightforward, and computationally efficient algorithm [1]. SORT employs constant-velocity Kalman filters to model the trajectories of tracked objects and uses the Hungarian algorithm, with bounding box intersection-over-union (IoU) scores as weights, to assign new detections to existing tracks in an online fashion.
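The assignment step of a SORT-style tracker can be sketched as follows. Track boxes and new detection boxes form a bipartite graph whose edge weights are IoU scores; we choose the one-to-one assignment with the largest total IoU, then discard pairs whose overlap falls below a minimum. In SORT the track boxes are Kalman-filter predictions; here they are simply taken as given. For brevity, this sketch finds the optimal assignment by brute force rather than with the Hungarian algorithm, which solves the same problem in polynomial time (SciPy's `scipy.optimize.linear_sum_assignment` is one implementation). The 0.3 threshold is an illustrative choice.

```python
from itertools import permutations
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = w * h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def assign(tracks: Sequence[Box], detections: Sequence[Box], iou_min: float = 0.3):
    """Match tracks to detections by maximizing total IoU.

    Returns (matches, unmatched_tracks, unmatched_detections), where matches is a
    list of (track_index, detection_index) pairs.
    """
    # Edge weights of the bipartite track-detection graph.
    weights = [[iou(t, d) for d in detections] for t in tracks]
    # None means "this track receives no detection in this frame".
    options = list(range(len(detections))) + [None] * len(tracks)
    best_total, best = -1.0, ()
    # Brute force over every one-to-one assignment (viable only for tiny inputs).
    for perm in permutations(options, len(tracks)):
        total = sum(weights[t][d] for t, d in enumerate(perm) if d is not None)
        if total > best_total:
            best_total, best = total, perm
    # As in SORT, reject assignments whose overlap is too small.
    matches = [(t, d) for t, d in enumerate(best)
               if d is not None and weights[t][d] >= iou_min]
    used_t = {t for t, _ in matches}
    used_d = {d for _, d in matches}
    unmatched_tracks = [t for t in range(len(tracks)) if t not in used_t]
    unmatched_dets = [d for d in range(len(detections)) if d not in used_d]
    return matches, unmatched_tracks, unmatched_dets


tracks = [(0, 0, 10, 10), (20, 20, 30, 30)]
detections = [(21, 21, 31, 31), (1, 1, 11, 11), (100, 100, 110, 110)]
print(assign(tracks, detections))  # ([(0, 1), (1, 0)], [], [2])
```

In a full tracker, unmatched detections would start new tracks, and tracks that remain unmatched for several frames would eventually be dropped.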

We use SORT as a starting point but modify it to take advantage of the simple nature of our tracking problem. In our videos, the kelp gametophytes remain stationary in the culture; the only motion is due to the petri dish sliding beneath (or above, in the case of an inverted microscope) the camera lens. We therefore treat the objects themselves as stationary and attempt to recover the dish’s motion with the Lucas-Kanade optical flow algorithm, which tracks distinctive image features such as corners between video frames. This approach helps us track objects through changes in microscope focus and missed detections, though it struggles with sudden camera movements and motion blur (which challenge any tracking scheme). We are continuing to work with our partners at the University of Wisconsin-Milwaukee to increase the reliability of our detection and tracking systems.
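Because the gametophytes do not move, detections can be associated in a fixed "dish" coordinate frame. The sketch below assumes feature-point correspondences between consecutive frames are already available (for example, from OpenCV's pyramidal Lucas-Kanade implementation, `cv2.calcOpticalFlowPyrLK`), models the dish motion as a pure translation estimated by the median displacement, and matches each detection to the nearest remembered object within a radius. The translation model, the median, the greedy nearest-neighbour matching, and the radius are illustrative assumptions, not necessarily the choices in our system. Since objects persist in dish coordinates, an object that goes undetected for a few frames (e.g., during a focus change) regains its original identity when it is detected again.

```python
from statistics import median
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def estimate_shift(prev_pts: Sequence[Point], next_pts: Sequence[Point]) -> Point:
    """Estimate the dish's image-space translation from tracked feature points.

    The median of per-point displacements tolerates a few badly tracked points.
    """
    dx = median(n[0] - p[0] for p, n in zip(prev_pts, next_pts))
    dy = median(n[1] - p[1] for p, n in zip(prev_pts, next_pts))
    return dx, dy


class DishFrameTracker:
    """Assume objects are fixed on the dish; only the field of view moves."""

    def __init__(self, radius: float = 10.0) -> None:
        self.offset: Point = (0.0, 0.0)   # cumulative shift of the dish in the image
        self.objects: List[Point] = []    # one position per object, in dish coordinates
        self.radius = radius              # maximum match distance, in pixels

    def update(self, shift: Point, centres: Sequence[Point]) -> List[int]:
        """Apply this frame's dish shift, then give each detection centre an object id."""
        self.offset = (self.offset[0] + shift[0], self.offset[1] + shift[1])
        ids: List[int] = []
        for cx, cy in centres:
            # Undo the accumulated dish motion to get a fixed position on the dish.
            px, py = cx - self.offset[0], cy - self.offset[1]
            best: Optional[int] = None
            best_d2 = self.radius ** 2
            for i, (qx, qy) in enumerate(self.objects):
                d2 = (px - qx) ** 2 + (py - qy) ** 2
                if d2 <= best_d2:
                    best, best_d2 = i, d2
            if best is None:
                # No known object nearby: register a new one.
                self.objects.append((px, py))
                best = len(self.objects) - 1
            ids.append(best)
        return ids


tracker = DishFrameTracker(radius=5.0)
print(tracker.update((0, 0), [(50, 50), (80, 20)]))        # [0, 1]
shift = estimate_shift([(10, 10), (30, 40), (60, 5)], [(13, 8), (33, 38), (63, 3)])
print(tracker.update(shift, [(53, 48)]))                   # [0]  (object 1 missed)
print(tracker.update(shift, [(86, 16), (56, 46)]))         # [1, 0]  (object 1 is back)
```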

Liam Jemison presented this research during a contributed presentation at the 2022 SIAM Conference on Mathematics of Planet Earth (MPE22), which took place concurrently with the 2022 SIAM Annual Meeting in Pittsburgh, Pa., last year. He received funding to attend MPE22 through a SIAM Student Travel Award. To learn more about Student Travel Awards and submit an application, visit the online page.

References

[1] Bewley, A., Ge, Z., Ott, L., Ramos, F., & Upcroft, B. (2016). Simple online and realtime tracking. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP) (pp. 3464-3468). Phoenix, AZ: IEEE Signal Processing Society.

[2] Wang, C.-Y., Bochkovskiy, A., & Liao, H.-Y.M. (2021). Scaled-YOLOv4: Scaling cross stage partial network. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (pp. 13029-13038). IEEE Computer Society.

About the Authors

Liam Jemison

Ph.D. Student, University of Wisconsin-Milwaukee

Liam Jemison is a Ph.D. student in mathematics at the University of Wisconsin-Milwaukee. He is interested in computer vision and natural language processing.

Istvan Lauko

Associate Professor, University of Wisconsin-Milwaukee

Istvan Lauko is an associate professor in the Department of Mathematical Sciences at the University of Wisconsin-Milwaukee. His recent research interests include mathematical modeling, differential equations, and the application of neural networks for image and natural language processing.

Gabriel Montecinos Arismendi

Seaweed Cultivation Specialist

Gabriel Montecinos Arismendi is a specialist in seaweed cultivation and a technology enthusiast. He holds a Master of Science in oceanography and marine environments.

Adam Honts

Imaging Scientist

Adam Honts is an imaging scientist and software engineer.

Matthew Stahl

Graduate Student, University of Wisconsin-Milwaukee

Matthew Stahl is a graduate student in mathematics at the University of Wisconsin-Milwaukee.