Ethical Concerns of Code Generation Through Artificial Intelligence

Machine learning models that are trained on large corpuses of text, images, and source code are becoming increasingly common. Such models—which are either freely available or accessible for a fee—can then generate their own text, images, and source code. The unprecedented pace of development and adoption of these tools is quite different from the traditional mathematical software development life cycle. In addition, developers are creating large language models (LLMs) for text summarization as well as caption and prompt generation. LLMs are fine-tuned on source code, such as in OpenAI Codex, which yields models that can interactively generate code with minimal prompting. For example, a prompt like “sort an array” produces code one line at a time that a programmer can then either choose to accept or use to generate a match for an entire sort routine.

Examples of fine-tuned LLMs for code generation include GitHub Copilot and Amazon CodeWhisperer. These models bring exciting possibilities to programmer productivity, bug detection during software development, and the translation of legacy code to modern languages. While the authors of these tools have addressed some potential issues in the codes—including bias and security implications—we want to highlight another pitfall in these fine-tuned models. The machine learning community is generally cognizant of matters like bias, but discussions about copyright and license issues in fine-tuning language models are far less common. In fact, the initial documentation for GitHub Copilot stated that “the code belongs to you” (this wording was recently revised).

SIAM members have invested considerable effort in the design and development of mathematical software packages that collectively build a software ecosystem that enables complex modeling and simulation on high-performance computing hardware. Several such open-source software packages are available at various levels of abstraction, from low-level dense linear algebra operations (i.e., BLAS and LAPACK) to sparse linear algebra libraries (i.e., CSparse, SuiteSparse, and Kokkos Kernels) and large frameworks (i.e., Trilinos) and ecosystems (i.e., xSDK). All of these libraries have well-defined interfaces and work smoothly together. They are also generally available both within their organizational GitHub accounts and through multiple other accounts, as permitted in their licenses. Many developers who release their code as open source also make their software readily accessible for community use (sometimes for profit) with proper attribution and consent of the copyright holders.

Since these codes are public, a human can intentionally copy code while knowingly violating copyright and licenses. If such a person actively asks an artificial intelligence (AI) tool to do so with a prompt like “sparse matrix vector multiply from Kokkos Kernels,” we would prefer that the system block these types of explicit requests. However, a bigger problem is that the AI system can put innocent users unintentionally in violation of copyright and licenses. Here we demonstrate that GitHub Copilot emits CSparse code (copyrighted by Tim Davis of Texas A&M University) almost verbatim, even with a generic prompt.

GitHub Copilot Example

With a very simple sequence of prompts, GitHub Copilot emits 40 of the 64 functions from CSparse/CXSparse—a package that is protected by the GNU Lesser General Public License—which accounts for 27 percent of its 2,158 lines of code. The first prompt is “// sparse matrix transpose in CSC format”, followed by nine repetitions of enter, tab, …, enter, and then control+enter. This prompt has no connection with the CSparse package (CSC is a common acronym for a sparse matrix data structure).

Copilot’s emitted code did not initially reproduce the CSparse copyright and license. But the prompt “// print a sparse matrix” did eventually cause Copilot to emit a near-verbatim copy of the CSparse cs_print function that included an incorrect copyright statement (wrong date, wrong version, wrong institution, and no license). The query “// sparse symbolic QR factorization” emitted a sparse upper triangular solve function from CSparse (the wrong method) that was prefaced with comments that contained an incorrect copyright and license. Copilot does not preserve license and copyright comments; instead, it treats them as mere text. Moreover, users are not warned that the discharged copyright and license statements can be incorrect.

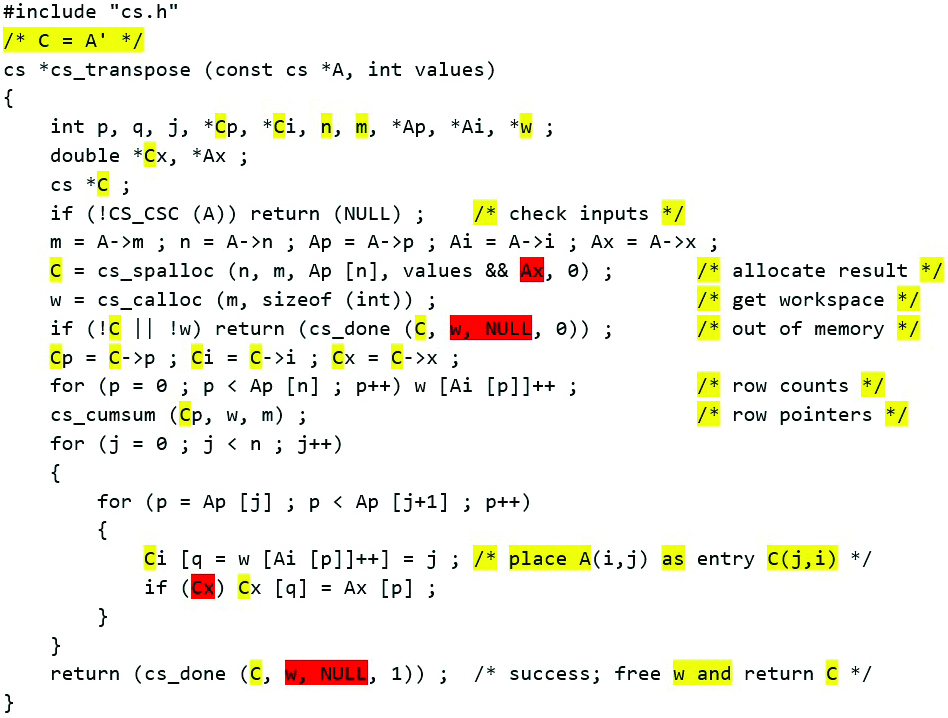

Copilot’s first emitted function was nearly identical to cs_transpose. Figure 1 shows the original code from CSparse v2.0.1 (©2006 Timothy Davis), which uses Gustavson’s algorithm [2]. The code also appears in its entirety with permission in Davis’ Direct Methods for Sparse Linear Systems, which was published by SIAM in 2006 [1].

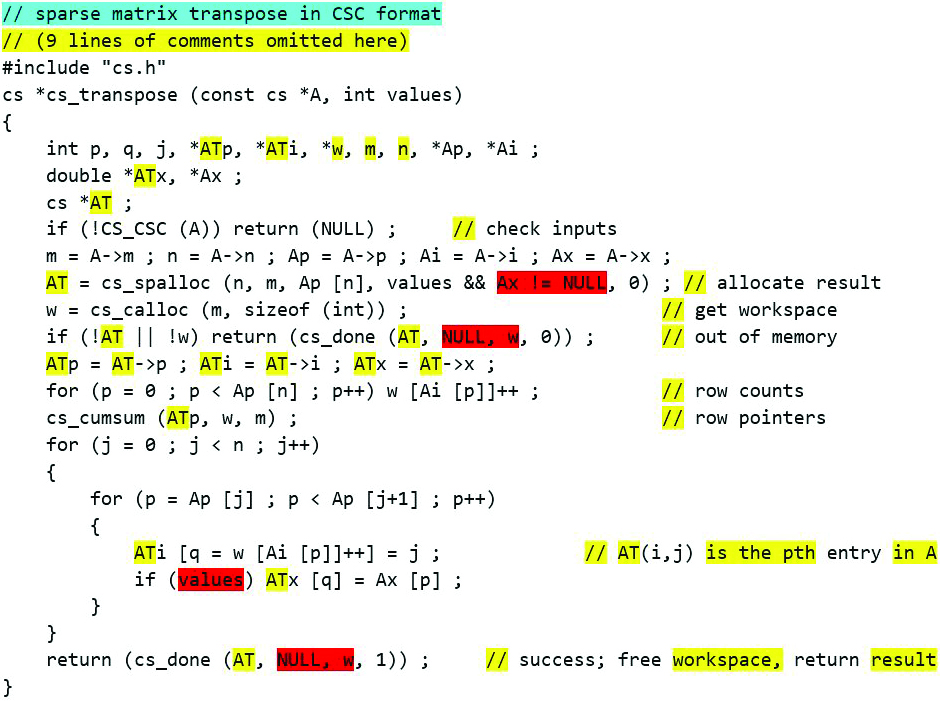

Figure 2 illustrates all of the changes in the code that Copilot produced. Yellow denotes the trivial changes: a few comments, the order of variable declarations (w, m, and n), and a single global replacement of C with AT. However, red indicates four changes in logic that affect the compiled code. The original code in Figure 1 is bug-free, but Copilot inserted a serious bug in Figure 2 that causes a segmentation fault (a security flaw). A naive Copilot user would likely miss this fatal flaw because the code’s execution would not always trigger it.

Copilot refers to many CSparse functions by their original names (the cs data structure as well as functions CS_CSC, cs_spalloc, cs_calloc, cs_cumsum, and cs_done). Entering a subsequent empty prompt (control+enter+tab) often emits these functions verbatim, though doing so sometimes requires a prompt with the name that Copilot has already provided.

Microsoft (the owner of GitHub) distributes CSparse/CXSparse through many channels, respecting the software license and preserving copyright statements in each case. By discarding copyright and license information for CSparse/CXSparse and injecting bugs into a near-verbatim copy, Copilot sows confusion into Microsoft’s own code base.

Davis is communicating with the GitHub team about these issues. While developers are currently addressing certain problems, the inclusion of copyrighted open-source software in training data and its reproduction without the correct license or copyright remains unremedied. GitHub has announced plans for a forthcoming mechanism (arriving in 2023) that will provide links to software packages with attribution and license information when Copilot emits code that is a near-match.

BigCode Project

Unlike GitHub Copilot, the BigCode project excludes copyleft code from its training data and offers an opt-out option, though developers can only remove their own repositories. CSparse and many other widely used packages are often copied verbatim into other GitHub repositories (almost always while preserving copyright and license information), but neither Copilot nor BigCode provide attribution, copyright, or licenses for code that they emit. However, BigCode is actively working to amend this matter.

OpenAI Codex

GitHub Copilot is based on OpenAI Codex. When given a similar prompt, Codex also produces cs_transpose essentially verbatim with no attribution, copyright, or license acknowledgment. In fact, the end user is unwittingly instructed to violate copyright; the OpenAI Sharing & Publication Policy states that “Creators who wish to publish their first-party written content ... created in part with the OpenAI API are permitted to do so,” under the condition that “the published content is attributed to your name or company.”

Furthermore, the OpenAI Content Policy does not list copyright or license violations as one of its hazards, though it did previously offer the following warning (which has since been removed): “Hallucinations: Our models provide plausible-looking but not necessarily accurate information. Users could be misled by the outputs if they aren’t calibrated in how much they should be trusted. For high-stakes domains such as medical and legal use cases, this could result in significant harm.” We feel that stripping a code of its attribution, copyright, and license and injecting security flaws can result in significant harm in any domain, not just medical and legal settings.

Possible Paths Forward

Despite the pitfalls, we do believe that it is possible to address the aforementioned issues and enable the future use of these tools within large, complex science and engineering codes. Most importantly, such fine-tuned models must allow for the correct attribution of the generated code and the proper copyright and license. We are excited by this potential development, as it would permit non-expert users to easily access some of our complex mathematical libraries. We would also like the programs to generate calls to libraries such as CSparse, rather than simply generating the code. Doing so would facilitate the use of these libraries as building blocks—as they were originally intended—instead of replicating the library code itself within multiple user codes. As such, the AI models must be able to distinguish versioning and interface changes. Finally, a true opt-out option that provides code owners with the choice to omit copies of their code in training would give owners more agency over their own work.

Disclaimer

Sandia National Laboratories is a multimission laboratory managed and operated by National Technology & Engineering Solutions of Sandia, LLC, a wholly-owned subsidiary of Honeywell International Inc., for the U.S. Department of Energy’s National Nuclear Security Administration under contract DE-NA0003525. This paper describes objective technical results and analysis. Any subjective views or opinions that might be expressed in the paper do not necessarily represent the views of the U.S. Department of Energy or the U.S. government.

References

[1] Davis, T.A. (2006). Direct methods for sparse linear systems. Philadelphia, PA: Society for Industrial and Applied Mathematics.

[2] Gustavson, F.G. (1978). Two fast algorithms for sparse matrices: Multiplication and permuted transposition. ACM Trans. Math. Softw., 4(3), 250-269.

About the Authors

Tim Davis

Faculty member, Texas A&M University

Tim Davis is a faculty member in the Department of Computer Science and Engineering at Texas A&M University. He is a Fellow of SIAM, the Association for Computing Machinery, and the Institute of Electrical and Electronics Engineers in recognition of his widely-used sparse matrix algorithms and software.

Siva Rajamanickam

Researcher, Sandia National Laboratories

Siva Rajamanickam is a researcher in the Center for Computing Research at Sandia National Laboratories. He leads the development of scientific computing frameworks and portable linear algebra software.

Stay Up-to-Date with Email Alerts

Sign up for our monthly newsletter and emails about other topics of your choosing.