Falling Into a Trap

Some years ago, I asked my class to prove that if the solution to the baby problem

\[\dot x = a(t) x , \ \ x(0)=1 \tag1\]

is exponentially small, i.e.,

\[x(t)\leq e^{-t} \ \ \hbox{for all} \ \ t>0, \tag2\]

then with a tiny addition to the right side:

\[\dot y =a(t) y+ e^{-t ^2 }, \ \ y(0)=1, \tag3\]

the solution will remain small: \(y(t) \rightarrow 0\) as \(t \rightarrow \infty\). Surely \(e^{-t ^2}\) cannot make much of a difference.

When reading the first submitted assignment a week later, I found a mistake. The next homework was wrong as well. Before reading the third submission, I started to think (something, I soon realized, I should have done more of before assigning the problem) and saw that not only may \(y \rightarrow 0\) fail but, even more surprisingly, \(y(t)\) may be unbounded.

How Can a Tiny Addition Have Such a Big Effect?

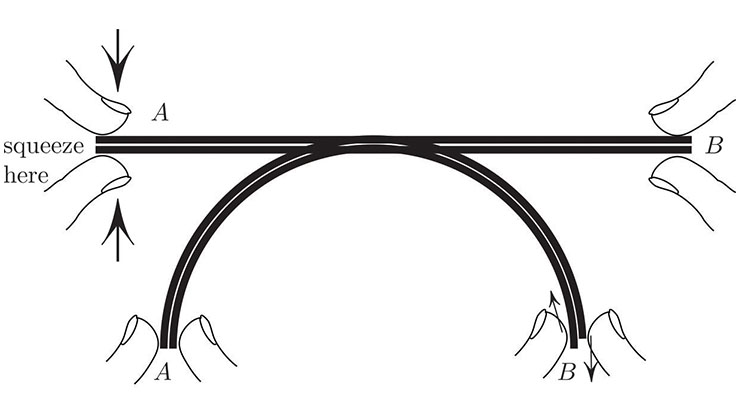

![<strong>Figure 1.</strong> The solution to \((1)\) (dotted line) decays over \([0,1]\) to a very small value, stays at that value over \([1,2]\), and “recovers” over \([2,3]\). An arbitrarily small addition \(b(t)\) during \([1,2]\) increases the solution (solid line) by a large factor. This increase propagates to \(t=3\), so \(z(3)\) can be made arbitrarily large. Figure courtesy of Mark Levi.](/media/nmtlfhqf/figure1.jpg)

Figure 1 illustrates the mechanism of possible unboundedness; let’s first concentrate on the interval \([0,3]\). To simplify the problem, replace \(e^{-t ^2}\) in \((3)\) with a function \(b(t) \leq e^{-t ^2}\), chosen to be constant and positive on \([1,2]\) and vanishing elsewhere. The solution of

\[\dot z = a(t) z+ b(t) , \ \ z(0)=1 \tag4\]

satisfies \(z(t) \leq y(t)\): the difference \(w = y - z\) solves \(\dot w = a(t) w + e^{-t^2} - b(t)\) with \(w(0) = 0\) and a nonnegative forcing term, so \(w(t) \geq 0\) by variation of constants. It is therefore enough to produce an example of \(a(t)\) for which \(z\) is unbounded. To that end, let \(a(t)\) be piecewise constant with values \(-A\), \(0\), and \(A-3\) on \([0,1]\), \([1,2]\), and \([2,3]\), respectively (see Figure 1, which shows the graphs of \(x(t)\) and \(z(t)\)). This choice of \(a\) is dictated by the wish to make \(x(t)\) approach \(0\) very closely before it returns to \(e^{-t}\). Incidentally, the "\(-3\)" in \(A-3\) guarantees that \(x(t) \leq e^{-t}\).
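For readers who want to check the claims about \(x(t)\), here is the solution of \((1)\) for this piecewise constant \(a(t)\). This is a short sketch that assumes \(A \geq 2\), which any "large enough" \(A\) satisfies:

\[x(t)=\begin{cases} e^{-At}, & 0\le t\le 1,\\ e^{-A}, & 1\le t\le 2,\\ e^{-A+(A-3)(t-2)}, & 2\le t\le 3. \end{cases}\]

On \([0,1]\) we have \(-At \leq -t\), and on \([1,2]\) we have \(-A \leq -2 \leq -t\). On \([2,3]\) the exponent is linear in \(t\), is at most \(-2\) at \(t=2\), and equals \(-3\) at \(t=3\), so it stays at or below \(-t\) throughout. Hence \(x(t) \leq e^{-t}\) on \([0,3]\), with \(x(3) = e^{-3}\) exactly; this is where the "\(-3\)" in \(A-3\) enters.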

With a large enough \(A\), \(z(3)\) can be made arbitrarily large; this can be seen via an explicit calculation that I omit, or from the discussion in the next paragraph. By repeating this construction on each interval \([3n,3n+3]\) (with \(A\) growing with \(n\), since \(b\) must stay below the ever smaller \(e^{-t^2}\)), we get \(z(3n) \rightarrow \infty\) as \(n \rightarrow \infty\). Since \(z(t) \leq y(t)\), it follows that \(y(3n) \rightarrow \infty\). Incidentally, \(y(3n+1) \rightarrow 0\), so \(y\) oscillates between \(0\) and \(\infty\) as \(t \rightarrow \infty\).
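The omitted calculation is short. Here is one way to carry it out, writing \(b\) for the constant value of \(b(t)\) on \([1,2]\). Since \(b(t)=0\) on \([0,1]\) and on \([2,3]\),

\[z(1)=e^{-A},\qquad z(2)=e^{-A}+b,\qquad z(3)=\left(e^{-A}+b\right)e^{A-3}=e^{-3}+b\,e^{A-3}.\]

The first term is just \(x(3)\). The second is the tiny addition \(b\) amplified by \(e^{A-3}\), which exceeds any bound as \(A\rightarrow\infty\) for fixed \(b>0\) (for instance \(b=e^{-4}\), the minimum of \(e^{-t^2}\) on \([1,2]\)). For the extension, let \(A_n\) and \(b_n\) denote the values used on \([3n,3n+3]\). The same computation gives

\[z(3n+3)=e^{-3}z(3n)+b_n\,e^{A_n-3},\qquad b_n\leq e^{-(3n+2)^2},\]

where the bound on \(b_n\) keeps \(b(t)\leq e^{-t^2}\) on \([3n+1,3n+2]\). One possible choice, offered only as an illustration, is \(b_n=e^{-(3n+2)^2}\) and \(A_n=3+(3n+2)^2+n\). Then \(b_n e^{A_n-3}=e^{n}\), and since \(z>0\), we get \(z(3n+3)\geq e^{n}\rightarrow\infty\).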

Here is a heuristic argument for the unboundedness of \(z(t)\). Think of a stock market in which the value of one dollar invested at \(t=0\) oscillates between nearly \(0\) and \(e^{-t}\). Even a tiny investment made when the stock is near \(0\) can increase our holdings by an arbitrarily large factor if the dip is sufficiently deep, and this gain carries through to the time the market “recovers” (see Figure 1). Cyclical repetition, with ever deeper dips, leads to ever higher peaks alternating with ever deeper declines.
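The mechanism can also be checked numerically. The sketch below is a minimal illustration under stated assumptions, not the author's computation. It integrates \((4)\) with a hand-written classical Runge-Kutta (RK4) step on each interval where \(a\) and \(b\) are constant. It does this first on \([0,3]\) for several values of \(A\) with \(b=e^{-4}\), then over five blocks \([3n,3n+3]\) using the hypothetical schedule \(b_n=e^{-(3n+2)^2}\), \(A_n=3+(3n+2)^2+n\). The function names and this schedule are illustrative choices.

```python
import math


def rk4_constant(a, b, z0, t0, t1, steps):
    """Integrate dz/dt = a*z + b from t0 to t1, with a and b constant, by classical RK4."""
    h = (t1 - t0) / steps

    def f(w):
        return a * w + b

    z = z0
    for _ in range(steps):
        k1 = f(z)
        k2 = f(z + 0.5 * h * k1)
        k3 = f(z + 0.5 * h * k2)
        k4 = f(z + h * k3)
        z += h * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
    return z


def propagate_block(z0, t0, A, b):
    """Advance z across one block [t0, t0 + 3].

    On the three unit subintervals a(t) = -A, 0, A - 3, and the forcing b
    acts only on the middle one (b = 0 gives the unforced equation (1)).
    The step count grows with A so that h * |a| stays small.
    """
    steps = max(2000, int(50 * A))
    z = rk4_constant(-A, 0.0, z0, t0, t0 + 1.0, steps)
    z = rk4_constant(0.0, b, z, t0 + 1.0, t0 + 2.0, steps)
    z = rk4_constant(A - 3.0, 0.0, z, t0 + 2.0, t0 + 3.0, steps)
    return z


def single_block_demo(A_values=(5.0, 10.0, 20.0)):
    """Compare x(3), z(3), and the closed form e^{-3} + b e^{A-3} on [0, 3]."""
    b = math.exp(-4.0)  # largest constant with b <= exp(-t**2) on [1, 2]
    rows = []
    for A in A_values:
        x3 = propagate_block(1.0, 0.0, A, 0.0)  # unforced, equation (1)
        z3 = propagate_block(1.0, 0.0, A, b)    # forced, equation (4)
        rows.append((A, x3, z3, math.exp(-3.0) + b * math.exp(A - 3.0)))
    return rows


def multi_block_demo(num_blocks=5):
    """Return [z(0), z(3), ..., z(3 * num_blocks)] under a hypothetical schedule.

    Assumed (illustrative) schedule: b_n = exp(-(3n+2)**2), so that
    b <= exp(-t**2) on [3n+1, 3n+2], and A_n = 3 + (3n+2)**2 + n.
    """
    z = 1.0
    values = [z]
    for n in range(num_blocks):
        b_n = math.exp(-float((3 * n + 2) ** 2))
        A_n = 3.0 + (3 * n + 2) ** 2 + n
        z = propagate_block(z, 3.0 * n, A_n, b_n)
        values.append(z)
    return values


if __name__ == "__main__":
    for A, x3, z3, closed in single_block_demo():
        print(f"A={A:5.1f}  x(3)={x3:.4e}  z(3)={z3:.4e}  closed form={closed:.4e}")
    for n, z in enumerate(multi_block_demo()):
        print(f"z({3 * n}) = {z:.4e}")
```

With \(b\) fixed, the unforced value \(x(3)\) stays (numerically) at \(e^{-3}\) for every \(A\), while \(z(3)\) follows \(e^{-3}+b\,e^{A-3}\). Under the assumed schedule, \(z(3n)\) keeps increasing from block to block. Integrating each constant-coefficient piece separately avoids stepping across the jumps in \(a(t)\) and \(b(t)\). The demo stops at a few blocks because the factors \(e^{\pm(3n+2)^2}\) soon leave the range of double-precision floats.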

About the Author

Mark Levi

Professor, Pennsylvania State University

Mark Levi (levi@math.psu.edu) is a professor of mathematics at the Pennsylvania State University.