Improving Medical Image Prediction and Segmentation with Neural Differential Equations

Radiation therapy, also known as radiotherapy, is a type of cancer treatment that uses high doses of radiation to kill cancer cells and shrink tumors. Medical practitioners typically visualize tumors via positron emission tomography (PET) scans — imaging tests that utilize a small amount of radioactive tracer to examine the body’s organs and tissues; regions with abnormally high amounts of chemical activity can indicate cancer or other diseases. “In this whole business of radiotherapy, we want to use radiation to kill tumor cells, but we don’t want to kill normal cells along the way,” Hangjie Ji of North Carolina State University said. As such, treatment plans—including the prediction and segmentation of images—must be exceptionally precise.

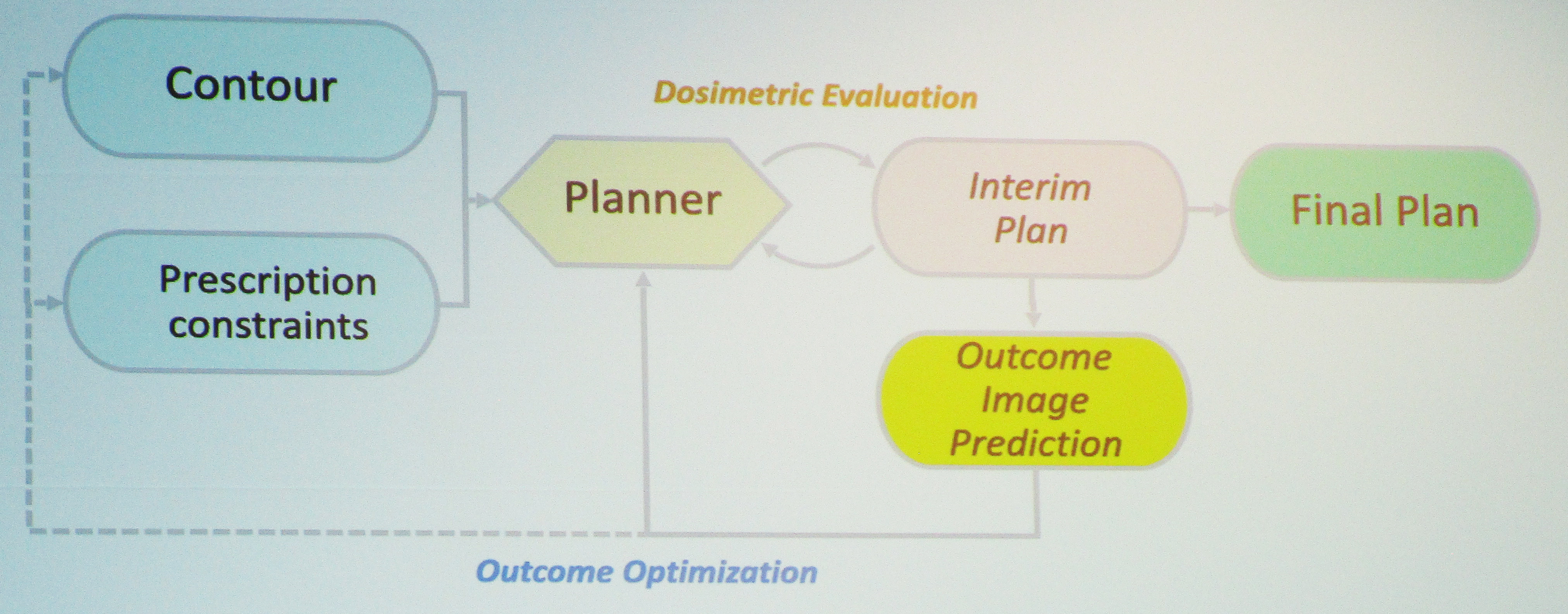

During the 2024 SIAM Conference on Mathematics of Data Science, which is currently taking place in Atlanta, Ga., Ji presented joint work with medical practitioners at Duke University that incorporates ordinary differential equations (ODEs) and partial differential equations (PDEs) into image prediction and segmentation techniques. She began by outlining the typical radiotherapy treatment plan (see Figure 1). Practitioners first employ contouring to identify the tumors and consider any prescription constraints, then generate a tentative treatment plan. After several predictive iterations with this interim plan (during which specialists may adjust plan parameters, contour and prescription constraints, or dose level), they identify a final plan that will deliver the most promising results for the patient.

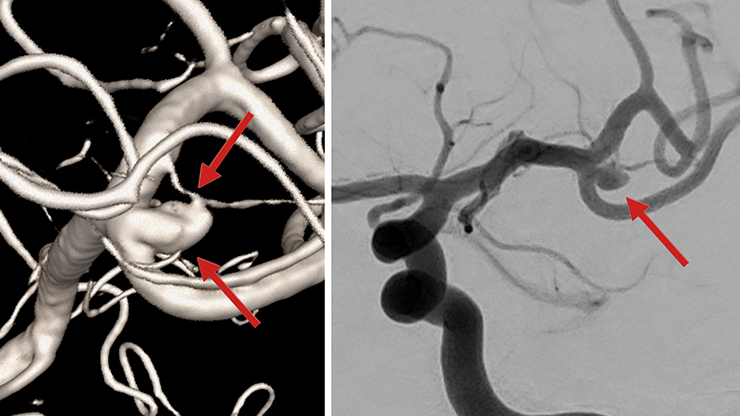

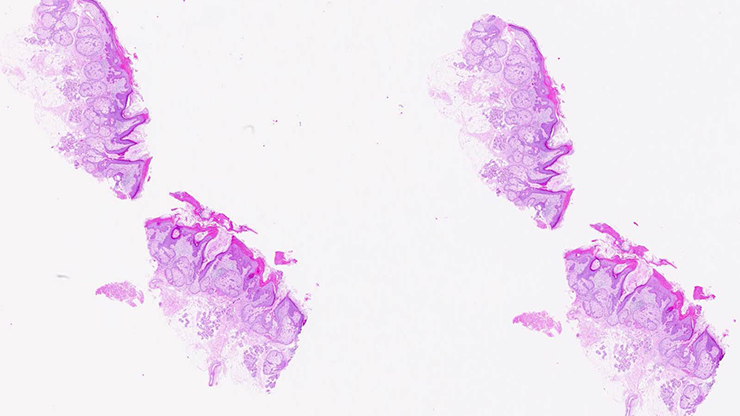

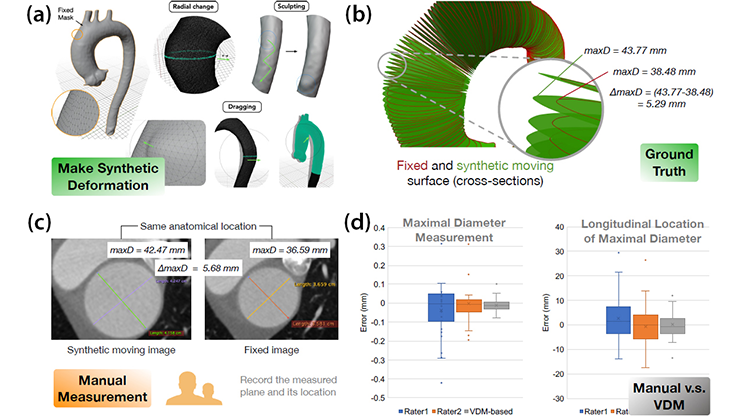

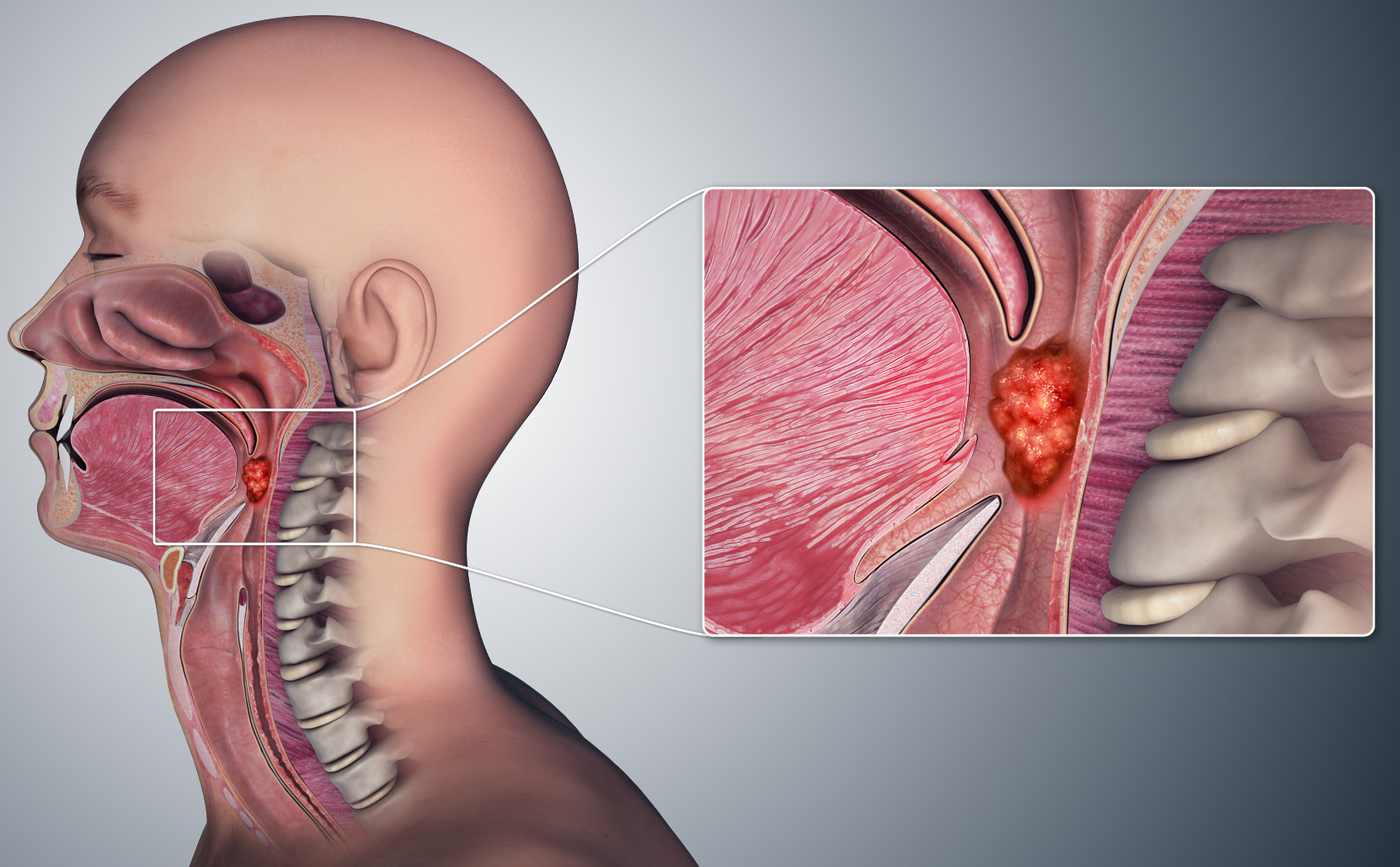

Ji concentrated on two specific parts of the radiotherapy treatment plan: contouring and outcome image prediction. She focused the first part of her presentation on outcome image prediction and explained that deep learning has become a common tool for medical image synthesis/prediction in recent years. “Many biologically motivated models have been proposed to model the growth or diffusion of tumor cells in human bodies,” Ji said, adding that such efforts are meant to improve image prediction explainability. She thus wanted to develop a biologically guided deep learning method for post-radiotherapy image outcome prediction that uses pre-radiotherapy images and radiotherapy plan information in the context of oropharyngeal cancer (see Figure 2). “We’d like to figure out what’s going to happen to the patient after the course of treatment,” she said.

To do so, Ji introduced a simple reaction-diffusion mechanism that models tumor growth and therapeutic dynamics, with terms for both proliferation and the spread of malignancy. She then hypothesized that a radiotherapy-induced change in standardized uptake value can be modeled with a modified reaction-diffusion PDE that accounts for spatial dose and response to radiotherapy. To verify her hypothesis and learn the response operator and model coefficients, Ji designed a hybrid neural network model and tested it with patient data from a study of 64 oropharyngeal cancer patients who had received intensity-modulated radiation therapy as part of a Duke clinical trial. Two-dimensional high-uptake PET slices served as independent samples of real data.
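As a rough illustration of the kind of mechanism described above, the following sketch steps a generic reaction-diffusion equation with an added dose-dependent loss term on a two-dimensional grid. It is not the model from the talk: the logistic proliferation term, the linear dose response, the coefficient values, and all names (`D`, `rho`, `alpha`, `dose`, `simulate`) are assumptions chosen for illustration, and the toy "uptake" and "dose" fields are synthetic.

```python
import numpy as np

def laplacian(u, h=1.0):
    """Five-point Laplacian with zero-flux boundaries (edge padding)."""
    p = np.pad(u, 1, mode="edge")
    return (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4.0 * u) / h**2

def step(u, dose, D=0.1, rho=0.05, alpha=0.3, dt=0.1):
    """One explicit Euler step of
        u_t = D * lap(u) + rho * u * (1 - u) - alpha * dose * u
    (assumed form; coefficients are illustrative, not learned values)."""
    diffusion = D * laplacian(u)
    proliferation = rho * u * (1.0 - u)
    response = -alpha * dose * u
    u_next = u + dt * (diffusion + proliferation + response)
    # Returning the individual terms lets one inspect each contribution.
    return u_next, (diffusion, proliferation, response)

def simulate(u0, dose, n_steps=200, **coeffs):
    """Advance the field n_steps times and return the final state."""
    u = u0.copy()
    for _ in range(n_steps):
        u, _ = step(u, dose, **coeffs)
    return u

# Synthetic stand-ins for a normalized pre-treatment uptake map and dose map.
n = 32
yy, xx = np.mgrid[0:n, 0:n]
r2 = (xx - n / 2) ** 2 + (yy - n / 2) ** 2
u0 = 0.8 * np.exp(-r2 / 20.0)
dose = np.exp(-r2 / 60.0)

u_post = simulate(u0, dose)
print("peak uptake before:", u0.max(), "after:", u_post.max())
```

In a hybrid approach like the one described, a neural network would presumably stand in for the hand-written response term and the coefficients would be fit to patient data; here they are simply fixed by hand.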

“What our model can actually do is break down how different terms from the original embedded PDE model can represent the whole dynamics,” Ji said. This allowed her to visualize the way in which the diffusion, proliferation, and response terms contribute to tumor shrinkage throughout the treatment process. Ultimately, the learned model passed the gamma test with high scores, which confirmed its value to the medical imaging community.
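For readers unfamiliar with the gamma test, it compares two images by combining an intensity-difference tolerance with a distance-to-agreement tolerance; a pixel passes if some nearby pixel in the other image is close enough in both senses. The brute-force sketch below computes a global two-dimensional gamma passing rate. The tolerance values (3 percent, 3 pixels), the function name, and the toy images are assumptions for illustration and are not the criteria or data used in the study.

```python
import numpy as np

def gamma_pass_rate(reference, evaluated, diff_tol=0.03, dist_tol=3.0, spacing=1.0):
    """Fraction of reference pixels with gamma <= 1 (global normalization).

    Assumes 2D arrays of equal shape and a reference with positive maximum.
    """
    reference = np.asarray(reference, dtype=float)
    evaluated = np.asarray(evaluated, dtype=float)
    norm = diff_tol * reference.max()         # global intensity tolerance
    reach = int(np.ceil(dist_tol / spacing))  # search radius in pixels
    rows, cols = reference.shape
    gamma_sq = np.full(reference.shape, np.inf)
    for dy in range(-reach, reach + 1):
        for dx in range(-reach, reach + 1):
            dist_sq = (dy**2 + dx**2) * spacing**2
            if dist_sq > dist_tol**2:
                continue  # such offsets can never give gamma <= 1
            # Compare reference[y, x] with evaluated[y + dy, x + dx].
            r0, r1 = max(0, -dy), min(rows, rows - dy)
            c0, c1 = max(0, -dx), min(cols, cols - dx)
            ref = reference[r0:r1, c0:c1]
            ev = evaluated[r0 + dy:r1 + dy, c0 + dx:c1 + dx]
            g = dist_sq / dist_tol**2 + ((ev - ref) / norm) ** 2
            gamma_sq[r0:r1, c0:c1] = np.minimum(gamma_sq[r0:r1, c0:c1], g)
    return float(np.mean(gamma_sq <= 1.0))

# Toy comparison: a smooth blob against a copy shifted by one pixel.
ref = np.outer(np.hanning(16), np.hanning(16))
shifted = np.roll(ref, 1, axis=1)
rate = gamma_pass_rate(ref, shifted)
print("gamma passing rate:", rate)
```

Because offsets beyond the distance tolerance are skipped, the sketch yields a correct passing rate but not exact gamma values for failing pixels.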

Next, Ji returned to the concepts of contouring and image segmentation. Despite the existence of a variety of auto-segmentation tools (e.g., thresholding, clustering, classic machine learning, and neural networks), manual delineation reigns supreme. “In practice, the clinical gold standard that medical practitioners actually use is manual delineation,” Ji said. “They are still drawing where the tumors are.” This persistence is partly due to several issues with traditional deep neural network (DNN) models. “Although you can see nice results from image segmentation tools, there’s a lack of interpretability of what the neural network and black box models are actually doing,” Ji continued. “It’s difficult for doctors to explain to patients why we trust the model, and there’s a lack of trust and confidence as a result.”

Ji considered a brain tumor segmentation dataset of 369 subjects with low- and high-grade gliomas and the corresponding images from four standard magnetic resonance imaging (MRI) scans. She sought to determine whether the results were pulling contributions from all four images in the set or relying on a subset of only one or two images. In short, she wanted to utilize a DNN to identify the contributions of each image.

Ji embedded a neural ODE into the process of transitioning from input images to output segmentation. Neural ODEs, which can be trained efficiently with black-box ODE solvers and the adjoint method, have several advantages; for instance, they are flexible when learning from irregularly sampled time series, and adjoint-based training requires memory that stays constant with respect to depth, which keeps the memory cost low.
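To make the idea of a neural ODE concrete, the sketch below treats feature extraction as a continuous process: a hidden state evolves under a small parameterized vector field, and the "forward pass" is simply a numerical ODE solve. Only the forward solve is shown, using a hand-written fixed-step Runge-Kutta integrator; the adjoint method for computing gradients is not implemented. The vector field, its random weights, and the names `dynamics` and `odeint_rk4` are hypothetical and unrelated to the network used in the talk.

```python
import numpy as np

def dynamics(h, t, W, b):
    """Hypothetical vector field f(h, t; theta); time-independent here."""
    return np.tanh(W @ h + b)

def odeint_rk4(f, h0, t0, t1, n_steps, *params):
    """Fixed-step classical RK4 solve of dh/dt = f(h, t) from t0 to t1.

    Only the current state is stored, so forward memory does not grow
    with the number of steps.
    """
    h, t = h0.astype(float), t0
    dt = (t1 - t0) / n_steps
    for _ in range(n_steps):
        k1 = f(h, t, *params)
        k2 = f(h + 0.5 * dt * k1, t + 0.5 * dt, *params)
        k3 = f(h + 0.5 * dt * k2, t + 0.5 * dt, *params)
        k4 = f(h + dt * k3, t + dt, *params)
        h = h + (dt / 6.0) * (k1 + 2 * k2 + 2 * k3 + k4)
        t += dt
    return h

# Random stand-ins for learned parameters and an input feature vector.
rng = np.random.default_rng(0)
W = 0.5 * rng.standard_normal((4, 4))
b = np.zeros(4)
h0 = rng.standard_normal(4)

h1 = odeint_rk4(dynamics, h0, 0.0, 1.0, 20, W, b)
print("features at t=0:", h0)
print("features at t=1:", h1)
```

Replacing the integrator with a single Euler step of size one recovers the familiar residual update `h + f(h)`, which is one common way to motivate viewing deep feature extraction as a continuous process.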

“We assume that the deep feature extraction in a DNN-based glioma segmentation can be modeled by a continuous process,” Ji said. She used a contribution map (and corresponding contribution factor and curve) to compare the contribution of one particular image to others in the set. “This tool helps us evaluate the contributions of each individual MRI input in the segmentation mask,” Ji said.

Ultimately, Ji’s neural ODE model revealed that two of the four images in the original set of glioma scans yielded results close to the ground truth, while the other two contributed minimally; the contribution curve affirmed this assessment. Such a result opens the door to practitioner experimentation with the number of images that influence the segmentation process. “This is a visualization tool,” Ji said. “Hopefully we can help the medical practitioners realize that you may not need all four images for some tasks.”

About the Author

Lina Sorg

Managing editor, SIAM News

