Inspired by Nature: Dynamic Graphs and Their Applications

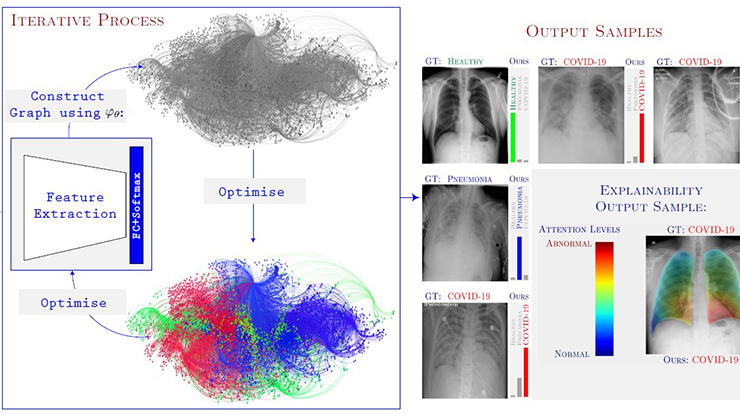

Graphs are powerful mathematical structures that have been the subject of study for at least 300 years. But in the contemporary era of constant and capacious data collecting, graphs must now be able to change with the surrounding world. Dynamic graphs—networks whose properties are a function of time—arise naturally in wide-reaching applications, from political science to satellite communication. These beautiful objects support both a rich theory and, with increased computing power, tractable algorithms. Figure 1 offers a small sample of dynamic networks.

![<strong>Figure 1.</strong> Four diverse contexts in which dynamic networks naturally arise. <strong>1a.</strong> A NASA satellite network with ever-changing connectivity as the Earth proceeds in its orbit. The vertices are satellites or relays and the edges indicate the paths of possible signals. <strong>1b.</strong> A developing mouse embryo, where each cell is a vertex. The heterogeneous edges represent physical proximity, chemical signaling, and lineage. <strong>1c.</strong> A commuter network of the New York City metropolitan area. The vertices are counties and the edges signify the populations that commute from one county to another. These population flows change over time. <strong>1d.</strong> A “parse tree” for a dynamic network. This presentation can help researchers analyze dynamic networks that arise in theoretical computer science. Figure 1a courtesy of [10], 1b courtesy of [2], 1c courtesy of [6], and 1d courtesy of [2].](/media/eabgomju/figure1.jpg)

Static Graph Theory Is Insufficient

It is tempting to treat dynamic graphs as simply a collection of static graphs in a particular order; after all, the standard notation and definitions for these objects are exactly that. We can construct a small example to test whether static graph theory can adequately explain dynamic graphs.

Figure 2 illustrates a discrete-time dynamic network whose four vertices form a directed cycle. At even timesteps, the cycle is clockwise; at odd timesteps, it is counterclockwise. Formally, \(V \triangleq \{ v_1, v_2, v_3, v_4 \}\) is our vertex set, with the top left corner as \(v_1\) and labels proceeding in a clockwise direction. At timestep \(t \in \mathbb{N}\), \(E_{t} \subseteq V \times V\) represents the edges. We define \(E_t\) as

\[E_{t} \triangleq \begin{cases} \left\{ (v_1, v_2), (v_2, v_3), (v_3, v_4), (v_4, v_1) \right\} & t \text{ odd} \\ \left\{ (v_2, v_1), (v_3, v_2), (v_4, v_3), (v_1, v_4) \right\} & t \text{ even.} \end{cases}\]

![<strong>Figure 2.</strong> An alternating dynamic network. This discrete-time dynamic network is a directed cycle that moves clockwise at odd timesteps and counterclockwise at even timesteps. A smiley face marks the starting position of our game. Figure courtesy of [2].](/media/eodnrqjf/figure2.jpg)

Let’s play a game: place a smiley face on the dynamic network and move it along one edge of the graph at every timestep (see Figure 2). We can define a dynamical system where \(x_{t} \in V\) is the position of the smiley face at timestep \(t\). Then \(x_{t} \in N^{t - 1}(x_{t - 1})\), where \(N^{t - 1}(u) \triangleq \{ v \colon (u, v) \in E_{t - 1} \}\), i.e., the neighborhood of vertex \(u\) at timestep \(t - 1\). Given this setup, we ask the following question: If we place the smiley face on the first vertex (such that \(x_{1} \triangleq v_1\)), which vertices can it reach?

In static graph theory, each graph is strongly connected; without other tools, we may want to conclude that the smiley face can reach all vertices. But if we play this game for several steps, we see that the smiley face is restricted to \(v_1\) for all odd timesteps and \(v_2\) for all even timesteps. Indeed, we note that the neighborhood for each vertex consists of just one other vertex and then proceed by induction (an exercise left to the reader).

This example illustrates the maxim \(\scriptstyle{\textrm{LOCAL}} \neq \scriptstyle{\textrm{GLOBAL}}\). In short, local (i.e., static) graph properties cannot reliably predict global (i.e., dynamic) properties. To answer our earlier question, we must introduce a slew of new definitions. In particular, we define dynamic connectivity by specifying that two vertices are dynamically connected if one can reach the other at all timesteps. We can leverage this definition to conclude that our example is a dynamically disconnected graph. For full details, see [10].

When Is Static the Same as Dynamic?

Reinventing graph theory from scratch in a dynamic context would be incredibly challenging. Although there are currently no general necessary and sufficient conditions to link static and dynamic network properties, these settings do coincide under certain conditions.

![<strong>Figure 3.</strong> A frame of the basketball game with the constructed passing network. Green circles with Latin letters are offensive players, while red circles with Greek symbols are defensive players. Figure courtesy of [2].](/media/es4l5zw1/figure3.jpg)

It is important to understand that dynamic networks are inherently directed because we generally take the arrow of time for granted; in other words, we assume that we cannot travel backwards in time in dynamic networks. Because of this property, certain static graph algorithms work essentially unchanged in the dynamic setting. For example, Dijkstra’s algorithm to find the shortest path1 between vertices works with a mild generalization [11].

Beyond Dijkstra’s algorithm, the addition of self-loops is another way to bridge the gap between static and dynamic properties. If we reanalyze the problem from the previous section with the network in Figure 2 and also assume that every vertex has an edge to itself at every timestep, then suddenly our network is dynamically connected; the smiley face can reach any vertex from any other vertex by taking some timesteps to wait at its current location. This observation gives rise to a general theorem: a dynamic network with self-loops is fully dynamically connected if the network is strongly connected at every timestep [10]. By adding self-loops, the static property correctly predicts the dynamic one.

In fact, the pattern is even more general than that; with the addition of self-loops, we can align a variety of static and dynamic properties [1], like a notion of diameter, connectivity results in Erdős-Rényi graphs [7-9], and graph clustering [4, 5]. Although there is no general theorem as of yet, we believe that the addition of self-loops enables the conversion of almost any static property into a dynamic one.

Machine Learning and Basketball

As a passionate Blue Devil, I eagerly anticipate the Duke University basketball season each year.2 Even such a wildly popular sport cannot escape dynamic graph theory.

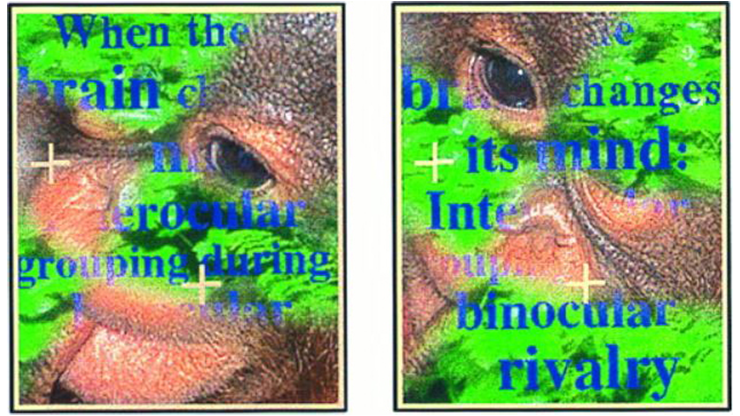

My collaborators and I conducted a project that began with data from an array of cameras atop Duke's Cameron Indoor Stadium; in particular, we used a sequence of player positions at a rate of 24 frames per second.3 We then constructed passing networks for each frame: starting with a complete graph where the offensive players are nodes, we removed edges if a defensive player occluded the straight line of sight between a pair of offensive ones. Figure 3 depicts a passing network for a single frame.

![<strong>Figure 4.</strong> Sample library of graphs for a possession in a basketball play, descending in order of frequency from left to right and top to bottom. Figure courtesy of [2].](/media/mupbb0n4/figure4.jpg)

Next, we generated a “library” of possible passing configurations that provides a sort of lexicon for basketball plays (see Figure 4); this allowed us to utilize natural language processing (NLP) techniques—rather than computer vision—to study this data. Based on this passing network library, we constructed individual tokens for each graph and strung together sentences. Using the well-known transformer model [12] in sequence-to-sequence mode, we could guess possible future trajectories for each basketball player, producing accurate near-future predictions in practice [3].

This result is surprising, as it seems odd that a core NLP technique can predict geometric data, especially since the neural networks that we employed for NLP purposes had poor graph reasoning skills. However, the dynamic networks allowed us to extract “semantic” information from basketball plays. The subtle distinction is that we did not feed geometric data or graphs directly into an NLP system; instead, we used dynamic networks as an intermediate representation to generate tokens, which are squarely an NLP competency. The result is a system that can produce accurate predictions for geometric data (though not on a long enough timescale to predict a perfect bracket).

Conclusions

From NASA scientists to hardcore basketball fans, dynamic networks have an unsung wide appeal. In this contemporary era where we have more data than capacity to analyze it, dynamic networks will continue to be a foundational structure with which to study the changing world around us.

1 Dynamic paths are formally called journeys.

2 I assume that this enthusiasm is relative to the mathematical population; compared to the set of all Duke fans, I am decidedly below average.

3 This data was generously provided by SportVU.

Devavrat Vivek Dabke delivered a minisymposium presentation on this research at the 2023 SIAM Conference on Computational Science and Engineering (CSE23), which took place in Amsterdam, the Netherlands. He received funding to attend CSE23 through a SIAM Student Travel Award. To learn more about Student Travel Awards and submit an application, visit the online page.

SIAM Student Travel Awards are made possible in part by the generous support of our community. To make a gift to the Student Travel Fund, visit the SIAM website.

References

[1] Cleveland, J., Hylton, A., Short, R., Mallery, B., Green, R., Curry, J., … Freides, O. (2022). Introducing tropical geometric approaches to delay tolerant networking optimization. In 2022 IEEE aerospace conference (AERO). Big Sky, MT: Institute of Electrical and Electronics Engineers.

[2] Dabke, D.V. (2023). On systems of dynamic graphs: Theory and applications [Ph.D. thesis, Princeton University]. DataSpace at Princeton University. Retrieved from https://dataspace.princeton.edu/handle/88435/dsp010z709070k.

[3] Dabke, D.V., & Chazelle, B. (2021). Extracting semantic information from dynamic graphs of geometric data. In Complex networks and their applications X: Proceedings of the tenth international conference on complex networks and their applications (pp. 474-485). Studies in computational intelligence (Vol. 1073). Cham, Switzerland: Springer Nature.

[4] Dabke, D.V., & Dorabiala, O. (2023). A novel method for vertex clustering in dynamic networks. In Complex networks and their applications XII: Proceedings of the twelfth international conference on complex networks and their applications (pp. 445-456). Studies in computational intelligence (Vol. 1142). Cham, Switzerland: Springer Nature.

[5] Dabke, D.V., & Dorabiala, O. (2024). Vertex clustering in diverse dynamic networks. PLOS Complex Syst., 1(4), e0000023.

[6] Dabke, D.V., Karntikoon, K., Aluru, C., Singh, M., & Chazelle, B. (2023). Network-augmented compartmental models to track asymptomatic disease spread. Bioinform. Adv., 3(1), vbad082.

[7] Dorabiala, O., & Dabke, D.V. (2024). Vertex clustering in diverse dynamic networks. PLOS Complex Syst., 1(4), e0000023.

[8] Erdős, P., & Rényi, A. (1959). On random graphs. Publ. Math. Debrecen, 6, 290-297.

[9] Erdős, P., & Rényi, A. (1960). On the evolution of random graphs. Publ. Math. Instit. Hungarian Acad. Sci., 5, 17-61.

[10] Hylton, A., Short, R., Cleveland, J., Freides, O., Memon, Z., Cardona, R., … Mallery, B. (2022). A survey of mathematical structures for lunar networks. In 2022 IEEE aerospace conference (AERO). Big Sky, MT: Institute of Electrical and Electronics Engineers.

[11] Szcześniak, I., & Woźna-Szcześniak, B. (2023). Generic Dijkstra: Correctness and tractability. In NOMS 2023-2023 IEEE/IFIP network operations and management symposium. Miami, FL: Institute of Electrical and Electronics Engineers.

[12] Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A.N., … Polosukhin, I. (2017). Attention is all you need. In U.V. Luxburg, I. Guyon, S. Bengio, H. Wallach, R. Fergus, S.V.N. Vishwanathan, & R. Garnett, Advances in neural information processing systems 30: 31st international conference on neural information processing systems (NIPS 2017). Long Beach, CA: Curran Associates.

About the Author

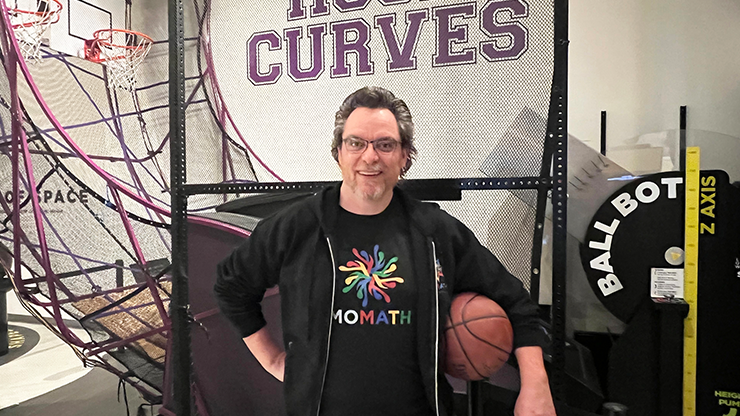

Devavrat Vivek Dabke

Principal quantitative scientist, Level Ventures

Dev Dabke is a mathematician by training and the principal quantitative scientist at Level Ventures, a preeminent venture capital fund manager in New York City. His research focuses on dynamic networks, machine learning (ML), and ML infrastructure. Dabke earned his Ph.D. in applied mathematics from Princeton University and his B.S. from Duke University, making him a fierce Blue Devil.

Stay Up-to-Date with Email Alerts

Sign up for our monthly newsletter and emails about other topics of your choosing.