On Fairness and Foundations in Machine Learning

Machine learning (ML) is revolutionizing many aspects of society, but its widespread adoption raises concerns about transparency, fairness, and trustworthiness. An increasing reliance on ML in critical domains like healthcare and criminal justice necessitates a thorough examination of the fairness and reliability of these systems. Traditional ML algorithms often prioritize overall accuracy, potentially leading to inequitable outcomes for specific subgroups within the data. For instance, an algorithm that is trained on a dataset with an imbalanced representation of different demographics may exhibit biased predictions towards certain groups. To address this issue, we must develop techniques that ensure fairness and mitigate bias.

Fairness Improvement in Nonnegative Matrix Factorization

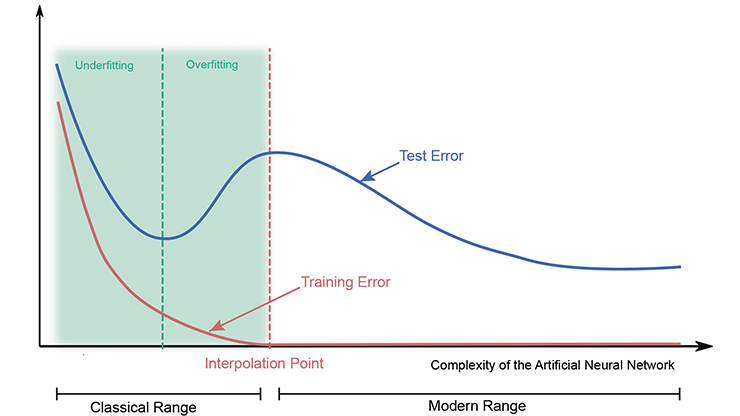

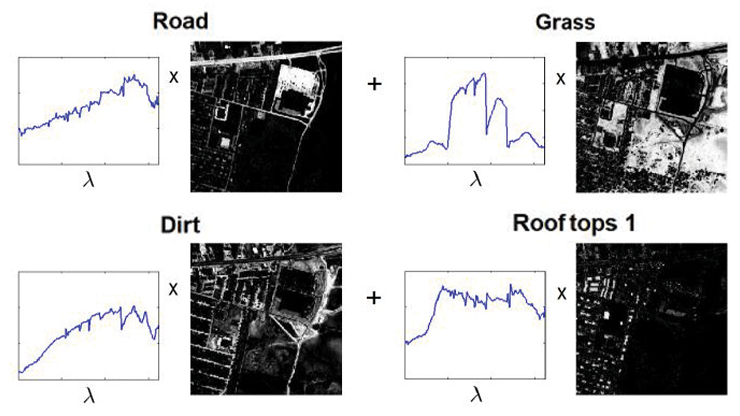

Full ML transparency requires understanding the algorithmic decision-making process and ensuring that these decisions are interpretable and justifiable. Nonnegative matrix factorization (NMF) is a ML technique that identifies latent themes or patterns in large datasets. Given a nonnegative matrix \(\mathbf{X} \in \mathbb{R}^{m \times n}_{\geq 0}\) (e.g., a matrix that represents data samples as rows and features as columns) and a desired lower dimension \(r\) (also called a nonnegative rank), NMF aims to decompose \(\mathbf{X}\) into a product of two lower-dimensional nonnegative matrices: (i) the representation matrix \(\mathbf{W} \in \mathbb{R}^{m \times r}_{\geq 0}\) and (ii) the dictionary matrix \(\mathbf{H} \in \mathbb{R}^{r \times n}_{\geq 0}\). The goal is for these factors to approximately explain the data, as in

\[\mathbf{X} \approx \mathbf{WH}.\]

We often interpret the rows of \(\mathbf{H}\) as topics that capture underlying patterns within the data. Each row of \(\mathbf{W}\) then provides an approximate representation of the corresponding data sample in the lower-dimensional space that the topics span.

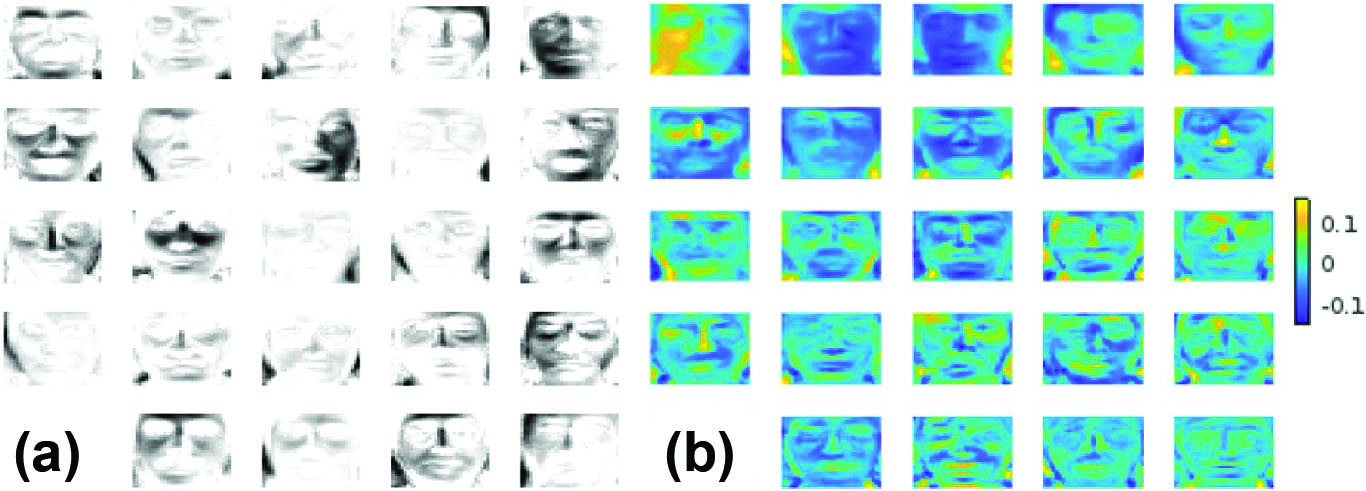

Because of the imposed nonnegativity, NMF learns interpretable building blocks of the data. For example, a 1999 seminal paper considers data that consist of (vectorized) images of human faces [3]. The “topics” then comprise parts of the face—eyes, noses, ears, and mouths—and the representation vectors encode the way in which those parts rebuild each face. In Figure 1a, we mimic this experiment and run a simple NMF (with rank \(r=25\)) on the Yale Face Database. This method is in contrast to other approaches such as principal component analysis, where the components do not have the same interpretability as “parts” of the face (see Figure 1b).

The most common formulation for NMF approximations uses the Frobenius norm to measure reconstruction error:

\[\text{argmin}_{\mathbf{W}\in \mathbb{R}^{m \times r}_{\geq 0}, \, \mathbf{H}\in \mathbb{R}^{r \times n}_{\geq 0}} ||\mathbf{X} - \mathbf{WH}||^2_F. \tag1\]

Many numerical optimization techniques can help us find local minima for the NMF problem in \((1)\), such as alternating minimization—i.e., solving a nonnegative least squares problem in one variable while fixing the other and repeating—or multiplicative updates, which serve as an (entrywise) projected gradient descent algorithm [1, 4].

A Look at Fairness

![<strong>Figure 2.</strong> An example of inequitable results on synthetic data when nonnegative matrix factorization is applied to the entire population. The relative reconstruction error of each group is a function of rank. Shading indicates the standard deviation over 10 trials. Figure courtesy of [2].](/media/jvtfaeqc/figure2.jpg)

We now consider a novel perspective on NMF [2]. Like many objective functions, NMF seeks to minimize the total reconstruction error — which implies that the method provides low approximation error on average. But because it sums over the entire population, NMF is also agnostic to groups within the population that may have different sizes or complexities. For instance, simply scaling a single data point by an enormous magnitude will force the NMF factors to account for that scale. Although we can handle this straightforward example by normalizing the data points, its incidence highlights an important phenomenon that also occurs in populations with large majority groups or groups with high complexity, which are much harder to address.

As a simple motivating synthetic example, we generate a data matrix \(\mathbf{X} \in \mathbb{R}_{\geq 0}^{m \times n}\) as \(\mathbf{X} = \mathbf{W} \mathbf{H}\), where \(\mathbf{W} \in \mathbb{R}_{\geq 0}^{m \times r}\) and \(\mathbf{H} \in \mathbb{R}_{\geq 0}^{r \times n}\) are sampled from a uniform distribution over \([0, 1)\). We consider three groups: (i) large size with low complexity (\(m_1=1000\), \(n=20\), and \(r_1=3\)), (ii) medium size with low complexity (\(m_2=500\), \(n=20\), and \(r_2=3\)), and (iii) small size with high complexity (\(m_3=250\), \(n=20\), and \(r_3=6\)). Even in this comparatively unextreme and simple case, each group experiences vastly different relative errors, defined as \(\| \mathbf{G} - \mathbf{W} \mathbf{H}\|/\|\mathbf{G}\|\) for subdata \(\mathbf{G}\) (see Figure 2). This discrepancy may lead to unfairness for several reasons. If we employ a method like NMF for topic modeling to understand a set of data, some groups within the population will be less explained than others. And if we utilize NMF for classification or feature extraction, those underrepresented groups may experience far worse predictions, classifiers, allocation of resources, and so forth.

Towards a Fairer NMF Formulation

Although there are many sources of bias and inequity in ML, we focus on the lack of fairness in NMF that stems from an imbalance in group size and/or group complexity [2]. To overcome such imbalances, we propose an approach called Fairer-NMF, with a fairer objective function that is defined as

\[\min_{\mathbf{W} \in \mathbb{R}^{m \times r}_{\geq 0}, \, \mathbf{H} \in \mathbb{R}^{r \times n}_{\geq 0}} \quad \max_{\ell \in \{1, \cdots, L\}} \left\{ \frac{\|\mathbf{X}_{\ell} - \mathbf{W}_{\ell}\mathbf{H}\| - E_{\ell}}{\|\mathbf{X}_{\ell}\|} \right\}. \tag2\]

Here, \(\mathbf{X}_{\ell}\) is the data matrix for group \(\ell\), \(\mathbf{W}_{\ell}\) is the representation matrix for group \(\ell\), \(\mathbf{H}\) is the common dictionary matrix, and \(E_{\ell}\) is the estimated optimal (nonnegative) rank-\(r\) reconstruction error for group \(\ell\). Each part of this modified objective seeks to mitigate the unfairness from different sources. The normalization—via the norm of \(\mathbf{X}_{\ell}\)—attempts to account for groups of dissimilar sizes or magnitudes, and the subtraction of \(E_{\ell}\) in the numerator addresses the varying complexity of the groups. For instance, if group \(\ell\) has low complexity (meaning that \(E_{\ell}\) is small), then the objective incurs a greater penalty if the learned factors do not explain that group well (i.e., the numerator will be larger). The numerator thus attempts to “renormalize” each group’s complexities. A reasonable choice for \(E_{\ell}\) could be

\[E_\ell = \mathbb{E}_{\mathbf{X}_{\ell}^*}\|\mathbf{X}_{\ell} - \mathbf{X}_{\ell}^*\|,\]

where the expectation is taken over the \(\mathbf{X}_{\ell}^*\) that we obtain from a specific randomized implementation of rank-\(r\) NMF on \(\mathbf{X}_{\ell}\). We can empirically approximate this quantity by averaging over NMF outputs for each individual group.

This formulation aims to minimize the maximum relative reconstruction loss across all groups, balancing for size and adjusting for group complexity to ensure an impartial representation. Ultimately, we hope that our method will allow for a more equitable explanation of data, prediction, and classification outcomes in the context of the entire population.

Readers may wonder why we do not individually perform NMF on each group in the population, thus eliminating the need to rebalance the overall objective. It turns out that this approach typically does not yield desirable outcomes, especially for small groups. In most learning applications, small groups still share a lot of information and similarities with the rest of the population; as such, learning about them within the entire population is far better than extracting a group and working with much less informative data. And in some cases, the goal of the learning task is to determine the correlations and interactions between groups, which means that we must consider them all together. Of course, identifying such groups within the population might itself be an unfair task that introduces bias. All of these considerations are important on an application by application basis.

![<strong>Figure 3.</strong> The application of Fairer-NMF to the synthetic data matrix. <strong>3a.</strong> Fairer-NMF loss with the alternating minimization scheme. <strong>3b.</strong> Fairer-NMF relative error with the same scheme. Figure courtesy of [2].](/media/blvjkbmj/figure3.jpg)

Numerical Experiments

We will now present several experiments that utilize Fairer-NMF [2]. First, we run Fairer-NMF on the same simple synthetic data from Figure 2. Due to the method’s construction, the loss—i.e., the term that is optimized in \((2)\)—is the same for all three aforementioned groups in this case (see Figure 3a). Given this unsurprising result, we can also ask about the relative reconstruction error \((\|\mathbf{X} - \mathbf{W} \mathbf{H}\|_F / \|\mathbf{X}\|_F)\) for each group (see Figure 3b). When compared to Figure 2, our approach does appear to provide what is perhaps a fairer relative error, especially for higher ranks.

![<strong>Figure 4.</strong> Losses and relative errors for the 20 Newsgroups dataset. <strong>4a.</strong> Standard nonnegative matrix factorization (NMF) loss applied to the data. <strong>4b.</strong> Fairer-NMF loss (alternating minimization scheme). <strong>4c.</strong> Standard NMF relative error. <strong>4d.</strong> Fairer-NMF relative error (alternating minimization scheme). Figure courtesy of [2].](/media/g2yhes2n/figure4.jpg)

Next, we showcase our technique on the 20 Newsgroups dataset: a common benchmark dataset for document classification, clustering, and topic modeling that contains six groups of news data on six different topics. This dataset exemplifies a population that comprises a large corpus of text that reflects individuals’ opinions about specific subjects, reviews of products, and a variety of other materials; the goal is to understand the data at a high level. Figure 4a shows that standard NMF produces very different loss values for each group in the data. In Figure 4b, Fairer-NMF produces equitable losses for all groups as designed.

Interestingly, the standard NMF relative errors appear to be more “equitable,” in the sense that they are closer to one another than in the Fairer-NMF variant (see Figures 4c and 4d). For the latter, the “Sale” group experiences much lower relative error than the other groups because of the objective function’s design; the “Sale” group had the highest standard loss (likely due to complexity), and equation \((2)\) forced a boosting of the group, as intended. This action may or may not be fairer — an inconsistency that affirms that “fair” is highly dependent on application, and loss functions should be designed to suit the situation at hand. We believe that mathematicians have a duty to highlight possible inequities and present understandable options for end users, and these results move us towards that intention.

To conclude, Fairer-NMF is a promising approach that addresses bias in NMF, ultimately promoting equitable data representation across different groups. Here, we highlighted the importance of fairness in ML to pave the way for the development of more equitable and trustworthy algorithms. But while Fairer-NMF does drive the research community closer to fairer ML, we must remember that fairness is highly context dependent. As such, we should carefully consider the choice of fairness criteria and potential tradeoffs based on the application in question. Further research is needed to address limitations, such as a priori knowledge of group partitions.

References

[1] Bertsekas, D.P. (1995). Nonlinear programming. Nashua, NH: Athena Scientific.

[2] Kassab, L., George, E., Needell, D., Geng, H., Nia, N.J., & Li, A. (2024). Towards a fairer non-negative matrix factorization. Under review.

[3] Lee, D.D., & Seung, H.S. (1999). Learning the parts of objects by non-negative matrix factorization. Nature, 401(6755), 788-791.

[4] Lee, D.D., & Seung, H.S. (2001). Algorithms for non-negative matrix factorization. In NIPS’00: Proceedings of the 14th international conference on neural information processing systems (pp. 535-541). Denver, CO: MIT Press.

About the Authors

Erin George

Ph.D. candidate, University of California, Los Angeles

Erin George is a Ph.D. candidate in mathematics at the University of California, Los Angeles. Their research is in machine learning (ML) theory and fair ML.

Lara Kassab

Assistant adjunct professor, University of California, Los Angeles

Lara Kassab is an assistant adjunct professor in the Department of Mathematics at the University of California, Los Angeles. She earned her Ph.D. in mathematics from Colorado State University in 2021. Kassab’s research focuses on mathematical data science.

Deanna Needell

Professor, University of California, Los Angeles

Deanna Needell is a professor of mathematics, the Dunn Family Endowed Chair in Data Theory, and executive director of the Institute for Digital Research and Education at the University of California, Los Angeles. She is the recipient of an Alfred P. Sloan Research Fellowship, a U.S. National Science Foundation Faculty Early Career Development Program (CAREER) award, and the IMA Prize in Mathematics and its Applications. Needell is a 2024 SIAM Fellow and a fellow of the American Mathematical Society.

Related Reading

Stay Up-to-Date with Email Alerts

Sign up for our monthly newsletter and emails about other topics of your choosing.