Preserving Data Security with Federated Learning, Differential Privacy, and Sparsification

In today’s increasingly connected world, the growing number of advanced technologies within the Internet of Things (IoT) is raising significant concerns about data privacy and security. “The IoT is a network of physical devices, vehicles, home appliances, and other items embedded with sensors, software, and network connectivity that enables them to collect and exchange data,” Rui (Zoey) Hu of the University of Nevada, Reno said. “As the IoT market keeps increasing, we need an efficient and secure method to handle the large amounts of sensitive data.”

Indeed, the IoT comprises a myriad of technologies—including smartphones, GPS trackers, home security systems, electronic wearables, and the like—that are ubiquitous in everyday life. Every time these devices send data to the cloud (where it is stored on remote servers), they incur an expensive communication cost and introduce the risk of privacy leakage. During the 2024 SIAM Conference on Mathematics of Data Science, which is currently taking place in Atlanta, Ga., Hu explored the integration of federated learning (FL), differential privacy (DP), and sparsification to protect sensitive data and safeguard user privacy.

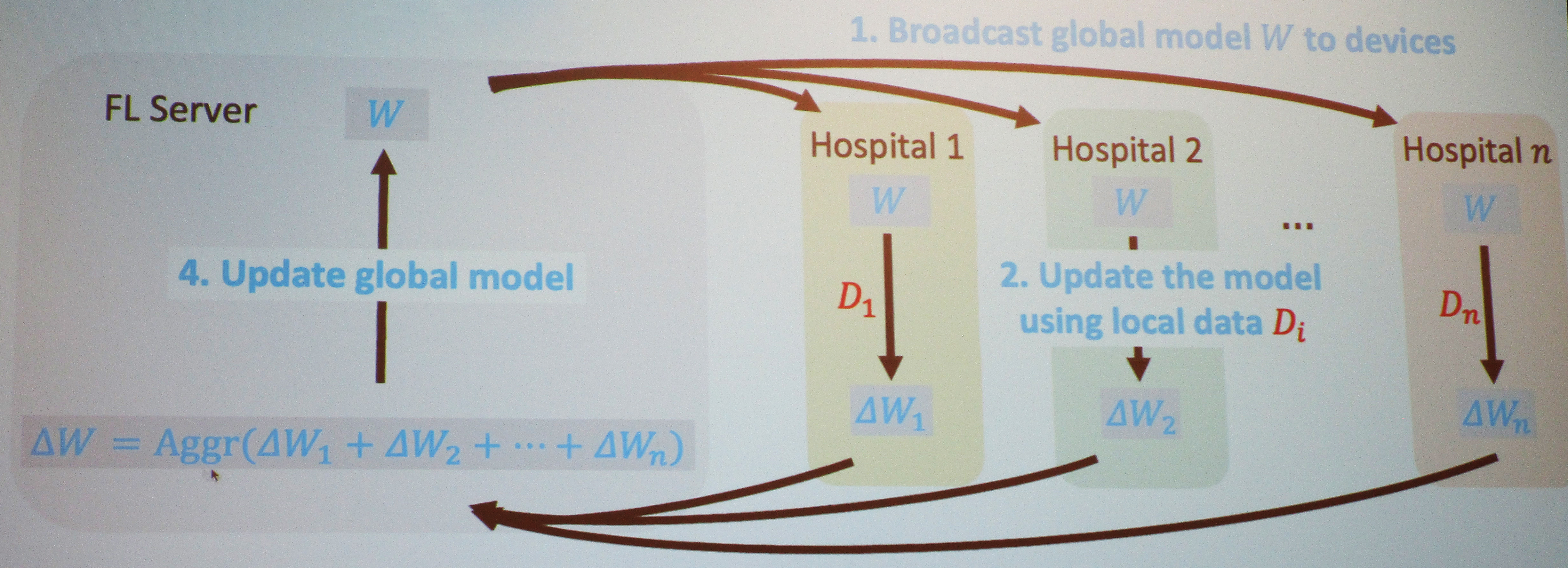

In the last decade, FL has become an important model training technique that addresses some of the privacy concerns about existing machine learning (ML) frameworks. This method collaboratively trains a ML model across multiple IoT devices without exchanging raw data with the cloud server, reducing both the chance of privacy leakage and the computational costs of raw data transmission. It can also be applied in a group setting and is a good solution when institutions that handle sensitive data—such as hospitals—do not wish to share their data but still want to work together to create powerful models.

For example, consider a hospital network that seeks to train a global model via an FL server (see Figure 1). First, the server would broadcast a shared model to all devices in the network; the hospitals would next use their own local data to update the model and transmit this update to the servers in the form of model parameters, which contain far less sensitive information than raw data. The servers would then update the global model accordingly. “You get a new or updated global model for the next round of training,” Hu said, all while retaining local data. The process continues until the global model converges.

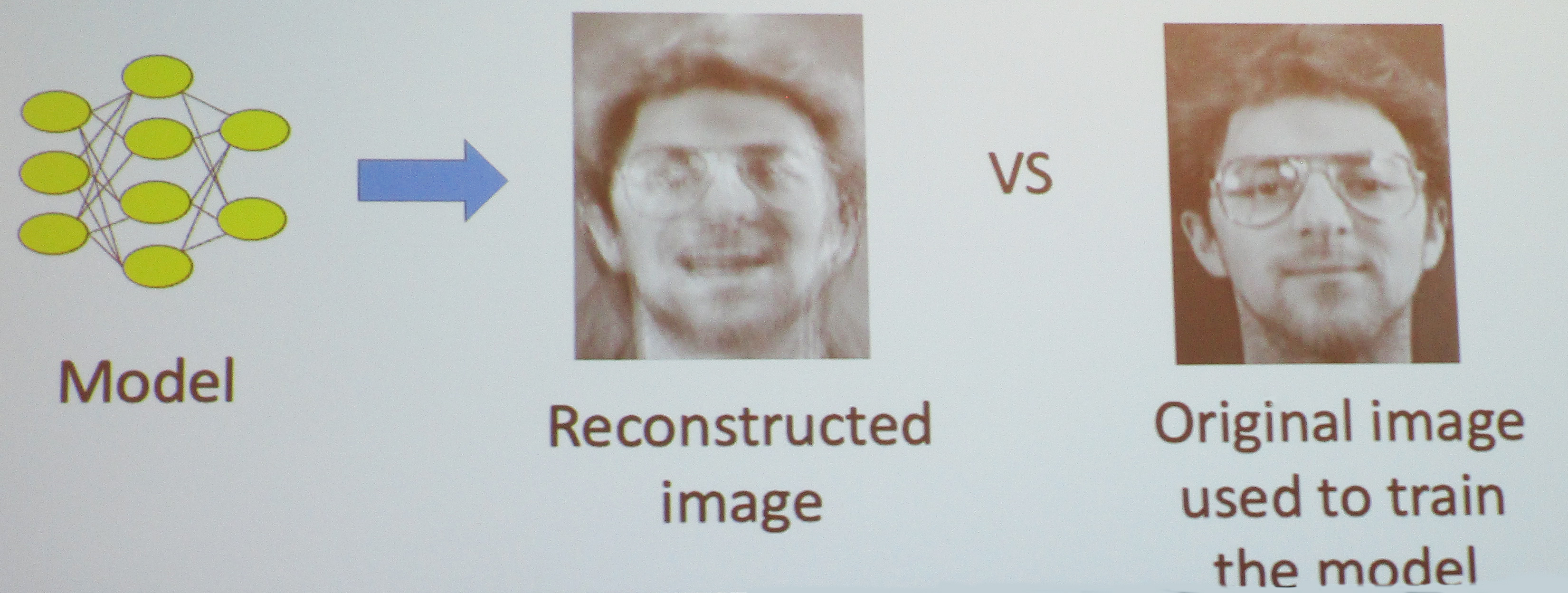

Despite its successes, FL is not a perfect solution to privacy protection. During privacy inference attacks, bad actors use model parameters to reconstruct the data that trained the original model — data that may consist of private facial images or other sensitive material (see Figure 2). Attackers can take the form of malicious outsiders or even network participants (i.e., another hospital or the server itself) who are simply curious about other contributors’ private data.

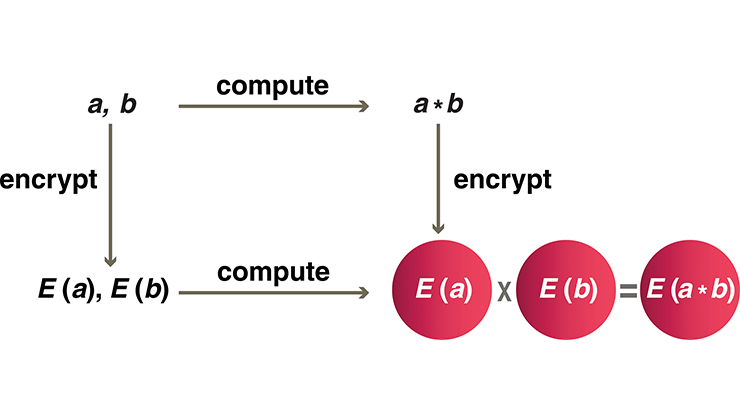

The inclusion of DP within the FL framework helps to thwart such attacks. “The goal is to ensure that the trained model is indistinguishable, regardless of whether a particular data owner participates in FL,” Hu said. “If an attacker gets the training model, they can’t distinguish whether your data was used to train the model, so they don’t know anything about the data.” DP-FL involves two neighboring datasets that differ by at most one user’s data, upon which two slightly different models are trained. The distribution of these models should be quite similar because a close resemblance obscures the data’s impact, preventing the attacker from recovering and leveraging it. This setup achieves a rigorous privacy guarantee.

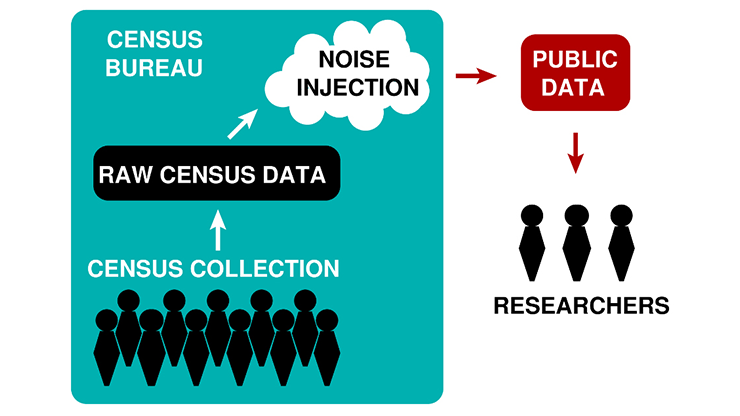

Hu explained that a series of meticulous theoretical analyses underlie the concept of DP. Of these, the Gaussian mechanism is especially popular in the ML world. For DP-FL, Hu utilizes Gaussian noise to perturb the values of the model parameters before the device sends the local model updates—which are now protected by random noise—to the server. “If the server is curious about privacy, it has no way to infer anything about your local training data,” she said.

While an increasingly large amount of noise correlates with a smaller loss of privacy, it also degrades model utility in what is called the privacy-utility tradeoff. “If you add too much noise to a system, that gives a bad utility,” Hu said. Although much of the existing literature attempts to reduce the noise magnitude for each model parameter, Hu wants to reduce the overall size of the noise — and thus the dimension of the model. To do so, she applies sparsified model perturbation with DP-FL. Sparsifying the model prior to the addition of noise eliminates some of the parameters from the update without sacrificing utility. There are two different methods of sparsification: rand-\(k\) sparsification and top-\(k\) sparsification. The rand-\(k\) sparsifier randomly selects \(k\) coordinates to keep, while the top-\(k\) sparsifier retains the top \(k\) coordinates with the largest absolute values.

Hu demonstrated the results of both sparsifiers on multiple datasets. Top-\(k\) sparsification exhibited a superior performance by increasing accuracy by nine percent (versus rand-\(k\)’s five percent) and reducing uplink communication costs. Finally, Hu noted that DP-FL with sparsification also helps to prevent Byzantine attacks, which occur when a trusted machine in a distributed system sends malicious information (rather than actual results) to the server.

About the Author

Lina Sorg

Managing editor, SIAM News

Lina Sorg is the managing editor of SIAM News.

Related Reading

Stay Up-to-Date with Email Alerts

Sign up for our monthly newsletter and emails about other topics of your choosing.