Taming the Chaos of Computational Experiments

Today’s computational experiments form the basis of scientific simulations and data analysis methods that will fuel future scientific advancements, engineering-based designs and evaluations, government policy decisions, and much more. For this reason, it is crucial that we—as computational scientists—discuss the importance of best practices in experimentation.

The ease with which we can repeat experiments is both a blessing and a curse. We can change our codes with just a few keystrokes and run them repeatedly with different random initializations and conditions. However, the flexibility of rapid iteration often leads to disorganized workflows, undocumented changes, and unreproducible results. What advice should we follow? What type of training should we provide for our students? How can we ensure that computational science maintains the necessary rigor?

As part of this discussion, I hope that we also consider the importance of involving all authors in computational experiments. While each author need not write their own code and run it independently, everyone should understand the experiments: the inputs, codes, individual outputs, and summary results. Although this strategy will not guarantee infallibility, it greatly lowers the probability that issues go unnoticed. Scientists frequently feel pressure to publish and produce “results” and may rely on shortcuts, such as the use of artificial intelligence, to “help” with certain aspects of coding or analysis. Together, coauthors can support one another to ensure that their experiments are rigorous and scientifically valid. These principles are important for the intellectual standing of our field, not to mention individual reputations [2].

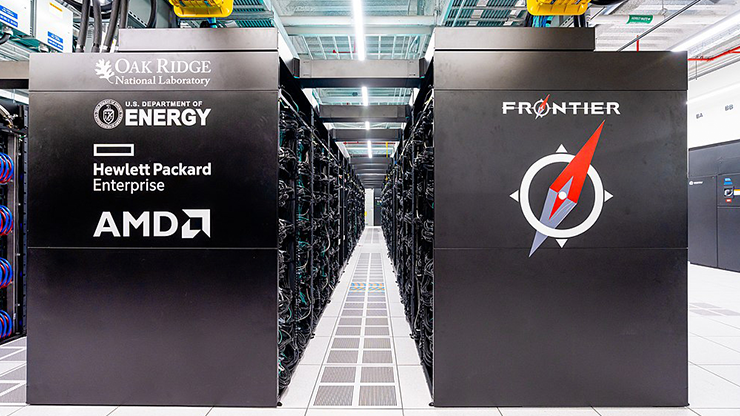

In this article, I will share seven pragmatic practices for improving the replicability, validation, reproducibility, and usability of computational experiments. I present these suggestions—which are designed to be adaptable for situations that range from Python code on a laptop to high-performance C++ code on a supercomputer—in rough order of implementation difficulty.

I recognize that religiously following these practices is not necessarily easy. As I was preparing this text, I was humbled to discover that I had failed to heed my own advice and was having trouble reproducing some recent experiments! Moreover, I hope that you won’t agree with everything I have to say; I would be delighted to inspire collective discussion and debate on how to increase the utility of computational science research for ourselves and the world at large.

With that in mind, here are my recommendations.

Use Version Control (Preferably Git)

Version control is essential. It allows you to track changes in your code and retrieve any past version that has been “checked in” to the version-controlled repository. Past versions are generally referenced via commit hashes or named tags. Git is the recommended tool.

Online Git hosting services such as GitHub, GitLab, Bitbucket, and Codeberg enable collaboration and sharing. GitHub stands out due to its integration with Overleaf for paper writing, while Codeberg is unique because it is run by a nonprofit.1 I strongly discourage Google Drive or Dropbox for versioning code, as they lack the granularity and traceability of Git. If you’re new to Git, the free Pro Git book is an excellent starting point [1].

Separate Experiments From Figures

A common pitfall in computational experiments is the direct generation of figures from experimental runs. I’m sure we’ve all written codes that do exactly this, but I urge you to instead save the raw data that can later be translated into a figure.

Of course, the desire to immediately generate a figure is quite natural. We often begin with ad hoc experiments to informally test our methods, and it is normal to want to visualize the results in some way. But these initial ad hoc experiments do become formal at some point, and I encourage you to save the data that you used to create your figure, perhaps even at a greater level of detail than the figure provides.

This type of separation allows you to recalculate metrics (e.g., median versus mean), adjust visual elements (e.g., axis labels), and generate subsets of results for different audiences (see Figure 1).
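As a minimal sketch of this separation (the file name, column names, and error values are illustrative assumptions, not taken from any real experiment), the experiment side writes one raw row per run and never aggregates, while a separate figure-side step reloads those rows and computes whichever summary statistic you decide on later:

```python
import csv
import statistics
import tempfile
from pathlib import Path


def save_raw_results(path, rows):
    """Experiment side: write one row per run, with no aggregation."""
    with open(path, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=["run", "method", "error"])
        writer.writeheader()
        writer.writerows(rows)


def summarize(path, stat=statistics.median):
    """Figure side: reload the raw rows and aggregate each method with any statistic."""
    by_method = {}
    with open(path, newline="") as f:
        for row in csv.DictReader(f):
            by_method.setdefault(row["method"], []).append(float(row["error"]))
    return {method: stat(errors) for method, errors in by_method.items()}


if __name__ == "__main__":
    # Made-up values for illustration only.
    rows = [
        {"run": 0, "method": "A", "error": 1.0},
        {"run": 1, "method": "A", "error": 2.0},
        {"run": 2, "method": "A", "error": 9.0},
        {"run": 0, "method": "B", "error": 3.0},
        {"run": 1, "method": "B", "error": 4.0},
    ]
    with tempfile.TemporaryDirectory() as tmp:
        raw = Path(tmp) / "raw_results.csv"
        save_raw_results(raw, rows)
        print(summarize(raw))                   # → {'A': 2.0, 'B': 3.5}
        print(summarize(raw, statistics.mean))  # → {'A': 4.0, 'B': 3.5}
```

Switching from median to mean is a one-argument change that requires no new experimental runs, because the raw per-run values were kept.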

For figure generation, I personally recommend tools like PGFPLOTS, which integrates well with LaTeX and helps ensure that visuals can be regenerated from saved data.
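If you use PGFPLOTS, one convenient pattern is to have your summary step write a whitespace-separated table whose first row holds column names, which a LaTeX command such as `\addplot table [x=n, y=median] {summary.dat};` can read directly. The helper below is a hypothetical sketch of such an exporter; the file and column names are placeholders.

```python
from pathlib import Path


def write_pgfplots_table(path, columns):
    """Write a whitespace-separated table whose first row holds the column names.

    columns maps each column name (no spaces) to a list of values of equal length.
    """
    names = list(columns)
    if not names or len({len(columns[n]) for n in names}) != 1:
        raise ValueError("need at least one column, all of the same length")
    lines = [" ".join(names)]
    # One output row per index; str() of a Python float is its shortest
    # round-trip representation, so no precision is lost.
    for values in zip(*(columns[n] for n in names)):
        lines.append(" ".join(str(v) for v in values))
    Path(path).write_text("\n".join(lines) + "\n")
```

For example, `write_pgfplots_table("summary.dat", {"n": [10, 20, 40], "median": medians})` produces a file that the `\addplot table` command above can plot; changing axis labels or styles then touches only the LaTeX source, never the experiment.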

Create Human-digestible Logs

In addition to saving data files for the production of figures, I advocate for saving detailed, human-digestible logs of your experiments by instrumenting your scripts and codes to print regular status messages. I address logs in more detail in the next point, but here I want to stress that you and your coauthors should be able to read through these records to better understand your computational experiment.
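A minimal sketch using Python's standard `logging` module, assuming you want the same timestamped status lines on screen and in a per-run file. The logger name, the file name, and the Newton iteration for the square root of 2 (a stand-in for a real experiment) are illustrative choices.

```python
import logging
import sys


def make_logger(name, logfile):
    """Return a logger that writes timestamped status lines to stdout and to logfile."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    # Close and drop handlers from any earlier call so lines are not duplicated.
    for old in list(logger.handlers):
        old.close()
        logger.removeHandler(old)
    fmt = logging.Formatter("%(asctime)s %(levelname)s %(message)s")
    for handler in (logging.StreamHandler(sys.stdout), logging.FileHandler(logfile)):
        handler.setFormatter(fmt)
        logger.addHandler(handler)
    logger.propagate = False  # keep messages out of the root logger
    return logger


if __name__ == "__main__":
    log = make_logger("newton_demo", "newton_demo.log")
    x = 1.0
    for it in range(5):
        x = 0.5 * (x + 2.0 / x)  # Newton step for sqrt(2)
        log.info("iter=%d x=%.15f abs_err=%.3e", it, x, abs(x - 2.0 ** 0.5))
```

Each line carries a timestamp and a plain-language description, so a coauthor can read the file without running any code.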

Ultimately, the logs serve as artifacts for your experiment that persist even if you can never reproduce it exactly. In large language model computations, for instance, floating-point addition is not strictly associative, so different parallel summation orders in different runs can yield slightly different final results.
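The non-associativity is easy to see even with three numbers. The sketch below adds the same values in two orders with an explicit loop, so the order of operations is exactly the one written; a parallel reduction that splits the work differently from run to run changes the order in the same way.

```python
def sequential_sum(values):
    """Add values strictly left to right, as a single thread would."""
    total = 0.0
    for v in values:
        total += v
    return total


if __name__ == "__main__":
    print(sequential_sum([0.1, 0.2, 0.3]))  # (0.1 + 0.2) + 0.3 → 0.6000000000000001
    print(sequential_sum([0.3, 0.2, 0.1]))  # (0.3 + 0.2) + 0.1 → 0.6
```

The two results differ only in the last bit, but such differences can compound over many iterations, which is why a log of what actually happened remains valuable.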

Log Details of Individual Runs

Besides being digestible by humans, your logs should include the necessary details to reproduce individual experiments. I highly recommend saving the random seed for every run, not just the first one. Otherwise, you might find yourself in a situation where you must complete 75 runs before you can redo the 76th.
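A minimal sketch of per-run seeding, assuming a hypothetical `toy_experiment` that stands in for your real code: a master generator draws a fresh seed for every run, each seed is logged next to its result, and any single run can then be redone from its logged seed alone.

```python
import random


def toy_experiment(seed):
    """Hypothetical stand-in for one run: average of 1000 uniform draws."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(1000):
        total += rng.random()
    return total / 1000


def run_all(base_seed, n_runs):
    """Run n_runs experiments, logging the seed used by each one."""
    master = random.Random(base_seed)
    log = []
    for run in range(n_runs):
        seed = master.randrange(2**32)  # fresh seed for this run
        log.append({"run": run, "seed": seed, "result": toy_experiment(seed)})
    return log


if __name__ == "__main__":
    log = run_all(base_seed=2025, n_runs=100)
    entry = log[75]  # the 76th run (0-based index 75)
    # Redo only that run from its logged seed; the first 75 runs are not repeated.
    assert toy_experiment(entry["seed"]) == entry["result"]
    print(entry)
```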

For this reason, each experimental run should produce a unique, identifiable log that includes the following:

- Git hashes for code and data (and diff logs if you don’t commit before every run)

- Random seeds

- Input parameters

- Fine-grained metrics like error after each iteration

- Timestamps

Use unique identifiers (e.g., script name plus timestamp) to name artifacts, which will enable spot-checking and ensure the traceability of each run. Ideally, these artifacts should become part of your repository, forming a complete record of your computational experiments.
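The following hypothetical helper sketches one way to write such a record as a JSON file named by script name plus timestamp. It assumes the `git` command-line tool is available; `git rev-parse HEAD` and `git diff HEAD` are standard Git commands, while the function and field names are illustrative rather than any established schema. Note that `git diff HEAD` does not capture untracked files.

```python
import json
import subprocess
from datetime import datetime, timezone
from pathlib import Path


def git_state(repo_dir="."):
    """Return (commit hash, diff against HEAD), or (None, None) if unavailable."""
    try:
        commit = subprocess.run(["git", "rev-parse", "HEAD"], cwd=repo_dir,
                                capture_output=True, text=True, check=True).stdout.strip()
        diff = subprocess.run(["git", "diff", "HEAD"], cwd=repo_dir,
                              capture_output=True, text=True, check=True).stdout
    except (OSError, subprocess.CalledProcessError):
        # git missing, not a repository, or no commits yet
        return None, None
    return commit, diff


def write_run_record(out_dir, script_name, seed, params, errors, repo_dir="."):
    """Write one uniquely named JSON record per run and return its path."""
    now = datetime.now(timezone.utc)
    run_id = f"{script_name}_{now.strftime('%Y%m%dT%H%M%S%fZ')}"
    commit, diff = git_state(repo_dir)
    record = {
        "run_id": run_id,
        "timestamp": now.isoformat(),
        "git_commit": commit,
        "git_diff": diff,  # uncommitted changes, if any
        "seed": seed,
        "params": params,
        "error_per_iteration": errors,
    }
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    path = out / f"{run_id}.json"
    path.write_text(json.dumps(record, indent=2))
    return path
```

In a script, you might call `write_run_record("outputs/logs", Path(__file__).stem, seed, params, errors)` at the end of each run; calling `git_state` again with the path of a separate data repository would record the data version as well.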

Use Scripts for Automation

Automation is your future self’s best friend. Scripts allow you to rerun experiments after code changes, perform parameter sweeps, incorporate feedback from collaborators and/or referees, and apply the same methods to new datasets or scenarios. They also make it easier to share your work with coauthors and reviewers. A well-organized set of scripts can preprocess raw input data, recreate individual runs, regenerate all runs, collate results, and generate figures.
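A minimal parameter-sweep driver might look like the following, where `run_experiment` is a hypothetical placeholder for your own code and the grid values and seeds are arbitrary. Because the script enumerates every combination and seed, rerunning the entire sweep after a code change or a referee request is a single command.

```python
import csv
import itertools
import random


def run_experiment(n, noise, seed):
    """Hypothetical experiment: mean absolute value of n Gaussian noise samples."""
    rng = random.Random(seed)
    return sum(abs(rng.gauss(0.0, noise)) for _ in range(n)) / n


def sweep(grid, seeds, out_path):
    """Run every combination of grid values and seeds; write one CSV row per run."""
    names = list(grid)
    with open(out_path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(names + ["seed", "error"])
        for values in itertools.product(*(grid[k] for k in names)):
            params = dict(zip(names, values))
            for seed in seeds:
                writer.writerow(list(values) + [seed, run_experiment(seed=seed, **params)])


if __name__ == "__main__":
    sweep({"n": [100, 1000], "noise": [0.1, 1.0]}, seeds=[11, 12, 13],
          out_path="sweep_results.csv")
```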

Separate and Organize Your Project Components

A typical computational project includes multiple components:

- Datasets: Inputs for your experiments, ideally with README files and scripts that describe the original data sources and preprocessing.

- Codes: Implementations of your algorithms, ideally reusable and well-documented with a potential life beyond the paper in question. You will likely also have other codes for methodology comparisons.

- Scripts: Scripts to perform experiments that are specific to the paper or study, often involving multiple runs and configurations.

- Outputs: Various outputs of the computational experiments, such as (i) logs: numerous human-readable summaries from individual experiments; (ii) raw data: machine-readable data files from individual experiments; and (iii) summaries: aggregated results used in figures and tables.

- Paper: A publication wherein the computational experiments act as supporting evidence for a certain finding.

Avoid the temptation to keep everything in a single folder. Instead, organize the materials and consider using multiple Git repositories if some components (e.g., datasets or software packages) are valuable beyond the current project. If nothing else, consider keeping the paper in its own repository, as this makes it much easier to link to the online Overleaf editor.
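As one possible starting point, a short script can create the same skeleton for every project. The directory names below are assumptions that mirror the components listed above, not a standard; components with a life beyond the project, such as the paper or a reusable dataset, could instead live in separate repositories.

```python
from pathlib import Path

# Hypothetical layout mirroring the project components described above.
LAYOUT = [
    "datasets",
    "codes",
    "scripts",
    "outputs/logs",       # human-readable summaries of individual runs
    "outputs/raw",        # machine-readable data from individual runs
    "outputs/summaries",  # aggregated results for figures and tables
    "paper",
]


def make_skeleton(root):
    """Create the project directories and a placeholder README for the datasets."""
    root = Path(root)
    for sub in LAYOUT:
        (root / sub).mkdir(parents=True, exist_ok=True)
    readme = root / "datasets" / "README.md"
    if not readme.exists():  # never overwrite an existing description
        readme.write_text("# Data sources\n\nDescribe original sources and preprocessing here.\n")
    return root
```

Calling `make_skeleton("my_project")` is safe to repeat, since existing directories and an existing README are left untouched.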

Share Your Code, Data, and Experimental Logs

Even if your code isn’t perfect, sharing it is invaluable. I highly recommend Randall LeVeque’s 2013 SIAM News article titled “Top Ten Reasons to Not Share Your Code (and why you should anyway)” [3]. In this piece, LeVeque develops an analogy between publishing code and publishing proofs of mathematical theorems. There are plenty of good reasons not to share a mathematical proof — for instance, it might be too ugly to show anyone else! Of course, we would never publish an important math theorem without a proof, and the same should be true for computational experiments.

LeVeque further contends that “[w]hatever state it is in, the code is an important part of the scientific record and often contains a wealth of details that do not appear in the paper, no matter how well the authors describe the method used” [3]. There is much to be learned even without running the code, and I heartily agree with LeVeque’s claim that the ability to examine code is typically more valuable than the ability to rerun it exactly.

I would also argue for the importance of sharing the input data and output logs of computational experiments. Too often, preprocessing of the input data is undocumented. Output logs are invaluable for determining the success (or failure) of experimental reproduction and can provide insights that the summary data might not capture.

Sharing codes, data, and experimental logs is beneficial for science and fosters transparency, collaboration, and scientific progress. But you don’t have to be noble! Sharing likewise encourages the broad citation of your own work because it allows others to understand your methods more deeply, build upon your research, and compare their techniques to yours.

Final Thoughts

These seven practices are not just about making your work reproducible — they’re about making it credible, collaborative, and impactful (see Figure 2). They help your coauthors validate results, enable your future self to revisit past experiments, and inspire trust in your findings as the foundation for further studies.

I presented these ideas during an invited talk at the 2025 SIAM Conference on Applications of Dynamical Systems in Denver, Colo., this past May, after which several attendees commented on the lack of value that is placed on extra work (in the short term) to ensure reproducibility and easy access to codes. As such, I would advocate for promotion letter writers to evaluate software contributions and stress the importance of such work. If you have benefited from the availability of someone else’s data, code, and results, be sure to provide appropriate citations and perhaps even send a note to acknowledge their influence on your own work.

Whether you’re a graduate student starting your first project or a seasoned researcher managing a large team, these principles can help you tame the chaos of computational experiments. Let’s make reproducibility not just an aspiration, but a habit.

1 Many thanks to Gautam Iyer of Carnegie Mellon University for introducing me to Codeberg.

References

[1] Chacon, S., & Straub, B. (2014). Pro Git (2nd ed.). New York, NY: Apress.

[2] Dubner, S.J. (Host), & Kulman, A. (Producer). (2024, January 10). Why is there so much fraud in academia? [Audio podcast episode]. In Freakonomics Radio. Renbud Radio. https://freakonomics.com/podcast/why-is-there-so-much-fraud-in-academia.

[3] LeVeque, R.J. (2013, April 2). Top ten reasons to not share your code (and why you should anyway). SIAM News, 46(3), p. 8.

About the Author

Tamara G. Kolda

Consultant, MathSci.ai

Tamara G. Kolda is a consultant under the auspices of her California-based company, MathSci.ai. She previously worked at Sandia National Laboratories. Kolda specializes in mathematical algorithms and computational methods for data science, especially tensor decompositions and randomized algorithms. She is a SIAM Fellow, co-founded and was editor-in-chief of the SIAM Journal on Mathematics of Data Science, and currently serves as SIAM’s Vice President for Publications and chair of the SIAM Activity Group on Equity, Diversity, and Inclusion.
