The Emerging Utility of Graphons in Applied Math

Graphs and networks are omnipresent in modern science. In their simplest form, graphs consist of three components: (i) A set of vertices, (ii) a set of edges that connect the vertices, and (iii) weights that are assigned to each edge and describe the strength of the connection between vertices. We utilize graphs to characterize almost any system with connections between two or more objects, such as social networks, chemical reactions, food webs, and neurons in the brain. The use of networks to understand complex processes in the brain has even led to theoretical advancements in neural networks, which form the foundation for the machine learning innovations that are currently transforming our society.

In applied mathematics, the question of robustness often arises in the context of networks. Robustness results for network problems help maintain the applicability of developed theories, as such results can guarantee that conclusions still hold under slight model variations that might originate from noisy measurements or small factors that are neglected during the modeling process. Traditional classifications of network robustness permit perturbations to a graph’s edge or weight structure, but what about robustness with respect to the addition or removal of vertices? For example, models of neural networks in the brain require graphs with many vertices, but the exact number of vertices is unknown. It would therefore be useful to have a theory that works for all sufficiently large graphs and accounts for some variation in the number of vertices — as long as the graphs adhere to a certain structure or generating rule.

Recent advances in graph theory have allowed applied mathematicians to tackle the problem of graph robustness over varying numbers of vertices. The main tool is a graphon: a symmetric function \(W:[0,1]^2 \to [0,1]\) that describes the limit of a sequence of graphs with an increasing amount of vertices that grow according to some rule. Even though they vary in size, we can consider sequences of graphs that converge to a graphon as members of the same family with shared structural characteristics.

Graph Families From Graphons

Graphons provide a rule for the generation of two distinct graph types: deterministic weighted graphs and simple random graphs. First, we fix \(n \geq 1\) and discretize the interval \([0,1]\) into evenly spaced points \(x_j = (j - 1)/n\) for \(k = 0,\dots,n\). The \(\{x_j\}_{j = 1}^n\) serve as \(n\) vertices of an undirected graph, to which we endow an edge structure by using a graphon in one of the following two ways:

- Weighted deterministic graphs: Between any two vertices \(x_j\) and \(x_k\), assign an edge of weight \(W(x_j,x_k)\)

- Simple random graphs: Between any two vertices \(x_j\) and \(x_k\), assign an undirected edge of weight \(1\) with probability \(W(x_j,x_k)\).

This rule implicitly assumes that there is no edge if \(W(x_j,x_k) = 0\).

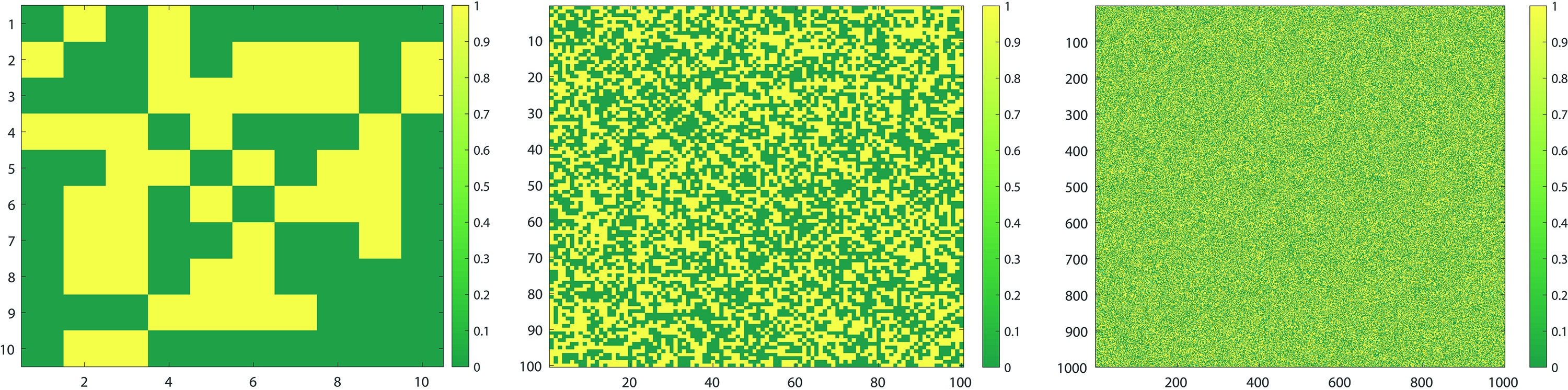

Since the deterministic graphs are simply a discretization of the original graphon, a familiarity with Riemann sums can provide a sense of intuition about their “convergence” to the generating graphon as \(n \to \infty\). Although the random graphs are quite different on the superficial level because their edge weights are entirely binary, we can similarly achieve convergence to the generating graphon as \(n \to \infty\) in a probabilistic sense. The convergence of graphs to graphons does not occur through typical function or vector norms, but rather through the convergence of the operator norm of the associated adjacency matrices. This operator convergence is advantageous, as it means that functional analytic properties of the graphon—such as the spectrum—approximate those of both the deterministic and random graphs that it generates for large \(n\). A proper mathematical formalism of these concepts is available in [2], but one can develop an intuition for the random graphs’ convergence to their graphons by examining the pixel plots of the associated adjacency matrices. For example, Figure 1 contains pixel plots for random graphs from the Erdős–Rényi graphon \(W(x,y) = 1/2\) for \(n = 10,100,\) and \(1000\). The visual blending of colors in the plot for large \(n\) resembles the uniform graphon that generated it.

A History of Coupled Oscillators

Graphs and dynamics have long come together in the study of coupled oscillators. In this setting, theoretical results from graph theory help researchers understand the synchronization behavior of collective oscillation patterns. In fact, previous synchronization studies reveal a longstanding presence of graphons in the theory of coupled oscillators — often without explicit mention [6]. While early applications focused primarily on graphons as limits of deterministic graphs, research within the last decade has employed graphons to understand the dynamics of coupled oscillators on random graphs [3]. The result is a mature and well-developed research program that uses graphons to understand coupled oscillators on large, random networks.

Predicting Patterns on Random Networks

Informed by the success of graphons in the study of coupled oscillators, we have initiated an investigation into pattern formation on random networks [1]. This work seeks to understand the emergence of inhomogeneous patterns from featureless background states—i.e., Turing bifurcations—on random networks. We utilize converging sequences of random graphs that are generated from a graphon to predict (up to small variations) the emerging small-amplitude patterns in networked dynamical systems on large graphs. By applying techniques from bifurcation theory to a limiting deterministic graphon equation, our results ultimately provide information for high-probability pattern formation across large random networks. We are currently conducting a follow-up investigation into the persistence of patterned solutions over large random networks that are separate from the bifurcation regime; this is joint work with Ph.D. student Jackson Williams from George Mason University, who presented a related poster at the 2023 SIAM Conference on Applications of Dynamics Systems in Portland, Ore., this May.

Graphons and Neural Networks

Data scientists also utilize graphons to quantify the robustness of graph neural networks (GNNs). GNNs are variations of standard neural networks that process data that can be represented on a graph and thus contain layers that incorporate graph structure. One important property of GNNs is transferability — the ability to transport a trained GNN between different graphs and maintain performance without retraining it. A simple example stems from recommender systems. Consider a platform that organizes users into a graph structure that weights connections according to their similarities. The goal is to use a GNN to leverage knowledge of one user’s interests and provide recommendations for other similar users. The number of users constantly varies as some sign up for the platform while others end their subscriptions. We certainly do not wish to retrain the network every time the number of users changes; instead, we simply want to train a single GNN that can be transferred between the user networks with varying numbers of vertices.

A 2020 study aimed to employ graphons to capture GNNs’ transferability [5]. This work provides a GNN architecture and proves bounds on its output between deterministic graphs that were generated by the same graphon — importantly, these bounds vanish as the graph grows in size. The researchers also demonstrate performance on movie recommendation data and citation networks. A recent collaboration sought to strengthen these results by presenting an explicit two-layer GNN architecture that can be transferred—without retraining—between sequences of graphs that converge to a graphon [4]. In particular, we allow for sequences of both deterministic and random graphs and demonstrate that the presence of a limiting graphon helps to overcome the curse of dimensionality that often arises in similar theoretical outcomes for neural networks. This effort provides the first analytical results of the minimum network sizes for transferable GNNs that achieve a fixed output within a desired error tolerance, along with an explicit architecture that does not require any training.

Outlook

Graphons are only just beginning to find their niche within applied mathematics. These powerful tools capture the similarities of differently-sized networks and retain a strongly developed underlying theory that practitioners can exploit to better understand problems in dynamics, pattern formation, and neural network approximation. Further applications of graphons have arisen in game theory and other fields that value network robustness.

References

[1] Bramburger, J., & Holzer, M. (2023). Pattern formation in random networks using graphons. SIAM J. Math. Anal., 55(3), 2150-2185.

[2] Lovász, L. (2012). Large networks and graph limits. In American Mathematical Society colloquium publications (Vol. 60). Providence, RI: American Mathematical Society.

[3] Medvedev, G.S. (2014). The nonlinear heat equation on W-random graphs. Arch. Ration. Mech. Anal., 212, 781-803.

[4] Neuman, A.M., & Bramburger, J.J. (2023). Transferability of graph neural networks using graphon and sampling theories. Preprint, arXiv:2307.13206.

[5] Ruiz, L., Chamon, L.F.O., & Ribeiro, A. (2020). Graphon neural networks and the transferability of graph neural networks. In NIPS’20: Proceedings of the 34th international conference on neural information processing systems (pp. 1702-1712). Vancouver, BC: Curran Associates Inc.

[6] Wiley, D.A., Strogatz, S.H., & Girvan, M. (2006). The size of the sync basin. Chaos, 16(1), 015103.

About the Author

Jason Bramburger

Assistant professor, Concordia University

Jason Bramburger is an assistant professor of mathematics at Concordia University in Canada. His research focuses on dynamical systems in the context of pattern formation, wave propagation, spatiotemporal chaos, and data science.

Stay Up-to-Date with Email Alerts

Sign up for our monthly newsletter and emails about other topics of your choosing.