The NIST Big Data Interoperability Framework

The term “big data” describes the massive amounts of data that are available in today's networked, digitized, sensor-laden, and information-driven world. Such data can overwhelm traditional technical approaches, and its continued growth is outpacing scientific and technological developments in data analytics. To help advance ideas in its Big Data Standards Roadmap, the National Institute of Standards and Technology (NIST) created an open, community-based Big Data Public Working Group (NBD-PWG). The group aims to develop a secure reference architecture that is both vendor-neutral and technology- and infrastructure-agnostic. The goal is to enable stakeholders (data scientists, researchers, etc.) to perform analytics processing on their data sources without worrying about the underlying computing environment.

After a multi-year effort, NIST produced nine volumes of special publications under the NIST Big Data Interoperability Framework (NBDIF), a collaboration between NIST and more than 90 experts from over 80 industrial, academic, and governmental organizations. Figure 1 displays the relationship between the NBDIF volumes, all of which have specific focuses.

Big Data Definitions and Taxonomies

Common terms and definitions are critical building blocks for new technologies. This is especially true in the context of big data, which both technical and nontechnical communities tend to describe with “V” terms: volume, velocity, variety, variability, volatility, veracity, visualization, and value. Providing a consensus-based vocabulary allows stakeholders to establish a common understanding of big data. Therefore, NBDIF has defined several terms that are important to the big data industry:

- Big data consists of extensive datasets—primarily in the characteristics of volume, velocity, variety, and/or variability—that require a scalable architecture for efficient storage, manipulation, and analysis

- Big data paradigm consists of the distribution of data systems across horizontally coupled, independent resources to achieve the scalability that is needed to efficiently process extensive datasets

- Data science is the methodology for the synthesis of useful knowledge directly from data through either a process of discovery or hypothesis formulation and hypothesis testing

- Data scientist is a practitioner who has sufficient knowledge in the overlapping regimes of business needs, domain knowledge, analytical skills, and software and systems engineering to manage the end-to-end data processes in the analytics life cycle.
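The "big data paradigm" definition above can be made concrete with a small sketch. The following is only an illustration, assuming hash-based partitioning as the distribution scheme; the sensor records, the node count, and the function names are hypothetical and do not come from NBDIF. Each shard stands in for one independent resource that can process its share of the data without coordinating with the others.

```python
import zlib
from collections import defaultdict


def assign_node(key: str, num_nodes: int) -> int:
    """Map a record key to a node index using a stable hash (crc32)."""
    return zlib.crc32(key.encode("utf-8")) % num_nodes


def partition(records, num_nodes):
    """Split (key, value) records into one shard per independent node."""
    shards = defaultdict(list)
    for key, value in records:
        shards[assign_node(key, num_nodes)].append((key, value))
    return shards


def local_sum(shard):
    """Work a node can do on its own shard without contacting other nodes."""
    return sum(value for _, value in shard)


# Hypothetical sensor readings: (sensor id, measurement).
records = [(f"sensor-{i}", i) for i in range(1000)]

shards = partition(records, num_nodes=4)
partials = [local_sum(shard) for shard in shards.values()]
total = sum(partials)  # combine the per-node partial results
print(total)
```

Adding capacity in this scheme means raising `num_nodes` rather than enlarging a single machine, which is the sense of "horizontally coupled" scaling in the definition.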

To fully comprehend the effect of big data, one should examine the granularity of the following components: data elements, related data elements that are grouped into a record that represents a specific entity or event, records that are collected into a dataset, and multiple datasets. Understanding big data characteristics is challenging because the use of parallel big data architectures is based on the interplay of performance, cost, and time constraints on end-to-end system processing. Four fundamental drivers determine the presence of a big data problem:

- Volume refers to extensive datasets available for analysis to extract valuable information

- Velocity refers to the measure of the rate of data flow, typically in real-time-like streaming data

- Variety refers to the need to analyze data from multiple repositories, domains, or types

- Variability refers to changes in a dataset, whether in the data flow rate, format/structure, and/or volume, thus impacting analytic processing.

Big Data Use Cases and Requirements

A use case is a typical high-level application from which one can extract requirements or compare usage across fields. NIST created a use case template to develop a consensus list of big data requirements across all stakeholders and used it to evaluate the requirements of 51 submitted use cases. The group then extracted 437 specific requirements, aggregated them into 35 general requirements, and identified seven categories: data source, data transformation, capability, data consumer, security and privacy, life cycle management, and “other.” These seven categories helped formulate the big data reference architecture.

Big Data Reference Architecture and Interfaces

The NIST Big Data Reference Architecture (NBDRA) is a high-level conceptual model that aims to facilitate the understanding of big data’s operational intricacies. It does not represent the architecture of a specific big data system; instead, NBDRA is a tool for describing, discussing, and developing system-specific architectures via a common framework of reference. The model is not tied to any specific vendor products, services, or reference implementations, nor does it define prescriptive solutions that inhibit innovation.

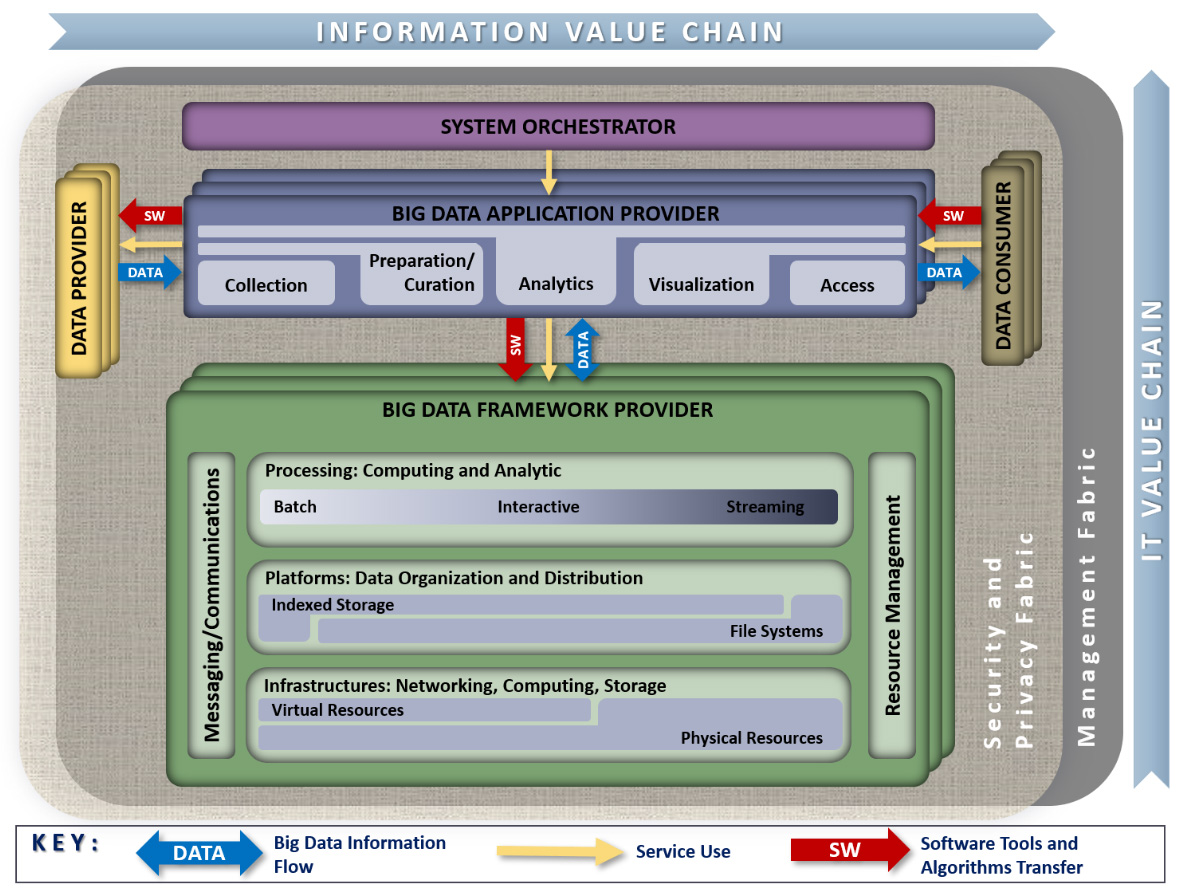

NBD-PWG identified NBDRA's key architectural components based on the requirements derived from the use cases plus nine industry submissions of big data architectures. The aim was a reference architecture that is vendor-neutral and technology- and infrastructure-agnostic. NBDRA comprises five logical functional components that are connected by interoperability interfaces. Two fabrics envelop these components and represent the interwoven nature of both resource management and security and privacy with all five components (see Figure 2). These fabrics provide services and functionality to the five main roles in areas that are specific to any big data solution.

NBDIF provides a well-defined interface specification to support the application programming interfaces between NBDRA components and to provision big data systems with hybrid and multiple frameworks. The service specification follows a resource-oriented architecture and is based on OpenAPI, which provides a general form of interface description that is independent of the framework or programming language in which the services are implemented. As a result, NBDRA can be instantiated to support multiple big data architectures across different application domains.
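To give a sense of what a resource-oriented, language-independent interface description looks like, here is a minimal sketch of an OpenAPI-style document built as a plain Python dictionary. The service title, the `/datasets/{dataset_id}` path, and the operation are hypothetical and are not taken from the NBDIF interface specification. The only part drawn from OpenAPI itself is the set of top-level fields that OpenAPI 3.0 requires (`openapi`, `info`, `paths`).

```python
import json

# Hypothetical service description; the title, path, and operation are
# illustrative assumptions, not part of the NBDIF interface specification.
spec = {
    "openapi": "3.0.3",
    "info": {"title": "Example dataset service", "version": "0.1.0"},
    "paths": {
        "/datasets/{dataset_id}": {
            "get": {
                "summary": "Retrieve metadata for one dataset",
                "parameters": [
                    {
                        "name": "dataset_id",
                        "in": "path",
                        "required": True,
                        "schema": {"type": "string"},
                    }
                ],
                "responses": {"200": {"description": "Dataset metadata"}},
            }
        }
    },
}

HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def list_operations(document):
    """Return sorted (METHOD, path) pairs declared in an OpenAPI-style document."""
    operations = []
    for path, item in document.get("paths", {}).items():
        for method in item:
            if method in HTTP_METHODS:
                operations.append((method.upper(), path))
    return sorted(operations)


def missing_top_level_fields(document):
    """Check only the three top-level fields that OpenAPI 3.0 requires."""
    return [name for name in ("openapi", "info", "paths") if name not in document]


print(json.dumps(spec, indent=2))
print(list_operations(spec))
```

Because the description is plain data, the same document can be serialized to JSON or YAML and consumed by tooling in any language, which is what makes this style of specification independent of the implementing framework.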

Big Data Security and Privacy

The security and privacy fabric is a cross-cutting, fundamental aspect of NBDRA. It represents the intricate, interconnected nature and ubiquity of security and privacy throughout NBDRA’s main components. Common security and privacy concerns for all components include authentication, access rights, traceability, accountability, threat and vulnerability management, and risk management.

Additional security and privacy considerations exist at the interfaces between and within NBDRA components, which include the following:

- Interface between the data provider and big data application provider

- Interface between the big data application provider and data consumer

- Interface between the big data application provider and big data framework provider

- Internal interface within the big data framework provider

- Interface inside the system orchestrator.
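The interfaces listed above can be written down as a small data structure, which makes it easy to ask which security and privacy touchpoints involve a given component. This is a sketch only: the component names follow the list above, while the enum, the constant, and the helper functions are hypothetical names introduced for illustration and are not defined by NBDIF.

```python
from enum import Enum


class Component(Enum):
    SYSTEM_ORCHESTRATOR = "system orchestrator"
    DATA_PROVIDER = "data provider"
    APPLICATION_PROVIDER = "big data application provider"
    FRAMEWORK_PROVIDER = "big data framework provider"
    DATA_CONSUMER = "data consumer"


# Each entry pairs the two endpoints of one interface from the list above.
# An entry that names the same component twice marks an internal interface.
SECURITY_INTERFACES = [
    (Component.DATA_PROVIDER, Component.APPLICATION_PROVIDER),
    (Component.APPLICATION_PROVIDER, Component.DATA_CONSUMER),
    (Component.APPLICATION_PROVIDER, Component.FRAMEWORK_PROVIDER),
    (Component.FRAMEWORK_PROVIDER, Component.FRAMEWORK_PROVIDER),
    (Component.SYSTEM_ORCHESTRATOR, Component.SYSTEM_ORCHESTRATOR),
]


def interfaces_touching(component):
    """Return every listed interface that has the component as an endpoint."""
    return [pair for pair in SECURITY_INTERFACES if component in pair]


def describe(pair):
    """Render one interface as readable text."""
    first, second = pair
    if first is second:
        return f"internal to the {first.value}"
    return f"{first.value} <-> {second.value}"


for pair in interfaces_touching(Component.APPLICATION_PROVIDER):
    print(describe(pair))
```

In this listing the big data application provider appears in three of the five interfaces, so it is the component with the most external security and privacy touchpoints.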

Security and privacy measures are becoming increasingly critical with the escalation of big data generation and utilization via public cloud infrastructure that is built with the help of various hardware, operating systems, and analytical software. The surge in streaming cloud technology necessitates extremely rapid responses to security issues and threats [1].

Big Data Standards Roadmap

NIST’s Big Data Standards Roadmap provides a vision for the future of big data. It assesses the current technology landscape and identifies available standards to support present and future big data domain needs from an architecture and functionality perspective. NBDIF has established a set of criteria that evaluates whether standards meet the NBDRA general requirements:

- Facilitate interfaces between NBDRA components

- Facilitate the handling of data with one or more big data characteristics

- Represent a fundamental function that one or more NBDRA components must implement.

As most standards represent some form of interface between components, one can identify a partial collection of such standards based on the NBDRA components.

Big Data Adoption and Modernization

The adoption of big data systems is not yet uniform throughout all industries or sectors, and some common challenges across all industries could further delay this process. A report by the U.S. Bureau of Economic Analysis and McKinsey Global Institute [3] suggests that the most apparent barrier to leveraging big data is access to the data itself. The report identifies a definite relationship between the ability to access data and the potential to capture economic value across all sectors and industries.

Strategies to modernize big data may include cost estimation, workforce allocation, project prioritization, technology selection, transition plans, road mapping, and implementation timelines. In addition, those who are making governance decisions about technology should pay special attention to business models and data requirements. The resulting resolutions can help inform future strategies.

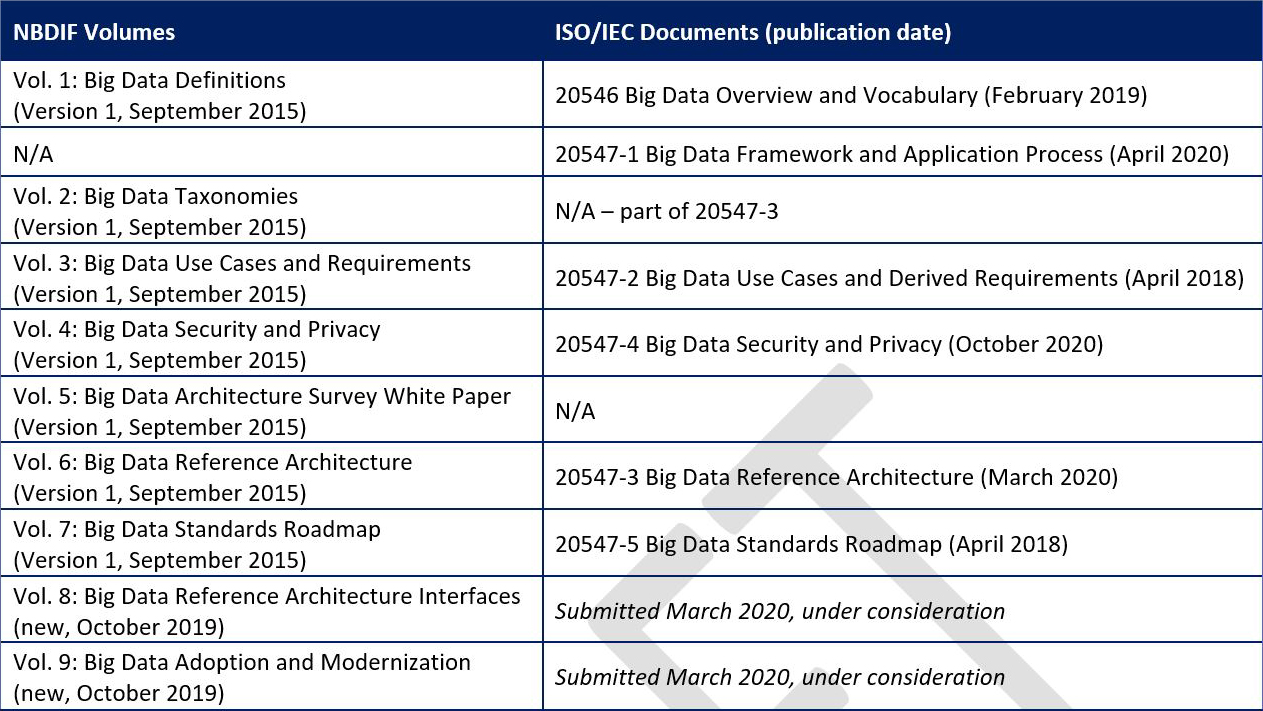

NBDIF as an International Standard

In late 2015, NIST submitted the initial version of seven volumes of NBDIF to the Joint Technical Committee of the International Organization for Standardization and the International Electrotechnical Commission (ISO/IEC JTC 1) for standards development consideration. More than 200 experts from over 25 countries reviewed and contributed to the content. As a result, five of the seven original NBDIF volumes are now part of the ISO/IEC standards. Figure 3 depicts the mapping between NBDIF volumes and ISO/IEC documents.

NBD-PWG Next Steps

With big data’s compound annual growth rate reported at 61 percent and its ever-increasing deluge of information, the collective sum of world data is projected to grow from 33 zettabytes (ZB; 1 ZB = \(10^{21}\) bytes) in 2018 to 175 ZB by 2025 [2]. Extracting insight and knowledge from such a rich source of information requires analysis at a massive scale.
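The growth figures above can be checked with a short calculation. The sketch below assumes annual compounding over the seven years from 2018 to 2025; the function names are illustrative. It computes the growth rate implied by the two endpoints and, separately, what 33 ZB would become if it compounded at the quoted 61 percent.

```python
def implied_cagr(start, end, years):
    """Compound annual growth rate that takes `start` to `end` in `years` years."""
    return (end / start) ** (1 / years) - 1


def project(start, rate, years):
    """Value after compounding `start` at `rate` per year for `years` years."""
    return start * (1 + rate) ** years


years = 2025 - 2018
rate = implied_cagr(33, 175, years)

print(f"Implied annual growth, 33 ZB to 175 ZB: {rate:.1%}")
print(f"33 ZB compounded at 61% for {years} years: {project(33, 0.61, years):.0f} ZB")
```

Under this assumption the two endpoints imply growth of about 27 percent per year, while compounding at 61 percent would far exceed 175 ZB by 2025. The 61 percent figure quoted from [2] therefore presumably rests on a different basis than simple annual compounding between these two endpoints.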

NBD-PWG is exploring how best to extend NBDIF to package scalable analytics as services in order to keep pace with rapid information growth. Regardless of the underlying computing environment, these services would be reusable, deployable, and operational for big data analytics, high-performance computing, and artificial intelligence and machine learning applications.

References

[1] Cloud Security Alliance. (2013, April). Expanded top ten big data security and privacy challenges (Big Data Working Group). Retrieved from https://downloads.cloudsecurityalliance.org/initiatives/bdwg/Expanded_Top_Ten_Big_Data_Security_and_Privacy_Challenges.pdf.

[2] Coughlin, Tom. (2018, November 27). 175 Zettabytes by 2025. Forbes. Retrieved from https://www.forbes.com/sites/tomcoughlin/2018/11/27/175-zettabytes-by-2025/#587370055459.

[3] Manyika, J., Chui, M., Brown, B., Bughin, J., Dobbs, R., Roxburgh, C., & Byers, A.H. (2011, May 1). Big data: The next frontier for innovation, competition, and productivity. McKinsey Global Institute. Retrieved from https://www.mckinsey.com/business-functions/mckinsey-digital/our-insights/big-data-the-next-frontier-for-innovation.

About the Author

Wo Chang

Digital Data Advisor, National Institute of Standards and Technology (NIST) Information Technology Laboratory

Wo Chang is the digital data advisor for the National Institute of Standards and Technology (NIST) Information Technology Laboratory. His responsibilities include promoting a vital and growing big data community at NIST with external stakeholders in commercial, academic, and governmental sectors.
