The Operator is the Model

Modeling of physical processes is the art of creating mathematical expressions that have utility for prediction and control. Historically, such models—like Isaac Newton’s dynamical models of gravity—relied on sparse observations. The late 20th and early 21st centuries have witnessed a revolutionary increase in the availability of data for modeling purposes. Indeed, we are in the midst of the sensing revolution, where the word “sensing” is used in the broadest meaning of data acquisition. This proliferation of data has caused a paradigm shift in modeling. Researchers now often use machine learning (ML) models (under the umbrella of artificial intelligence) to analyze and make sense of data, as evidenced by the explosive popularity of large language models that rely on deep neural network technology. Because these models are typically vastly overparametrized (i.e., the number of weights is massive, in the billions or even trillions), an individual weight does not mean much. To guarantee efficient human-machine correspondence, we must extract human-interpretable models through which we can make our own sense of the data.

Koopman operator theory (KOT) has recently emerged as the primary candidate for this task. Its key paradigm is that the operator is the model [9, 10]. Namely, KOT assumes the existence of a linear operator \(U\) (essentially an infinite-dimensional matrix) such that any observation \(f\) of the system's dynamics enables prediction of the next observation \(f^+\) via the equation

\[f^+=Uf,\tag1\]

where \(f\) is a function on some underlying state space \(M\). Modelers must then find a finite number of observables that are useful for prediction and control. Rather than ask, “If position and momentum are observable, what equations describe their dynamical evolution?”, we instead inquire, “Given the available data, what observables parsimoniously describe the dynamics?” This change of setting, from dynamics on the state space to dynamics on the space of observables \(\mathcal{O}\), inspired a new modeling architecture that takes \(\mathcal{O}\), rather than the state or phase space, as its template.
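A minimal Python sketch of equation (1), assuming a hypothetical nonlinear map \(T\) of our own choosing: \(U\) is implemented as composition, \((Uf)(x)=f(T(x))\), and a numerical check confirms that \(U\) acts linearly on observables even though \(T\) itself is nonlinear.

```python
import math

def koopman(T):
    """Return the Koopman (composition) operator U for the map T: (U f)(x) = f(T(x))."""
    def U(f):
        return lambda x: f(T(x))
    return U

def T(x):
    """Hypothetical nonlinear map on the plane, chosen only for illustration."""
    x1, x2 = x
    return (0.9 * x1, 0.5 * x2 + math.sin(x1))

U = koopman(T)

# Two observables and a linear combination of them.
f = lambda x: x[0] ** 2
g = lambda x: x[0] * x[1]
a, b = 2.0, -3.0
h = lambda x: a * f(x) + b * g(x)

x0 = (1.2, -0.7)
lhs = U(h)(x0)                      # U applied to the combination
rhs = a * U(f)(x0) + b * U(g)(x0)   # combination of U applied to each observable
print(abs(lhs - rhs) < 1e-12)       # True: U is linear although T is not
```

Evaluating \(Uf\) at the current state gives the observation \(f^+\) at the next state, which is exactly the prediction that equation (1) describes.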

The resulting approach finds use in a variety of applied contexts, such as fluid dynamics, autonomy, power grid dynamics, neuroscience, and soft robotics. The theory relies on a beautiful combination of operator-theoretic methods, geometric dynamical systems, and ML techniques.

History

Driven by the success of the operator-based framework in quantum theory, Bernard Koopman proposed in the 1930s to treat classical mechanics in a similar way; he suggested using the spectral properties of the composition operator that is associated with the evolution of a dynamical system [5]. But it was not until the 1990s and 2000s that researchers realized the potential for wider applications of the Koopman operator-theoretic approach [10]. In the past decade, applications have continued to multiply. Earlier work emphasized the use of Koopman theory to find finite-dimensional models from data [9]. These models exist on invariant subspaces of the operator that are spanned by eigenfunctions. Finding an eigenfunction \(\phi\) of the operator associated with a discrete-time, possibly nonlinear process yields a reduced model of the process whose dynamics are governed by \(\phi^+=\lambda \phi\); the result is thus a potentially reduced-order, but linear, model of the dynamics. The level sets of the eigenfunction on the original state space yield geometrically important objects like invariant sets, isochrons, and isostables [8]. This outcome led to the realization that geometrical properties can be learned from data via the computation of spectral objects, thus initiating a strong connection between the ML and dynamical systems communities that continues to grow. The key notion that drives these developments is the idea of representing a dynamical system as a linear operator on a typically infinite-dimensional space of functions.
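To illustrate \(\phi^+=\lambda\phi\), consider a toy example of our own choosing (not taken from this article): the hypothetical map \(T(x_1,x_2)=(\lambda x_1,\ \mu x_2+c x_1^2)\). Direct substitution gives \(\phi_2(T(x))=\mu x_2+(c-b\lambda^2)x_1^2\) for \(\phi_2=x_2-b x_1^2\), which equals \(\mu\phi_2(x)\) when \(b=c/(\lambda^2-\mu)\). So \(\phi_1=x_1\) and \(\phi_2\) are eigenfunctions with eigenvalues \(\lambda\) and \(\mu\), and the zero level set of \(\phi_2\) (the parabola \(x_2=b x_1^2\)) is an invariant set. The sketch below checks this numerically for illustrative parameter values.

```python
lam, mu, c = 0.9, 0.5, 1.0          # illustrative parameters; requires lam**2 != mu
b = c / (lam ** 2 - mu)

def T(x):
    """Hypothetical nonlinear map T(x1, x2) = (lam*x1, mu*x2 + c*x1**2)."""
    x1, x2 = x
    return (lam * x1, mu * x2 + c * x1 ** 2)

def phi1(x):
    return x[0]                      # eigenfunction with eigenvalue lam

def phi2(x):
    return x[1] - b * x[0] ** 2      # eigenfunction with eigenvalue mu

# Check phi(T(x)) = eigenvalue * phi(x) at a few sample points.
for x in [(1.0, 2.0), (-0.3, 0.8), (2.5, -1.1)]:
    assert abs(phi1(T(x)) - lam * phi1(x)) < 1e-9
    assert abs(phi2(T(x)) - mu * phi2(x)) < 1e-9

# Along a trajectory, phi2 evolves by pure scaling, even though x2 does not.
x, vals = (1.0, 2.0), []
for _ in range(6):
    vals.append(phi2(x))
    x = T(x)
print([round(v, 4) for v in vals])

# The zero level set x2 = b*x1**2 is invariant: a point on it is mapped back onto it.
p = (0.7, b * 0.7 ** 2)
print(abs(phi2(T(p))) < 1e-12)       # True
```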

However, it is interesting to invert this perspective and start from the operator rather than the state-space model: \(U\) is a property of the system, but does it have a finite-dimensional (linear or nonlinear) representation? We formalized the concept of a dynamical system representation in 2021 [10]. Instead of starting with the model and constructing the operator, we construct a finite-dimensional linear or nonlinear model from the operator. Doing so facilitates the study of systems with a priori unknown physics, such as those in soft robotics [2, 4].

Operator Representations

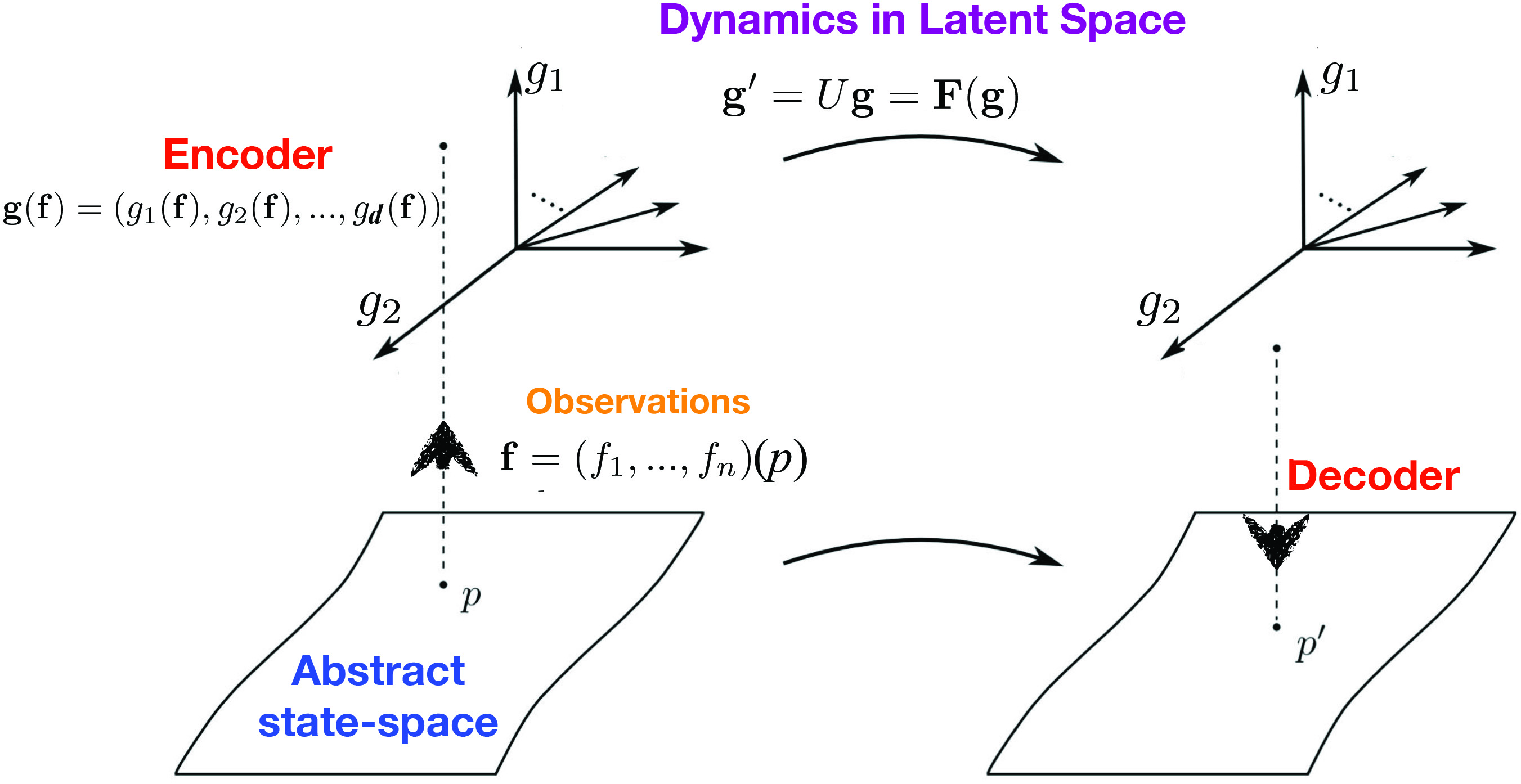

The modeling exercise usually begins with a catalogue of available observations in a vector \(\mathbf{f}=(f_1,\dots,f_n)\). We can organize \(n\) different streams of data into the \(n\times m\) matrix \([\mathbf{f}(1),\dots,\mathbf{f}(m)]\), where \(m\) is the number of “snapshots” of observations (in ML parlance, the observables are “features”). For simplicity, we assume that these snapshots are taken at regular time intervals and organized sequentially into columns. Assuming that the dynamics evolve on some underlying state space \(M\) (which we might not know) according to a potentially unknown mapping \(\mathbf{x}(k+1)=T(\mathbf{x}(k))\), the Koopman (composition) operator \(U\) is defined by \(U\mathbf{f}=\mathbf{f}\circ T\). Then \(\mathbf{f}(k+1)=U\mathbf{f}(k)\). Is there an \(n\times n\) matrix \(A\) such that \(U\mathbf{f}=A\mathbf{f}\)? This is indeed the case when \(\mathbf{f}\) lies within the span of \(n\) (generalized) eigenfunctions of \(U\) [10]. Methodologies to find eigenfunctions include spectral methods [9] and (extended) dynamic mode decomposition [6, 13]. We could also ask a more general question: Are there a map \(\mathbf{F}:\mathbb{C}^d\rightarrow \mathbb{C}^d\) and observables (functions) \(\mathbf{g}:X\rightarrow \mathbb{C}^d\) such that
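A bare-bones least-squares sketch of the question \(U\mathbf{f}=A\mathbf{f}\), in the spirit of dynamic mode decomposition [6, 13] but not a full implementation of either method. It assumes NumPy and a hypothetical map \(T(x_1,x_2)=(\lambda x_1,\ \mu x_2+c x_1^2)\). The observables \(\mathbf{f}=(x_1,x_2,x_1^2)\) span three eigenfunctions of \(U\) (\(x_1\), \(x_1^2\), and \(x_2-b x_1^2\) for a suitable constant \(b\)), so an exact \(3\times 3\) matrix \(A\) exists. Fitting \(Y\approx AX\) on the shifted snapshot matrices recovers it, along with the eigenvalues \(\lambda\), \(\mu\), and \(\lambda^2\).

```python
import numpy as np

lam, mu, c = 0.9, 0.5, 1.0   # hypothetical system parameters

def T(x):
    """Hypothetical nonlinear map x(k+1) = T(x(k))."""
    return np.array([lam * x[0], mu * x[1] + c * x[0] ** 2])

def observables(x):
    """Observation vector f = (x1, x2, x1^2), so n = 3."""
    return np.array([x[0], x[1], x[0] ** 2])

# Collect m sequential snapshots as the columns of the n x m matrix [f(1), ..., f(m)].
m = 12
x = np.array([1.0, 2.0])
snapshots = []
for _ in range(m):
    snapshots.append(observables(x))
    x = T(x)
data = np.column_stack(snapshots)         # shape (3, m)

# Pair each snapshot with its successor and solve Y ~= A X in the least-squares sense.
X, Y = data[:, :-1], data[:, 1:]
A = np.linalg.lstsq(X.T, Y.T, rcond=None)[0].T

A_exact = np.array([[lam, 0.0, 0.0],
                    [0.0, mu, c],
                    [0.0, 0.0, lam ** 2]])
print(np.allclose(A, A_exact, atol=1e-6))      # True
print(np.sort(np.linalg.eigvals(A).real))      # approximately [0.5, 0.81, 0.9]
```

With real, noisy data or observables that do not span an invariant subspace, the same fit only yields an approximation of \(U\) restricted to the chosen observables.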

\[U\mathbf{g}=\mathbf{F}(\mathbf{g}),\tag2\]

where \(X\) is some latent space and \(\mathbf{g}=\mathbf{g}(\mathbf{f})\)? Typically, \(d\geq n\). A simple example of finding a nonlinear representation of the Koopman operator is available in [11].

Figure 1 provides a graphical representation of the modeling process architecture. If we take our original observables \(\mathbf{f}\) and set \(\mathbf{g}=\mathbf{f}\), it may be impossible to find an \(\mathbf{F}\) that satisfies \((2)\). In this case, the observations do not provide a “closure”: we cannot uniquely predict the next observations from the current ones. A more sophisticated “embedding” \(\mathbf{g}\), however, might do the job. Interestingly, this architecture is similar to the transformer architecture of large language models [11].
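The closure problem, and its cure by a richer embedding, shows up in a small sketch that uses the Hénon map as a hypothetical example (our choice, not the article's). When only \(f=x\) is observed (\(n=1\)), two states with the same \(x\) can have different successors, so no \(F\) with \(f^+=F(f)\) exists. Because the hidden coordinate satisfies \(y(k)=b\,x(k-1)\), the delay embedding \(\mathbf{g}(k)=(x(k),x(k-1))\), with \(d=2\geq n\), closes under a nonlinear \(\mathbf{F}\).

```python
a, b = 1.4, 0.3                     # classic Henon parameters, used only as an example

def T(state):
    """Henon map on the hidden state (x, y)."""
    x, y = state
    return (1.0 - a * x ** 2 + y, b * x)

def observe(state):
    """Only f = x is measured (n = 1)."""
    return state[0]

# No closure for g = f: equal observations, yet different next observations.
s1, s2 = (0.5, 0.0), (0.5, 0.2)
print(observe(s1) == observe(s2), observe(T(s1)) == observe(T(s2)))   # True False

# Delay embedding g(k) = (x(k), x(k-1)) closes with a nonlinear F (d = 2),
# because y(k) = b * x(k-1) for k >= 1.
def F(g):
    g1, g2 = g
    return (1.0 - a * g1 ** 2 + b * g2, g1)

# Record observations generated by the true (hidden-state) system.
state = (0.0, 0.0)
xs = []
for _ in range(20):
    xs.append(observe(state))
    state = T(state)

# One-step predictions from the embedding reproduce the observed sequence.
errors = [abs(F((xs[k], xs[k - 1]))[0] - xs[k + 1]) for k in range(1, len(xs) - 1)]
print(max(errors) < 1e-12)          # True
```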

The problem of finding \((\mathbf{F},\mathbf{g})\) in \((2)\) is called the representation eigenproblem [10]. A rigorous result shows how the nature of the representation depends on the spectrum of the Koopman operator: finite linear representations are possible if the operator has a discrete spectrum, while finite nonlinear representations (which pertain to infinite-dimensional invariant subspaces) are needed when the spectrum of the operator is continuous [10].

One approach to solving the representation problem uses standard neural network architectures to compute

\[(\beta^*,\gamma^*)=\operatorname*{arg\,min}_{\beta,\gamma}\|\mathbf{g}_\beta(k+1)-\mathbf{F}_\gamma(\mathbf{g}_\beta(k))\|, \tag3\]

where some or all of the components \(g_j\) and \(F_k\) are parametrized by neural networks with weights \(\beta\) and \(\gamma\), respectively, and the error is accumulated over all available snapshot pairs. This approach can be combined with the concept of “parenting” in learning, in which domain experts suggest some key observables. For instance, experts in classical dynamics would likely propose \(\sin \theta\) as a good observable for learning rigid pendulum dynamics, but would presumably use neural networks or time-delay observables to learn appropriate observables for a soft pendulum whose physical laws are hard to derive [2, 4]. This tactic enables a mixture of human-prescribed and machine-learned observables.
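A hedged sketch of “parenting” with NumPy. As simplifying assumptions, the rigid pendulum is replaced by its explicit-Euler discretization with a hypothetical step \(h=0.1\), and \(\mathbf{F}_\gamma\) is taken to be linear in the observables, so minimizing \((3)\) over \(\gamma\) with \(\mathbf{g}\) held fixed reduces to least squares rather than neural network training. Only the \((\theta,\omega)\) components of \(\mathbf{F}\) are fitted; the remaining component, \(\sin(\theta^+)\), follows from \(\theta^+\), so the full \(\mathbf{F}\) is nonlinear. With \(\mathbf{g}=(\theta,\omega)\), the fit leaves a clear residual; adding the expert observable \(\sin\theta\) makes it exact.

```python
import numpy as np

h = 0.1                           # hypothetical time step
rng = np.random.default_rng(0)

def step(theta, omega):
    """Explicit-Euler pendulum map, a stand-in for the rigid pendulum."""
    return theta + h * omega, omega - h * np.sin(theta)

# Sample states and compute their successors (noise-free data).
theta = rng.uniform(-3.0, 3.0, size=200)
omega = rng.uniform(-2.0, 2.0, size=200)
theta_next, omega_next = step(theta, omega)
targets = np.column_stack([theta_next, omega_next])

def fit(features):
    """Least-squares fit of a linear F_gamma: features -> (theta+, omega+).

    Returns the coefficient matrix gamma and the residual norm.
    """
    gamma, *_ = np.linalg.lstsq(features, targets, rcond=None)
    return gamma, np.linalg.norm(features @ gamma - targets)

# Without the expert observable: g = (theta, omega).
_, res_plain = fit(np.column_stack([theta, omega]))
# With the expert-suggested observable: g = (theta, omega, sin(theta)).
gamma, res_expert = fit(np.column_stack([theta, omega, np.sin(theta)]))

print(res_plain > 1e-2, res_expert < 1e-10)   # True True
print(np.round(gamma.T, 6))                   # rows approx. [1, h, 0] and [0, 1, -h]
```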

Extensions and Relationships to Other Machine Learning Methods

Koopman-based ML of dynamical models lends itself particularly well to extension to control systems [8]. Another applicable extension is to ML of general nonlinear maps between different spaces; random dynamical systems have also been treated in the framework of the stochastic Koopman operator [9, 10].
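One common way to carry a representation over to control, sketched here as an assumption rather than as the specific methods collected in [8], is a lifted linear model \(\mathbf{g}(k+1)=A\mathbf{g}(k)+Bu(k)\) fitted by least squares. The system and observables below are hypothetical toy choices; because the input enters additively in the \(x_2\) equation, the observables \((x_1,x_2,x_1^2)\) close exactly and the fit recovers \(A\) and \(B\).

```python
import numpy as np

lam, mu, c = 0.9, 0.5, 1.0        # hypothetical system parameters
rng = np.random.default_rng(1)

def T(x, u):
    """Hypothetical controlled map: the input u enters the x2 equation additively."""
    return np.array([lam * x[0], mu * x[1] + c * x[0] ** 2 + u])

def lift(x):
    """Observables g = (x1, x2, x1^2)."""
    return np.array([x[0], x[1], x[0] ** 2])

# One-step data from random states and random inputs.
G, G_next, inputs = [], [], []
for _ in range(50):
    x = rng.uniform(-2.0, 2.0, size=2)
    u = rng.uniform(-1.0, 1.0)
    G.append(lift(x))
    G_next.append(lift(T(x, u)))
    inputs.append([u])
G, G_next, inputs = np.array(G), np.array(G_next), np.array(inputs)

# Solve g(k+1) = A g(k) + B u(k) for [A B] in the least-squares sense.
Z = np.hstack([G, inputs])                          # each row is (g(k), u(k))
AB = np.linalg.lstsq(Z, G_next, rcond=None)[0].T    # shape (3, 4)
A, B = AB[:, :3], AB[:, 3:]

print(np.round(A, 6))   # approx. [[lam, 0, 0], [0, mu, c], [0, 0, lam**2]]
print(np.round(B, 6))   # approx. [[0], [1], [0]]
```

Once such a model is available, standard linear control design can be applied in the lifted coordinates.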

Researchers have drawn multiple connections between “pure” Koopman operator-based methodologies and other ML techniques. The version of the framework that uses a predefined set of observables is conceptually equivalent to the kernel methods that are popular in ML. One can view the class of autoregressive integrated moving average (ARIMA) models as a subset of Koopman-based methods. Deep learning can help identify effective observables, which connects the framework to the transformer architectures that are common in large language models.
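The autoregressive part of the ARIMA connection can be made concrete: a noise-free AR(2) recursion \(x(k+1)=a_1x(k)+a_2x(k-1)\) is exactly a linear map \(U\mathbf{g}=A\mathbf{g}\) on the delay observables \(\mathbf{g}(k)=(x(k),x(k-1))\), with \(A\) the companion matrix. The coefficients below are hypothetical, and the sketch omits the moving-average and differencing (integration) parts of ARIMA.

```python
a1, a2 = 1.2, -0.5     # hypothetical AR(2) coefficients (noise-free for clarity)

def ar_step(xs):
    """AR(2) recursion x(k+1) = a1 x(k) + a2 x(k-1)."""
    return a1 * xs[-1] + a2 * xs[-2]

# Companion matrix: a linear Koopman representation on g(k) = (x(k), x(k-1)).
A = [[a1, a2],
     [1.0, 0.0]]

def matvec(M, v):
    """Plain-Python matrix-vector product."""
    return [sum(M[i][j] * v[j] for j in range(len(v))) for i in range(len(M))]

xs = [1.0, 0.3]                     # x(0), x(1)
for _ in range(10):
    xs.append(ar_step(xs))

# Propagate g with the linear map and compare with the AR(2) series.
g = [xs[1], xs[0]]
max_err = 0.0
for k in range(1, len(xs) - 1):
    g = matvec(A, g)                # g now holds (x(k+1), x(k))
    max_err = max(max_err, abs(g[0] - xs[k + 1]), abs(g[1] - xs[k]))
print(max_err < 1e-12)              # True
```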

Reinforcement learning (RL), a well-known ML methodology, also has connections to KOT modeling. However, the two approaches differ fundamentally in how they treat optimal control; specifically, the exploration strategy in RL can lead to dangerous scenarios. In the KOT approach, the model is built first, so that only safe scenarios are executed. We then specify a cost function to enable optimization of the task while simultaneously preserving safety. KOT methodologies also typically require orders of magnitude fewer executions of learning tasks than RL.

Because of their explicit treatment of the time dimension, Koopman operator models are well suited to causal inference [12]. For example, we can use Koopman control models to answer counterfactual questions such as “What if I had acted differently?” In fact, generative Koopman control models help to overcome obstacles to developing autonomous systems with human-level intelligence: robustness, adaptability, explainability (interpretability), and the capture of cause-and-effect relationships. The methodology has now penetrated most dynamics-heavy fields and inspired recent advances in soft robotics [2, 4] and game modeling [1]; Koopman operators have even furthered the study of neural network training [3, 7]. These successes are due both to the effectiveness of the developed ML algorithms and to the depth of the underlying theory, which enhances interpretability; such theoretical depth is prevalent in applied mathematics but absent from some ML approaches.
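A counterfactual query of this kind can be posed to a lifted linear control model \(\mathbf{g}(k+1)=A\mathbf{g}(k)+Bu(k)\) by rolling it out twice from the same initial observation, once with the recorded inputs and once with an alternative input sequence, and comparing the results. The matrices below are hypothetical values chosen for illustration (observables \((x_1,x_2,x_1^2)\), with the input acting on \(x_2\)); in practice, the answer is only as trustworthy as the learned model.

```python
# Hypothetical lifted control model g(k+1) = A g(k) + B u(k) on g = (x1, x2, x1^2).
lam, mu, c = 0.9, 0.5, 1.0
A = [[lam, 0.0, 0.0],
     [0.0, mu, c],
     [0.0, 0.0, lam ** 2]]
B = [0.0, 1.0, 0.0]

def rollout(g0, inputs):
    """Simulate the model from observation g0 under the given input sequence."""
    g = list(g0)
    trajectory = [g]
    for u in inputs:
        g = [sum(A[i][j] * g[j] for j in range(3)) + B[i] * u for i in range(3)]
        trajectory.append(g)
    return trajectory

g0 = [1.0, 2.0, 1.0]                                   # observables at x = (1, 2)
factual = rollout(g0, [0.0, 0.0, 0.0, 0.0])            # inputs actually applied
counterfactual = rollout(g0, [0.0, -1.0, 0.0, 0.0])    # "what if I had acted differently at k = 1?"

# Effect of the alternative action on x2 at each time step.
effect_x2 = [cf[1] - f[1] for f, cf in zip(factual, counterfactual)]
print([round(e, 6) for e in effect_x2])   # [0.0, 0.0, -1.0, -0.5, -0.25]
```

Here the model also shows that the intervention leaves \(x_1\) and \(x_1^2\) untouched, since \(B\) has no component along them and \(A\) does not feed \(x_2\) back into those coordinates.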

Despite the aforementioned progress, there is still much to do. The current decade promises to be an exciting one for this growing set of data-driven artificial intelligence methodologies that will boost the discovery of models of dynamical processes.

An expanded version of this article is available online [11].

References

[1] Avila, A.M., Fonoberova, M., Hespanha, J.P., Mezić, I., Clymer, D., Goldstein, J., … Javorsek, D. (2021). Game balancing using Koopman-based learning. In 2021 American Control Conference (ACC) (pp. 710-717). IEEE Control Systems Society.

[2] Bruder, D., Fu, X., Gillespie, R.B., Remy, C.D., & Vasudevan, R. (2020). Data-driven control of soft robots using Koopman operator theory. IEEE Trans. Robot., 37(3), 948-961.

[3] Dogra, A.S., & Redman, W. (2020). Optimizing neural networks via Koopman operator theory. In Advances in neural information processing systems 33 (NeurIPS 2020) (pp. 2087-2097). Curran Associates, Inc.

[4] Haggerty, D.A., Banks, M.J., Kamenar, E., Cao, A.B., Curtis, P.C., Mezić, I., & Hawkes, E.W. (2023). Control of soft robots with inertial dynamics. Sci. Robot., 8(81), eadd6864.

[5] Koopman, B.O. (1931). Hamiltonian systems and transformation in Hilbert space. Proc. Nat. Acad. Sci., 17(5), 315-318.

[6] Kutz, J.N., Brunton, S.L., Brunton, B.W., & Proctor, J.L. (2016). Dynamic mode decomposition: Data-driven modeling of complex systems. Philadelphia, PA: Society for Industrial and Applied Mathematics.

[7] Manojlović, I., Fonoberova, M., Mohr, R., Andrejčuk, A., Drmač, Z., Kevrekidis, Y., & Mezić, I. (2020). Applications of Koopman mode analysis to neural networks. Preprint, arXiv:2006.11765.

[8] Mauroy, A., Mezić, I., & Susuki, Y. (Eds.) (2020). The Koopman operator in systems and control: Concepts, methodologies, and applications. In Lecture notes in control and information sciences (Vol. 484). Cham, Switzerland: Springer Nature.

[9] Mezić, I. (2005). Spectral properties of dynamical systems, model reduction and decompositions. Nonlin. Dyn., 41, 309-325.

[10] Mezić, I. (2021). Koopman operator, geometry, and learning of dynamical systems. Not. Am. Math. Soc., 68(7), 1087-1105.

[11] Mezić, I. (2023). Operator is the model. Preprint, arXiv:2310.18516v2.

[12] Pearl, J. (2019). The seven tools of causal inference, with reflections on machine learning. Commun. ACM, 62(3), 54-60.

[13] Williams, M.O., Kevrekidis, I.G., & Rowley, C.W. (2015). A data-driven approximation of the Koopman operator: Extending dynamic mode decomposition. J. Nonlinear Sci., 25, 1307-1346.

About the Author

Igor Mezić

Professor, University of California, Santa Barbara

Igor Mezić is a professor of mechanical engineering at the University of California, Santa Barbara. His work encompasses the operator theoretic approach to dynamical systems and incorporates elements of geometric dynamical systems theory and machine learning. Mezić applies such mathematical methods in various contexts.
