Theory Guides the Frontiers of Large Generative Models

Modern generative machine learning (ML) and artificial intelligence (AI) models are large in every sense of the word. For example, OpenAI’s Generative Pre-trained Transformer 3 (GPT-3) language model from 2020 contains 175 billion trainable parameters. The 2023 GPT-4 model cost at least $100 million to train [3], with more opaque but similarly important energy costs. Although it is difficult to measure the scope of data that trains such models—as well as any associated ethical and legal issues—their collective significance is evident in multiple high-profile lawsuits that have followed the models’ commercialization [1].

While these models are data hungry, they may no longer be data bottlenecked. In 2022, a Google DeepMind group observed that the “compute budget” (aggregate floating-point operations) yields a linear tradeoff between the selection of “more data” versus “more parameters” in frontier models [2]. The team harnessed this observation to train a smaller model named Chinchilla that was competitive with large language models (LLMs) like Gopher and GPT-3. And earlier this year, the language model DeepSeek-R1 caused waves in the AI community for being open weight and having a parameter count that is several scales smaller than GPT-4 — making it less expensive to query and fine-tune while exhibiting a similar performance.

How do we begin to comprehend this paradigm? A guiding principle of scientific computing is that the incorporation of domain-specific knowledge can accelerate a model. Landmark algorithms of the 20th century, such as the fast Fourier transform and fast multipole method, follow this principle by exploiting additional properties of the class of problems to accelerate naive computation in applications such as density functional theory or n-body problems. These accelerations are transformative in science and engineering and have facilitated the solvability of many intractable real-life scenarios. A persistent quest for applied mathematicians in ML is to understand the feasibility of similar breakthroughs.

Theory Versus Practice for Optimization in Machine Learning

Courtney Paquette of McGill University and Google Research explored this topic during her CAIMS/SCMAI-PIMS Early Career Award Lecture at the Third Joint SIAM-CAIMS Annual Meetings (AN25), which took place this summer in Montréal, Québec, Canada. Paquette’s talk focused on her efforts to predict the behavior of large models that are trained via stochastic gradient descent (SGD). While her work may be classified as optimization theory, there are important distinctions. Paquette argues that mathematicians in this area of research need to understand the way in which traditional optimization theory contrasts with practical ML. In practice, noise is everywhere; training data have sources of noise or measurement error, initialization for iterative schemes that optimize parameter sets \(\theta\) usually start with randomly initialized weights \(\theta_0\), and the iterations often involve random sampling of the training data (as with SGD).

The stochasticity in SGD refers to this random sampling to evaluate the gradient of the objective function that updates \(\theta_k\):

\[\theta_{k+1} = \theta_k - \gamma \frac{\sum_{i\in\mathcal{B}_k} \nabla f_i(\theta_k)}{\beta}. \tag1\]

Here, \(\gamma>0\) is the chosen stepsize (i.e., learning rate) and \(\mathcal{B}_k\) is the subset of the training data that is selected at each step. Choosing the sample size \(\beta = |\mathcal{B}_k|=1\) is called streaming SGD; if one opts to use all data at each step, then \(\beta=n\) reduces to traditional gradient descent of a loss that is summed over the losses of each datum: \(f(\theta) = \frac{1}{m} \sum_{i=1}^m f_i(\theta)\).

To develop theory, Paquette and her collaborators focus on the following loss function that corresponds to linear regression: given data \(x_i \in \mathbb{R}^n\) (arranged as rows in matrix \(X \in \mathbb{R}^{m \times n}\)) and associated scalar output \(b_i \in \mathbb{R}\), find parameter vector \(\theta \in \mathbb{R}^n\) for which the loss \(\tfrac{1}{2m} || X\theta - b ||^2 = \tfrac{1}{2m} \sum_{i=1}^m (x_i^T \theta - b_i)^2\) is minimized. While readers may recognize that the exact solution is \(\theta^* = (X^T X)^{-1} X^T b\) with mild assumptions on \(X\), the purpose of Paquette’s work is not to find a closed-form expression, but to understand the behavior of iterates \(\theta_k\) in a way that can be generalized to predict SGD behavior in more complicated settings.

Often, researchers conduct this analysis by taking a limit of \(\gamma \to 0\), which reduces \((1)\) to a gradient flow. One of the novelties of Paquette’s work [4] follows the infinite data paradigm, which keeps \(\gamma\) finite and instead studies a stochastic process over a long training sequence. This process occurs by taking a joint limit \(n \to \infty\) and \(d \to \infty\), with \(d/n \to r\): a ratio of model parameters \(d\) to training data \(n\). Paquette and her collaborators achieve this by embedding the discrete process of \(k \in \{0,1,...\}\) into a continuous-time stochastic process with \(t \in \mathbb{R}^+\); they can then analyze the stochastic process through its low-order moments via the so-called “universality” properties of random matrix theory. The chief result is that in this setting and with some additional assumptions, the SGD iterates converge to a scalar function \(P(t)\):

\[\mathcal{P}(t) = \mathscr{P}(\Theta_{\gamma t}^{\mathrm{gf}}) + \int_0^t \gamma^2 h_2(t,s) \mathcal{P}(s) ds, \quad h_2(t,s) = \int_0^\infty x^2 e^{-2\gamma t x} d \mu(x). \tag2\]

Paquette describes \(\mathscr{P}(\Theta_{\gamma t}^{\mathrm{gf}})\) as the gradient flow process. The measure \(d \mu(x)\) is the spectrum of \(H\), the Hessian of the objective and the data-generating process — which could be an exotic object in general. But when dealing with finite data, this spectrum is a sum of point masses at eigenvalues: \(d\mu(x) = \tfrac{1}{d} \sum_{i=1}^d \delta(x - \lambda_i)\). Additionally, the integral reduces to a sum over the eigenvalues of \(H\). In the case of a linear least squares problem, this Hessian is \(H=X^T X\), or the squared singular values of the data matrix \(X\).

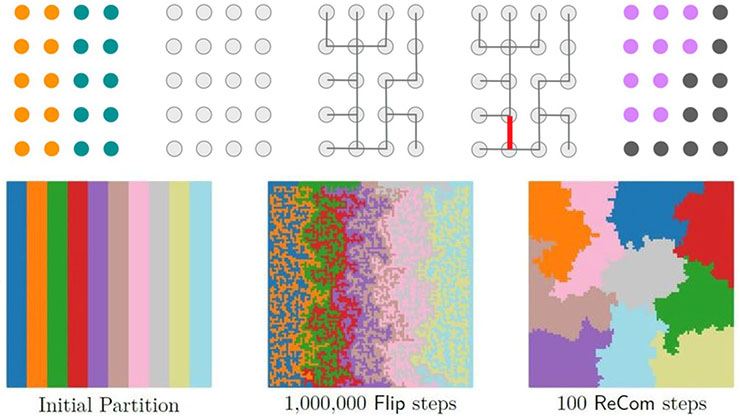

![<strong>Figure 1.</strong> Courtney Paquette and her colleagues developed theory that predicts the resulting behavior of training an image classifier via stochastic gradient descent (SGD). The observed “risk”—a normed residual on unseen data—is tracked closely by an a priori calculation that pertains to the spectrum of Hessian matrix \(H=X^T X\). <strong>1a.</strong> Theoretical predictions match the loss on varying training data sizes \(n\) on the CIFAR-5m dataset. <strong>1b.</strong> Similar agreement occurs for different learning rates \(\gamma\) when one conducts training on the Modified National Institute of Standards and Technology database (MNIST) dataset. Figure courtesy of Courtney Paquette; Figure 1b adapted from [5].](/media/mkujx2up/figure1.jpg)

To summarize, Paquette’s recipe for an a priori prediction of the loss curve is to first estimate the eigenvalues of \(X^T X\), then solve the scalar integral equation \((2)\) in any fashion. Curves such as those in Figure 1 result. While computing the spectrum of \(X^T X\) can be challenging, approaches for (i) updating the spectrum with streaming data or (ii) using the spectrum’s asymptotic behavior when \(X\) is the result of a known data generating process may improve the outlook. For example, the Marchenko-Pastur distribution is the limiting measure for data that is sampled independently from a process with fixed, finite variance, which yields useful analytical results.

Paquette confirmed that this theory matches well in practical settings, as evidenced in Figure 1 for varying dataset sizes and learning rates \(\gamma\). She sees good agreement in theoretical loss curves with realized results over a wide range of finite data sizes.

Compute Efficiency and Compute Optimality

Predicting the loss curves of an SGD-trained model may seem like a cute parlor trick, but this approach to theory can provide useful guidance before the start of the training process. By embedding the training process into a stochastic process, Paquette’s work assists with an intelligent choice of stepsize:

\[\gamma_* = \left( \frac{r}{2} \int_0^\infty \frac{x^2}{x-\lambda_{\mathrm{min}}} d\mu(x)\right)^{-1},\]

which depends on the minimal Hessian eigenvalue \(\lambda^{-}\). Figure 2 illustrates this outcome for the so-called isotropic features model by sampling various values of \(r\) and learning rate \(\gamma\). For a range of values \(r\), the convergence rate for prescribed \(\gamma^*\) (gray solid line) is reasonably close to a choice that provides an optimal convergence rate; stepsizes that are far from optimal may result in no convergence at all. The overparameterized regime where \(r > 1\) exhibits increasingly narrow bands of stepsizes that predict any type of convergence; here, \(\gamma^*\) is particularly useful.

![<strong>Figure 2.</strong> Average-case convergence rates as a function of stochastic gradient descent with stepsize \(\gamma\) in two regimes of \(r=d/n\). <strong>2a.</strong> Given underparameterized models when \(0< r <1\), a broad range of stepsizes result in fast convergence during training. <strong>2b.</strong> In the overparameterized regime, the intervals of stepsize for which convergence is achieved are progressively narrow with increasing \(r\). Figure adapted from [4] and courtesy of Courtney Paquette.](/media/wy3et4vr/figure2.jpg)

What about the notion of training optimization on a fixed compute budget? During her AN25 lecture, Paquette explained some key points of her work to analyze a minimal mathematical training model that incorporates data complexity \(\alpha\), target complexity \(\beta\), and parameter count \(d\) to analyze a one-pass SGD training process [6]. She and her colleagues represent compute cost as a simple product \(\mathrm{flops}=kd\)—where \(k\) is the number of iterations of the optimization algorithm and \(d\) is the number of model parameters—and perform an experiment that runs SGD for \(k\) iterations with varying \(d\). Plotting loss as a function of flops rather than iterations generates a family of parameterized curves (see Figure 3). With fixed \(d\), the use of compute (flops) past a certain turning point yields diminishing returns. This result occurs at various compute levels, as smaller models utilize fewer flops per iteration. If a practitioner has a sense of \(\alpha\) and \(\beta\) for their training data, they can decide on the model size \(d\) that is appropriate for their compute budget. And if one can adaptively expand their model size \(d\), they may schedule a growing \(d\)—i.e., a dynamically expanding model—such that a minimal number of flops can achieve a given loss by targeting and remaining near the training frontier. Figure 3b illustrates the complexity and research opportunities of even a simple model when capturing different types of behavior.

![<strong>Figure 3.</strong> Given a mathematical model of a training process with controllable parameters \(\alpha\) and \(\beta\) and model size \(d\), a linear power law frontier exists between the loss and flops (compute) that enables the analysis of several “phases” of behavior. <strong>3a.</strong> Varying loss curves under model capacity determine the compute-optimal curve. <strong>3b.</strong> Phase diagram of different asymptotic behaviors. Figure adapted from [6] and courtesy of Courtney Paquette.](/media/ytaezac4/figure3.jpg)

Many scientists feel that we are at a critical juncture of math, science, and society when it comes to the development of LLMs and other generative tools. The continued improvement of large models is reaching a point of diminishing returns, where the thoughtless approach of “more data, more parameters, more compute” will soon become prohibitively expensive. Regardless of the prospective impact of AI, mathematical theory will retain its important role in this space to help us understand the data and energy efficiency of such models. While theory largely pertains to least squares problems—a far cry from the highly nonlinear models in practice—Paquette affirms that this area of research is surprisingly applicable. Despite the remaining mysteries that surround the success of large generative models, this kind of theory provides a solid ground as the field continues to advance.

References

[1] Davis, T., & Rajamanickam, S. (2022, December 1). Ethical concerns of code generation through artificial intelligence. SIAM News, 55(10), p. 6.

[2] Hoffmann, J., Borgeaud, S., Mensch, A., Buchatskaya, E., Cai, T., Rutherford, E., ... Sifre, L. (2022). Training compute-optimal large language models. In NIPS’22: Proceedings of the 36th international conference on neural information processing systems (pp. 30016-30030). New Orleans, LA: Curran Associates Inc.

[3] Knight, W. (2023, April 17). OpenAI’s CEO says the age of giant AI models is already over. Wired. Retrieved from https://www.wired.com/story/openai-ceo-sam-altman-the-age-of-giant-ai-models-is-already-over.

[4] Paquette, C., Lee, K., Pedregosa, F., & Paquette, E. (2021). SGD in the large: Average-case analysis, asymptotics, and stepsize criticality. In Proceedings of the 34th conference on learning theory (pp. 3548-3626). Boulder, CO: Proceedings of Machine Learning Research.

[5] Paquette, C., Paquette, E., Adlam, B., & Pennington, J. (2024). Homogenization of SGD in high-dimensions: Exact dynamics and generalization properties. Math. Program., 1-90.

[6] Paquette, E., Paquette, C., Xiao, L., & Pennington, J. (2024). \(4+3\) phases of compute-optimal neural scaling laws. In Advances in neural information processing systems 37 (NeurIPS 2024) (pp. 16459-16537). Vancouver, Canada: Curran Associates Inc.

About the Author

Manuchehr Aminian

Associate professor, California State Polytechnic University

Manuchehr Aminian is an associate professor in the Department of Mathematics and Statistics at California State Polytechnic University, Pomona. His research interests include mathematical modeling, partial differential equations, and mathematical methods in data science.

Related Reading

Stay Up-to-Date with Email Alerts

Sign up for our monthly newsletter and emails about other topics of your choosing.