Topological Deep Learning: An Emerging Paradigm in Data Science

In 2024, artificial intelligence (AI) finally had its Nobel Prize moment, as both the Physics and Chemistry categories honored researchers who developed and utilized AI technologies. AI has profoundly changed the landscape of various industries over the past decade by helping organizations make data-driven decisions and gain a competitive edge in fields such as business, healthcare, finance, marketing, and social media analysis. This year’s Nobel acknowledgement brought long-sought mainstream recognition to these advances.

The preeminent driving force behind AI is deep learning (DL), which itself is a subset of machine learning (ML). DL techniques train artificial neural networks that have multiple layers (hence the term “deep”) to learn complex patterns and representations from data. Its transformative success in various fields has spurred its popularity in research domains like computer science, statistics, and mathematics, all of which address AI and ML in some capacity.

ML and DL rely primarily on concepts from calculus, linear algebra, probability theory, and optimization, but their mathematical foundations also draw on advanced ideas from geometry, topology, algebra, and combinatorics. For example, ML and DL algorithms frequently utilize geometric measure theory and the Wasserstein metric. In recent years, the intersection of DL and topology has given rise to a promising field called topological deep learning (TDL). By integrating principles from both disciplines, TDL offers a novel approach for researchers who seek to analyze, learn, and understand intrinsically complex datasets, particularly those with high-dimensional, high-order, and nonlinear structures that cannot be effectively described by more conventional physical and statistical methods.

![Figure 1. The framework of topological deep learning (TDL). Figure courtesy of JunJie Wee and partially adapted from [1].](/media/kqep4o3a/figure1.jpg)

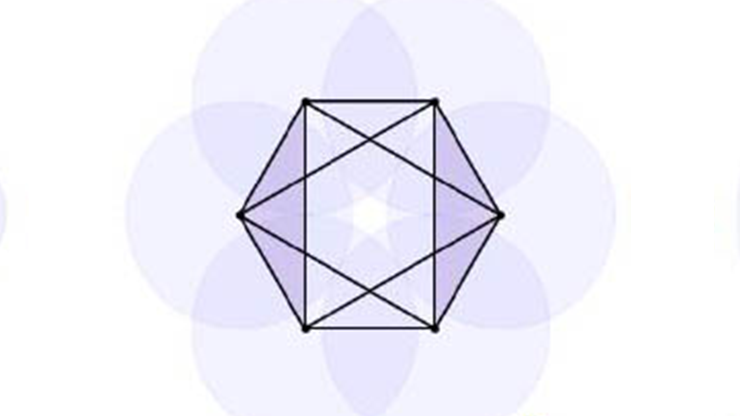

TDL is rapidly becoming a major paradigm in data science. At its core, the field combines the expressive power of deep neural networks (DNNs) with the mathematical rigor of topology. Unlike traditional ML methods that often struggle to capture the underlying geometric and structural properties of data, TDL leverages topological features and representations to overcome this limitation. When the term was first coined in 2017 [1], it primarily denoted the integration of topological features within the input pipeline of a DNN (see Figure 1). However, TDL is rapidly evolving and now covers a broader scope, encompassing a collection of ideas and methodologies that pertain to the use of topological concepts in DL. One can employ TDL methods in either an observational or an interventional mode [8]. In an observational capacity, these methods enhance the comprehension of existing DL models and their topological formulations. In an interventional role, they empower DL architectures to handle data that are supported by topological concepts.
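
To make the 2017 sense of the term concrete, the following is a minimal sketch of the input step, under the assumption that the topological features arrive as a barcode: a list of (birth, death) scale intervals over which individual features exist (persistent homology, discussed next, produces such intervals). The function name `betti_curve`, the sample barcode, and the grid are illustrative and are not taken from [1]; a Betti curve is only one of several possible vectorizations.

```python
from typing import List, Tuple

Interval = Tuple[float, float]  # (birth, death) of one topological feature


def betti_curve(barcode: List[Interval], grid: List[float]) -> List[int]:
    """Count, at each scale t in the grid, the intervals alive at t.

    An interval is alive at t when birth <= t < death. The result has one
    entry per grid point, so its length does not depend on the barcode size.
    """
    return [
        sum(1 for birth, death in barcode if birth <= t < death)
        for t in grid
    ]


# Hypothetical barcode: three features, one of which never dies.
barcode = [(0.0, 1.0), (0.0, 2.5), (0.5, float("inf"))]
grid = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0]

features = betti_curve(barcode, grid)
print(features)  # [2, 3, 2, 2, 2, 1, 1]
```

Because the output length is fixed by the grid rather than by the number of intervals, such a vector can be concatenated with other descriptors and passed to the input layer of a standard network.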

Central to TDL is the notion of persistent homology [2, 7]: an algebraic topology technique [9] that uses filtration to bridge abstract topology and intricate geometry, resulting in a multiscale analysis of the shape of data. More specifically, persistent homology identifies and quantifies topological features in terms of their topological invariants, which may be regarded as loops, voids, and higher-dimensional structures within datasets. By incorporating persistent homology into DL frameworks, TDL can extract meaningful topological signatures from data and exploit them for various ML tasks, such as classification, regression, clustering, and anomaly detection. It can also implement and/or incorporate topological structures and topological spaces (domains) in DNNs, activation functions, loss functions, and sophisticated DL architectures like transformers, autoencoders, and reinforcement learning models, ultimately leading to topological transformers [3] and simplicial neural networks [8]. In fact, the 2024 Nobel Prize in Chemistry cited AlphaFold2, an AI model from Google DeepMind that uses transformers to predict the structures of hundreds of millions of proteins.
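
The idea of a filtration can be illustrated in the simplest case, dimension 0, where the only features are connected components. The sketch below assumes a Vietoris-Rips filtration on a point cloud: every point starts as its own component at scale 0, and as the scale grows, an edge appears between two points once it reaches their distance. The function name `h0_barcode` and the sample points are hypothetical. Loops, voids, and higher-dimensional features require a boundary-matrix reduction that is not shown here and is usually left to dedicated software.

```python
import math
from itertools import combinations
from typing import List, Sequence, Tuple


def h0_barcode(points: Sequence[Sequence[float]]) -> List[Tuple[float, float]]:
    """0-dimensional persistence intervals of a Vietoris-Rips filtration.

    Edges are processed by increasing length. An edge that joins two
    different components ends the life of one of them at that length.
    Exactly one component survives to infinity.
    """
    n = len(points)
    if n == 0:
        return []
    parent = list(range(n))  # union-find forest over the points

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    edges = sorted(
        (math.dist(points[i], points[j]), i, j)
        for i, j in combinations(range(n), 2)
    )
    bars = []
    for length, i, j in edges:
        root_i, root_j = find(i), find(j)
        if root_i != root_j:
            parent[root_i] = root_j       # merge the two components
            bars.append((0.0, length))    # one component dies here
    bars.append((0.0, math.inf))          # the last component never dies
    return bars


# Two well-separated pairs of points on a line (illustrative data).
cloud = [(0.0, 0.0), (1.0, 0.0), (10.0, 0.0), (11.0, 0.0)]
bars = h0_barcode(cloud)
print(bars)
```

For this cloud, two intervals end at scale 1 (each pair merges), one ends at scale 9 (the two pairs merge), and one is infinite. The long interval ending at 9 is the multiscale signature of the two clusters, while the short ones reflect local spacing.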

TDL models offer greater interpretability than black box DL models. TDL algorithms extract topological signatures that provide intuitive insights about the underlying structure of data, thereby facilitating better understanding and interpretation of model predictions [1]. Additionally, TDL is inherently robust to noise and can handle high-dimensional datasets more effectively than traditional methods. By focusing on the intrinsic topological structure of data, the field is able to uncover essential patterns and relationships that may be obscured by noise or irrelevant features. Moreover, TDL models can capture fundamental geometric and topological properties that allow them to generalize well to unseen data [4, 10].

![Figure 2. An arXiv preprint used topological deep learning in May 2022 to forecast emerging SARS-CoV-2 dominant variants; the World Health Organization confirmed its predictions two months later. Figure incorporates the arXiv preprint (later published as [4]) and a World Health Organization weekly update release and is courtesy of the author.](/media/cpmnmrau/figure2.jpg)

TDL finds broad applicability across various domains, including biology, chemistry, materials science, neuroscience, social networks, and computer vision [12, 13]. Its versatility enables it to analyze diverse data types and address a wide range of real-world problems, such as disease diagnosis, data compression, image recognition, drug design, chip design, and graph analysis [12]. Arguably, the most compelling demonstrations of TDL’s advantages over existing methods are its victories in the Drug Design Data Resource Grand Challenge, an annual competition series in computer-aided drug design [10, 11]; its role in the discovery of SARS-CoV-2 evolution mechanisms, like natural selection via infectivity strengthening [5] and antibody resistance [14]; and the successful forecasting of emerging SARS-CoV-2 dominant variants BA.2 [6], BA.4, and BA.5 [4] (see Figure 2).

Despite its promise, TDL is still a relatively young field with several challenges and opportunities for further research. Key areas for future exploration include the effective integration of domain-specific knowledge and constraints to enhance the performance and interpretability of TDL models. Additionally, advancing TDL methods will require substantial progress in topological theory. This direction encompasses the examination of topological formulations beyond homology (such as Laplacian and Dirac operators), as well as the extension of topological domains to include cell complexes, path complexes, directed flag complexes, directed graphs, hypergraphs, hyperdigraphs, cellular sheaves, knots, links, and curves. Such a holistic approach would ensure the continued relevance and effectiveness of mathematics in tackling complex, real-world problems and boosting innovation in data-driven research.
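
As one illustration of a Laplacian formulation, the sketch below builds the combinatorial Hodge Laplacian on edges, L1 = B1ᵀB1 + B2B2ᵀ, for a tiny simplicial complex, where B1 and B2 are the vertex-edge and edge-triangle boundary matrices. The dimension of its kernel equals the first Betti number (the number of independent loops), so the homological information is retained; the nonzero part of the spectrum, not computed here, is what such formulations add. This is a plain, non-persistent Laplacian chosen for brevity, not the persistent Laplacian of [4], and all function names are hypothetical.

```python
from fractions import Fraction
from typing import List, Sequence, Tuple

Simplex = Tuple[int, ...]


def boundary_columns(simplices: Sequence[Simplex], faces: Sequence[Simplex]) -> List[List[int]]:
    """One column per simplex: signed coefficients of its boundary over `faces`."""
    index = {face: k for k, face in enumerate(faces)}
    columns = []
    for simplex in simplices:
        column = [0] * len(faces)
        for pos in range(len(simplex)):
            face = simplex[:pos] + simplex[pos + 1:]  # drop one vertex
            column[index[face]] = (-1) ** pos         # alternating sign
        columns.append(column)
    return columns


def hodge_laplacian_1(vertices, edges, triangles) -> List[List[int]]:
    """L1 = B1^T B1 + B2 B2^T, indexed by edges."""
    d1 = boundary_columns(edges, vertices)   # columns of B1
    d2 = boundary_columns(triangles, edges)  # columns of B2
    n = len(edges)
    return [
        [
            sum(a * b for a, b in zip(d1[i], d1[j]))   # (B1^T B1)[i][j]
            + sum(col[i] * col[j] for col in d2)       # (B2 B2^T)[i][j]
            for j in range(n)
        ]
        for i in range(n)
    ]


def rank(matrix: List[List[int]]) -> int:
    """Exact rank by Gaussian elimination over the rationals."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    r = 0
    for c in range(len(rows[0]) if rows else 0):
        pivot = next((k for k in range(r, len(rows)) if rows[k][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for k in range(r + 1, len(rows)):
            factor = rows[k][c] / rows[r][c]
            rows[k] = [x - factor * y for x, y in zip(rows[k], rows[r])]
        r += 1
    return r


def betti_1(vertices, edges, triangles) -> int:
    """Dimension of the kernel of L1."""
    return len(edges) - rank(hodge_laplacian_1(vertices, edges, triangles))


vertices = [(0,), (1,), (2,)]
edges = [(0, 1), (0, 2), (1, 2)]

print(betti_1(vertices, edges, []))           # hollow triangle: 1 loop
print(betti_1(vertices, edges, [(0, 1, 2)]))  # filled triangle: 0 loops
```

Exact rational arithmetic is used so that the kernel dimension is not affected by floating-point tolerance; for larger complexes a numerical eigenvalue solver would be the more practical choice.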

In conclusion, TDL stands as a promising paradigm for learning, analyzing, and comprehending complex datasets. By amalgamating the rich mathematical theory of topology with the expressive capabilities of DL, TDL presents a distinctive approach to data analysis with advantages in robustness, interpretability, and applicability across diverse domains. Its use as a cutting-edge tool that can work alongside traditional ML methods holds the potential to unveil novel solutions to challenging problems and propel advancements in the realms of AI, mathematics, and data science. Much like how partial differential equations have shaped the landscape of applied mathematics for decades, TDL stands poised to influence generations of future mathematicians.

References

[1] Cang, Z., & Wei, G.-W. (2017). TopologyNet: Topology based deep convolutional and multi-task neural networks for biomolecular property predictions. PLoS Comput. Biol., 13(7), e1005690.

[2] Carlsson, G. (2009). Topology and data. Bull. Amer. Math. Soc., 46(2), 255-308.

[3] Chen, D., Liu, J., & Wei, G.-W. (2024). Multiscale topology-enabled structure-to-sequence transformer for protein–ligand interaction predictions. Nat. Mach. Intell., 6(7), 799-810.

[4] Chen, J., Qiu, Y., Wang, R., & Wei, G.-W. (2022). Persistent Laplacian projected Omicron BA.4 and BA.5 to become new dominating variants. Comput. Biol. Med., 151(5748), 106262.

[5] Chen, J., Wang, R., Wang, M., & Wei, G.-W. (2020). Mutations strengthened SARS-CoV-2 infectivity. J. Mol. Biol., 432(19), 5212-5226.

[6] Chen, J., & Wei, G.-W. (2022). Omicron BA.2 (B.1.1.529.2): High potential for becoming the next dominant variant. J. Phys. Chem. Lett., 13(17), 3840-3849.

[7] Edelsbrunner, H., & Harer, J. (2008). Persistent homology — A survey. In J.E. Goodman, J. Pach, & R. Pollack (Eds.), Surveys on discrete and computational geometry: Twenty years later (pp. 257-282). Contemporary mathematics (Vol. 453). Providence, RI: American Mathematical Society.

[8] Hensel, F., Moor, M., & Rieck, B. (2021). A survey of topological machine learning methods. Front. Artif. Intell., 4, 681108.

[9] Kaczynski, T., Mischaikow, K., & Mrozek, M. (2004). Computational homology. In Applied mathematical sciences (Vol. 157). New York, NY: Springer.

[10] Nguyen, D.D., Cang, Z., Wu, K., Wang, M., Cao, Y., & Wei, G.-W. (2019). Mathematical deep learning for pose and binding affinity prediction and ranking in D3R Grand Challenges. J. Comput. Aided Mol. Des., 33(1), 71-82.

[11] Nguyen, D.D., Gao, K., Wang, M., & Wei, G.-W. (2020). MathDL: Mathematical deep learning for D3R Grand Challenge 4. J. Comput. Aided Mol. Des., 34(2), 131-147.

[12] Papamarkou, T., Birdal, T., Bronstein, M.M., Carlsson, G.E., Curry, J., Gao, Y., … Zamzmi, G. (2024). Position: Topological deep learning is the new frontier for relational learning. In Proceedings of the 41st international conference on machine learning. Vienna, Austria: Proceedings of Machine Learning Research.

[13] Townsend, J., Micucci, C.P., Hymel, J.H., Maroulas, V., & Vogiatzis, K.D. (2020). Representation of molecular structures with persistent homology for machine learning applications in chemistry. Nat. Commun., 11(1), 3230.

[14] Wang, R., Chen, J., & Wei, G.-W. (2021). Mechanisms of SARS-CoV-2 evolution revealing vaccine-resistant mutations in Europe and America. J. Phys. Chem. Lett., 12(49), 11850-11857.

About the Author

Guo-Wei Wei

MSU Research Foundation Distinguished Professor, Michigan State University

Guo-Wei Wei is an MSU Research Foundation Distinguished Professor at Michigan State University. His research explores the mathematical foundations of bioscience and data science.
