Tradeoffs in Differential Privacy and Learning From Smartphone Data

When providing services like next word prediction, autocorrect, autocomplete, and spellcheck, the developers of mobile devices must balance tradeoffs of utility and privacy while addressing traditional concerns with algorithm runtime and communication efficiency. During the 2024 SIAM Conference on Mathematics of Data Science (MDS24), which took place last October in Atlanta, Ga., Jelani Nelson of the University of California, Berkeley, delivered an invited presentation that introduced the theoretical underpinnings of differential privacy. He discussed this technique’s ability to inform and analyze protocols that allow a server to learn statistics (like word frequency) while still retaining tunable control of user privacy.

Nelson’s talk covered a striking breadth of ideas, including statistics, combinatorics, projective geometry, algorithm design, and asymptotic analysis. These disparate approaches all come together nicely to establish state-of-the-art methods with provable bounds.

What Does “Privacy” Mean, Exactly?

Certain conveniences in modern smartphones that we take for granted—such as spellcheck or next word prediction when texting—are actually nuanced mathematical problems due to the complex structure of human languages. As with most machine learning tasks, the successful solution of these problems requires huge volumes of training data — in this case, text messages.

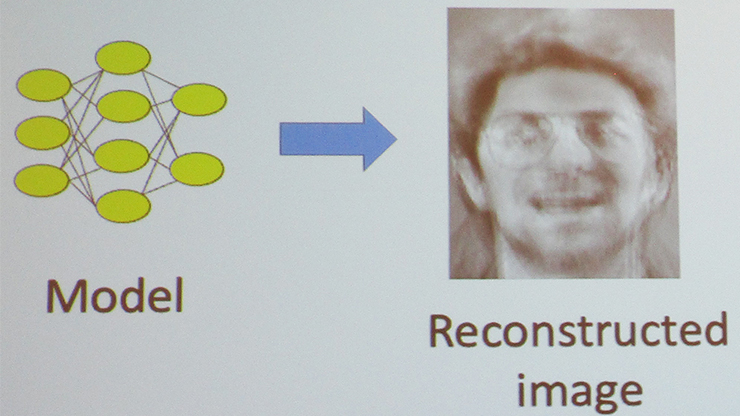

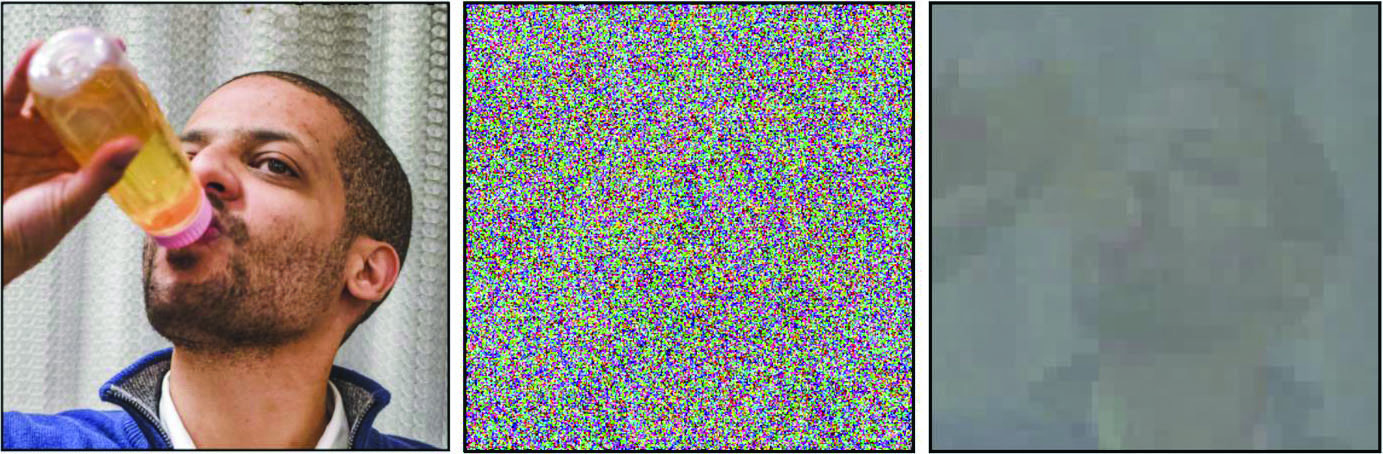

When trying to protect user privacy, adding noise to the data might seem like a logical first step. However, the use of noise may not be as foolproof as it initially seems; in fact, the very definition of “noise” depends on the context of the situation, and too much of it can spoil the data’s usability. Nelson utilized sample images to demonstrate that “adding noise” is surprisingly subtle, which means that approaches to anonymization, privacy, or reconstruction might not necessarily be straightforward. For example, averaging many noisy images of the same scene or performing wavelet denoising on a single noisy image can still recover some aspects of the information (see Figure 1).

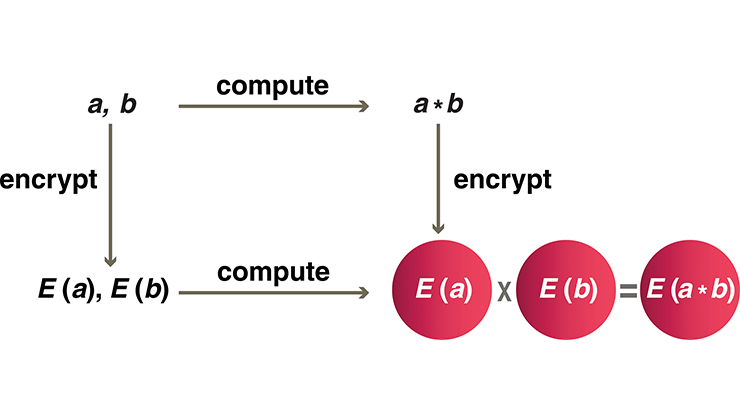

While the study of database privacy spans multiple decades, the introduction and uptake of differential privacy as a framework has only commenced in the past 20 years [2]. One common variant of this framework is local differential privacy:

\[\frac{P(M_i=M: x_i=x)}{P(M_i=M:x_i=x')}\le e^\varepsilon,\]

where \(x_i\) is the data held by the \(i\)th device. For all devices \(i\) and possible messages \(M\), this definition states that the ratio of probabilities of finding a given message when replacing the underlying data should be bounded by a number that is ideally close to \(1\). More broadly, the likelihood of seeing an output to a query should not change significantly based on its inclusion or exclusion (relating to the factor \(\varepsilon\)). An algebraic manipulation can make this definition look more like an “analysis-style” bound between log-likelihood functions. When a procedure satisfies this inequality with \(\varepsilon=0\), all of the probabilities that it expresses are the same; as a result, the message does not depend on the user’s data whatsoever and the protocol is perfectly private. Similarly, small positive values represent minute changes in probability.

This framework is powerful for several reasons. For instance, a given system can be provably \(\varepsilon\)-differentially private for some \(\varepsilon\), protocols may allow for tunable parameters that affect \(\varepsilon\), or practical algorithms can be benchmarked for an estimated \(\varepsilon\). While \(\varepsilon \ll 1\) is desirable, Nelson explained that tech companies often have systems with an \(\varepsilon\) on the order of \(2\) to \(8\) (or more!) to maintain good utility.

Protocols and Tradeoffs

To prelude his discussion of protocols at MDS24, Nelson outlined five interrelated priorities that protocols should ideally address: (i) privacy; (ii) utility, which is some measure of how much the analyst is able to learn about the data; (iii) communication between the device and server; (iv) server compute time; and (v) device compute time. Protocols span a range of sophistication levels, with various tradeoffs. For example, naive protocols obtain lower bounds; a protocol for which devices send the server a uniform random selection of predetermined messages (or no messages at all) would optimize privacy and device compute time but yield no utility.

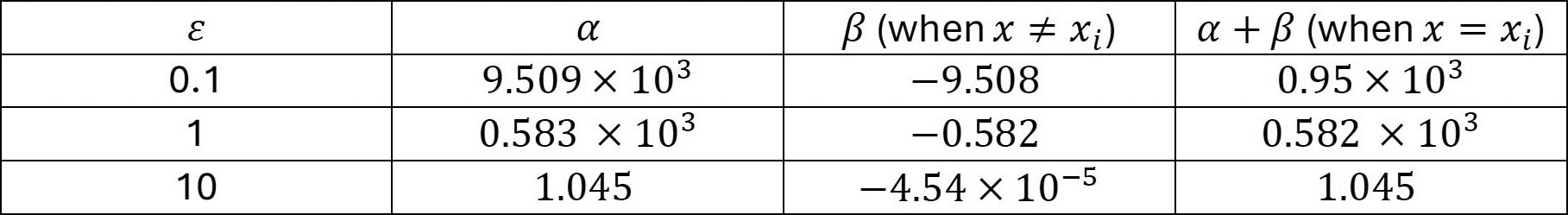

In 1965, Stanley Warner introduced a fundamental protocol called randomized response to address noncooperative survey participants [4]. Consider a set of words \(\{1, ..., k\}\) (knowing that we can map words to integers) and suppose that a device’s message contains \(x=1\). Given this protocol, the device sends the word to the server with probability \(e^{\varepsilon}p\); any other word \(2,…,k\) is sent with probability \(p\), where the exact \(p\) is calculated in terms of \(k\) and \(\varepsilon\). The server then accumulates the distribution by adding \(\alpha+\beta\) for the communicated \(x=x_i\); it accumulates a negative value \(\beta\) for the estimated frequency of all other words. The specific choices of \(\alpha\) and \(\beta\) ensure that the accumulation in expectation for this system is \(1\) for \(x_i\) and \(0\) otherwise, leading to a linear system that results in \(\alpha=\frac{e^{\varepsilon+k-1}}{e^\varepsilon -1}\) and \(\beta=\frac{-1}{e^\varepsilon -1}\). Figure 2 provides a sampling of parameters.

This system’s simplicity and relatively low compute costs certainly make it appealing, though Nelson commented that the loss in utility for a given \(\varepsilon\) is provably “terrible.” Rapid advances in this field are leading to more sophisticated protocols—such as subset-based methods [5] or the Hadamard Response privatization scheme [1]—that can be analyzed under the framework of so-called combinatorial designs. While these newer protocols implement more sophisticated strategies, the fundamental principles of protocol analysis remain the same.

Projective Geometry and Preferred Messages

The work of Nelson and his colleagues utilizes ideas from finite projective geometry to implement a pair protocol called ProjectiveGeometryResponse (PGR), which also has a hybrid version (HPGR) [3]. In the context of differential privacy protocols for smartphones, finite fields enable parameter tuning and careful control over groups of words.

Motivated by a “meta approach” from the creators of the Hadamard Response [1], Nelson and his collaborators expand upon the original set of words \(\{1,...,k\}\) to introduce three new aspects. First, they construct a message space \(\mathcal{Y}\); for each message \(x\), they then construct a subset \(S_x \subset \mathcal{Y}\) with exactly \(s\) entries that serve as “preferred messages” for \(x\). Finally, a constraint over pairs of sets \(S_x\) and \(S_{x’}\) ensures that the entries have exactly \(\ell\) messages in common. If preferred sets allude to phrases with similar meanings, then the phrase \(^{``}\textrm{hi}''\) might have a set of preferred messages \(S_x=\{^{``}\textrm{hello,}'' \; ^{``}\textrm{greetings,}'' \;\)\(^{``}\textrm{salutations}''\}\), such that \(s=3\); however, the message space \(\mathcal{Y}\) may be an abstraction and not actually a set of strings. While this adds complexity to the process, the flexible construction of \(\mathcal{Y}\) and choice of \(s\) and \(\ell\) allow for optimization and new protocols that finely control utility, privacy, communication, and compute time.

Within this framework, Nelson’s group begins with a finite field \(\mathbb{F}_q\) that contains the numbers \(0\) through \(q-1\) (\(q\) prime), which are equipped with modular arithmetic [3]. Taking a tuple with \(t\) elements yields \(\mathbb{F}_q^t\), to which one can introduce an inner product \(\langle u, v \rangle\) that is the usual inner product modulo \(q\). Two points in \(\mathbb{F}_q^t\) belong to the same equivalence class if they lie on the same ray, and each equivalence has many points. One canonical way to select a representative, called a projective point, is with vectors whose first nonzero entry is \(1\). For example, the projective points in \(\mathbb{F}_q^3\) include all tuples of the forms \((1,y_2,y_3)\), \((0,1,y_3)\), or \((0,0,1)\). It is then possible to associate words and messages with projective points in a unique way that avoids the assignment of parallel elements in \(\mathbb{F}_q^t\). Preferred sets \(S_x\) are constructed through the constraint of elements \(u\) that satisfy \(\langle x, u \rangle \equiv 0 \, ( \textrm{mod} \: q)\). \(S_x\) is thus an orthogonal subspace to \(x\), which serves as a tidy computational approach.

![<strong>Figure 3.</strong> A collection of schemes with proven communication costs in terms of user-server communication, utility loss (reconstruction of a word frequency distribution), and server runtime as a function of dictionary size \(k\), number of users \(n\), privacy parameter \(\varepsilon\), and prime \(q\) that underlies the field \(\mathbb{F}_q^t\). Figure adapted from [3].](/media/rlsnfrqp/figure3.jpg)

The punchline is an algorithm that gives an explicit recipe for the choice of \(s\), \(\ell\), and \(S_x\) and parameters \(q\) and \(t\) for the field \(\mathbb{F}_q^t\), which results in provable values for communication, utility loss, and server runtime. The hybrid version of this algorithm also decouples the privacy parameter \(\varepsilon\) from utility loss and server time; the choice of \(q\) instead provides an explicit tradeoff between these two factors (see Figure 3). Although PGR and HPGR both take a small hit in server time, they offer improved prefactors for utility loss. Nelson noted that while computer science often fixates on big-O bounds, prefactors are important for practical computations, and a factor of two can indeed be a valuable improvement.

The breadth of topics in this field of study—and particularly in the application of projective geometry—was perhaps the most surprising aspect of Nelson’s presentation at MDS24. Because the large-scale uptake of machine learning and artificial intelligence requires knowledge of topics such as linear algebra, optimization, statistics, algorithms, and ethics, the applied mathematics community is currently endeavoring to educate the next generation of professionals and academics with a multidisciplinary perspective. While there are no easy solutions, Nelson’s success with tools from the realm of theoretical mathematics stands as a parallel to this challenge and emphasizes the many significant functionalities of applied math.

References

[1] Acharya, J., Sun, Z., & Zhang, H. (2019). Hadamard Response: Estimating distributions privately, efficiently, and with little communication. In Proceedings of the twenty-second international conference on artificial intelligence and statistics (pp. 1120-1129). Okinawa, Japan: Proceedings of Machine Learning Research.

[2] Dwork, C. (2008). Differential privacy: A survey of results. In M. Agrawal, D. Du, Z. Duan, & A. Li (Eds.), Theory and applications of models of computation (TAMC 2008) (pp. 1-19). Lecture notes in computer science (Vol. 4978). New York, NY: Springer.

[3] Feldman, V., Nelson, J., Nguyen, H., & Talwar, K. (2022). Private frequency estimation via projective geometry. In Proceedings of the 39th international conference on machine learning (pp. 6418-6433). Baltimore, MD: Proceedings of Machine Learning Research.

[4] Warner, S.L. (1965). Randomized response: A survey technique for eliminating evasive answer bias. J. Am. Stat. Assoc., 60(309), 63-69.

[5] Ye, M., & Barg, A. (2018). Optimal schemes for discrete distribution estimation under locally differential privacy. IEEE Trans. Inf. Theory, 64(8), 5662-5676.

About the Author

Manuchehr Aminian

Associate professor, California State Polytechnic University

Manuchehr Aminian is an associate professor in the Department of Mathematics and Statistics at California State Polytechnic University, Pomona. His research interests include mathematical modeling, partial differential equations, and mathematical methods in data science.

Stay Up-to-Date with Email Alerts

Sign up for our monthly newsletter and emails about other topics of your choosing.