When Artificial Intelligence Takes Shortcuts, Patient Needs Can Get Lost

A patient checks into the hospital emergency room, where staff rush her to the intensive care unit (ICU). The radiologist feeds her chest X-ray into an artificial intelligence (AI) program,1 which reports that she likely has pneumonia. Another patient with glaucoma visits his ophthalmologist, who takes a routine image of his retinas. The AI that analyzes the image determines that the patient also has type 2 diabetes.

Both of these conclusions turn out to be correct, but for the wrong reasons. ICU patients are far more likely to have pneumonia than other hospital patients, and type 2 diabetes, like glaucoma, is more prevalent in older people. In other words, each computer model took a shortcut, drawing its inference from incidental details such as where in the hospital the image was taken or how old the patient was, rather than from actual diagnostic features. Worse still, when researchers force the AI systems to control for these details, the models fail to identify the actual medical problems from the images.

“AI seems to catch patterns about our data acquisition across multiple domains and use them in prediction,” Judy Wawira Gichoya, a radiologist and professor at Emory University, said. “Some of those things are visible, and some are invisible. When AI calibrates to the scanner model or social demographic characteristics like race, and those are not things that radiologists can see [in the images], it’s very difficult to figure out whether this shortcut is helpful or harmful.”

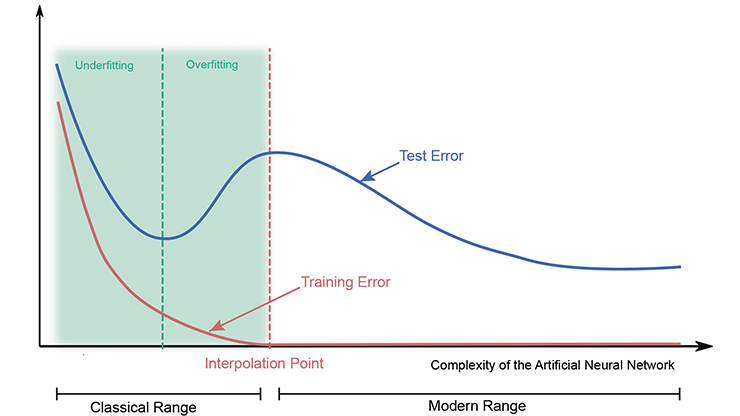

During a session about AI biases at the 2025 American Association for the Advancement of Science (AAAS) Annual Meeting, which took place in Boston, Mass., this February, Gichoya explained that AI shortcuts—much like normal human shortcuts—are meant to save time. “We rely on shortcuts to deal with information overload, [like] when we have to study for a test or when our brains have to process a lot of things,” she said. “AI algorithms are trained to mirror the function of the brain, especially neural networks.”

Maryellen Giger, a medical physicist at the University of Chicago and moderator of the AAAS session, noted that while “bias” has certain connotations in the context of human experience, it is also a statistical concept. AI models identify patterns within training data and use these patterns, based on probability, to make inferences about cases that were not part of the training set. When the models latch onto patterns that are irrelevant or tangential to medical diagnosis, the resulting bias is difficult to overcome and can lead to troublesome shortcuts.

Human limitations contribute to these shortcuts as well. “In medicine, we need to acknowledge that the problem with shortcuts is that there are some things we don’t know,” Gichoya said. Sometimes human practitioners are unsure of a diagnosis, either because test results are ambiguous or because a patient has a rare condition. “Eighty percent of the work in developing a good AI algorithm is data,” Giger added, citing the venerable computing adage “garbage in, garbage out.” “If you don’t have the reference standard, it’s not representative of the intended population.”

AI of the Beholder

Ocular diseases are a growing concern around the world, in part because improved global access to medical care is resulting in more diagnoses. While this shift means that treatment is also possible in previously inaccessible locations, the total burden on healthcare systems is increasing.

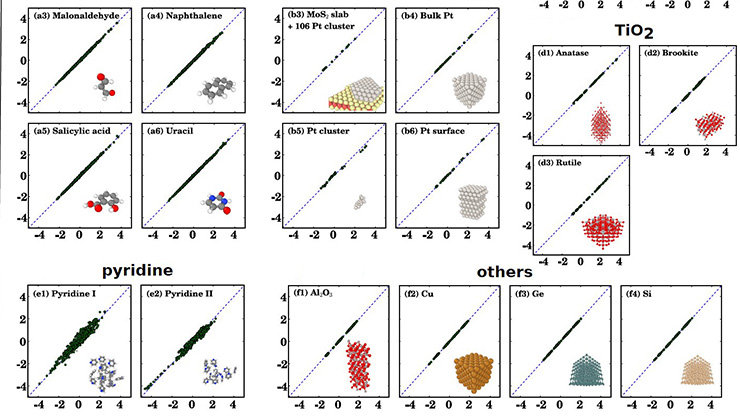

In 2020, medical researchers developed a groundbreaking diagnostic approach called oculomics, which uses images of the retina to identify not only ophthalmological disorders such as macular degeneration but also a number of other medical conditions, including cardiovascular disease and dementia [3]. These retinal images, known as fundus photographs, are noninvasive, relatively inexpensive, and now a routine part of eye exams in places that have access to the necessary equipment (see Figure 1). In recent years, medical AI researchers have proposed training computers to perform this analysis, since a large number of fundus images are available for training models: much more data than in many other medical subfields. In fact, published studies have claimed that AI models can determine whether patients have conditions like cardiovascular disease or diabetes, and even identify risk behaviors such as tobacco smoking, simply from fundus images [4].

But when those same models control for a patient’s age, their accuracy drops to no better than random chance. It turns out that the algorithms were using age as a shortcut, because all of the conditions and risks that they identified from retinal images increase strongly with age. And while oculomics is an extremely promising approach, another complicating factor is that humans themselves are not very good at diagnosing disease from fundus photos. AI learns best from data that humans have already interpreted, so it tends to succeed at tasks we do well and struggle with tasks we do poorly. Without proper human guidance, it is likely to create its own shortcuts.
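A toy simulation can make the age shortcut concrete. In the sketch below, every number is a hypothetical assumption rather than a value from the studies cited above: disease risk rises with age along a logistic curve, and the only “image feature” the model sees encodes age plus noise, with no disease-specific signal at all. Performance is measured by the area under the ROC curve (AUC), where 0.5 corresponds to coin-flip guessing. Scored across all ages, the feature looks like a strong predictor; restricted to patients of nearly the same age (one simple way to control for age), its AUC should fall to roughly 0.5.

```python
import math
import random


def simulate_patients(n, seed=0):
    """Generate hypothetical (age, image_feature, has_disease) records.

    Assumptions (illustrative only): ages are uniform on 40-80, disease risk
    follows a logistic curve centered at age 60, and the image feature the
    model sees encodes age plus noise, with no disease-specific signal.
    """
    rng = random.Random(seed)
    patients = []
    for _ in range(n):
        age = rng.uniform(40, 80)
        p_disease = 1 / (1 + math.exp(-(age - 60) / 5))
        has_disease = 1 if rng.random() < p_disease else 0
        image_feature = age + rng.gauss(0, 3)  # "looks older" -> higher score
        patients.append((age, image_feature, has_disease))
    return patients


def auc(scores, labels):
    """Area under the ROC curve via the Mann-Whitney U statistic.

    0.5 means the scores rank diseased and healthy patients no better than
    a coin flip; 1.0 means a perfect ranking. Tied scores get average ranks.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # 1-based average rank
        i = j + 1
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    rank_sum_pos = sum(r for r, y in zip(ranks, labels) if y == 1)
    u = rank_sum_pos - n_pos * (n_pos + 1) / 2
    return u / (n_pos * n_neg)


def evaluate_shortcut(n=20000, seed=0, band=(59, 61)):
    """Return (AUC on everyone, AUC within a narrow age band)."""
    patients = simulate_patients(n, seed)
    scores = [feature for _, feature, _ in patients]
    labels = [label for _, _, label in patients]
    overall = auc(scores, labels)

    # Controlling for age: compare only patients of nearly the same age.
    matched = [p for p in patients if band[0] <= p[0] < band[1]]
    matched_auc = auc([p[1] for p in matched], [p[2] for p in matched])
    return overall, matched_auc


if __name__ == "__main__":
    overall, matched = evaluate_shortcut()
    print(f"AUC, all ages:        {overall:.2f}")
    print(f"AUC, ages 59-61 only: {matched:.2f}")
```

The model here never “sees” disease, yet it would look impressive on an unstratified test set. Age matching is only one way to control for a confounder; regression adjustment and reweighting are common alternatives.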

“We hope that we can train the model to correct for age and still get the right answer in terms of downstream diseases,” said Jayashree Kalpathy-Cramer, a professor of ophthalmology at the University of Colorado who also spoke in the AAAS session. “But you have to be careful about making sure that you’re correcting for all of these potential confounders when looking at diseases that are hard for humans to assess. A human cannot look at someone’s eye and say how old they are, whether they’re a smoker, whether they’re male or female, or what their risk of disease is.”

Show Your Work

Just as medical practitioners learn useful shortcuts from experience, computers can be “taught” with reinforcement that rewards proper conclusions and penalizes the use of the wrong decision-making criteria. Much like showing one’s work on a math test, proper AI training requires both the “right” answer and the correct steps to reach it. However, checking those steps requires knowing which criteria an algorithm actually uses, which is not always straightforward, since most AI systems are black boxes.

Gichoya cited a famous example of a problem-solving shortcut from the early 1900s: a horse named Clever Hans whose trainer claimed that he could do math, when in reality he was just very good at discerning cues from the audience. Of course, the stakes of medical AI shortcuts are much higher than Clever Hans’ interpretation of human reactions. “What is the model actually learning?” Kalpathy-Cramer asked. “[AI] models are not very transparent, so it’s hard to know exactly what information they’re using to come up with their answers.”

To make matters worse, algorithms are built for efficiency, which sounds good in principle but can be problematic in practice: a model will tend to latch onto whatever signal predicts the answer most easily. “When you remove one shortcut, the model just moves to another [because] most datasets appear to have more than one shortcut,” Gichoya said.

Shortcuts and the Giraffe Problem

Radiology researcher Karen Drukker, who works with Giger at the University of Chicago to develop medical AI tools, noted in her own AAAS talk that present-day medical images are not designed for taking measurements. Rather, they offer qualitative data that human practitioners learn to interpret through years of medical school and other intensive training. For instance, because a human can learn what a healthy hand looks like from a single image, medical education focuses on teaching students to identify diseased hands. Image archives are similarly lopsided: scans are taken of patients with suspected problems, so computer training data is overwhelmingly dominated by pictures of hands with various conditions, which makes it more difficult for AI models to learn the markers of a healthy hand. “If it involves radiation, you can’t just scan healthy hands,” Drukker said. “For rare diseases, [lack of data] is even more of a problem.”

Drukker pointed out that more data exists than is being used, because hospitals and other institutions do not regularly share data with each other; as a result, researchers are left with training materials that carry a heavy statistical bias. Additionally, researchers who build medical AI models generally adapt them from systems that analyze images on the web: stock photos, wildlife pictures, and so forth. (Among other, more serious issues, reliance on such web imagery has led to a preponderance of giraffes in AI-generated “art” [1].)

“Data augmentation techniques [are] where you pretend you have more data than you actually have to try to overcome these limitations,” Drukker said. “There are a lot of applications that seem promising, but they have to live up to the high standards in healthcare. Groups are looking into generating synthetic data, but of course those can also introduce their own bias. If you have biased synthetic data, then your algorithm is going to learn things from that biased data.”
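Drukker did not say which augmentation techniques she had in mind. One of the simplest and most common forms manufactures extra training images by rotating and mirroring existing ones. The sketch below assumes a tiny hypothetical image stored as a list of pixel rows; the names `rotate90`, `flip_horizontal`, and `augment` are illustrative and do not come from any particular library.

```python
from typing import List

# A hypothetical grayscale image stored as a list of rows of pixel values.
Image = List[List[int]]


def rotate90(img: Image) -> Image:
    """Rotate an image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def flip_horizontal(img: Image) -> Image:
    """Mirror an image left to right."""
    return [row[::-1] for row in img]


def augment(img: Image) -> List[Image]:
    """Return every distinct rotation and mirror image of img, original included.

    A square image has at most 8 such variants; symmetric images yield fewer.
    """
    variants: List[Image] = []
    current = img
    for _ in range(4):
        for candidate in (current, flip_horizontal(current)):
            if candidate not in variants:
                variants.append(candidate)
        current = rotate90(current)
    return variants


if __name__ == "__main__":
    scan = [[1, 2],
            [3, 4]]
    for variant in augment(scan):
        print(variant)
    print(len(augment(scan)), "training images from 1 original")
```

Every variant contains exactly the same pixel values, only rearranged, which is the sense in which augmentation “pretends” to have more data. The choice of transforms also matters: mirroring a chest X-ray, for example, would move the heart to the wrong side of the chest, so a transform that is harmless for stock photos could teach a medical model anatomy it will rarely encounter in real patients. That is one way augmented data can carry its own bias.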

This sort of statistical bias is worrisome enough, but—as Gichoya noted—researchers in the U.S. are currently facing repercussions under the Trump administration for studying human biases around gender, race, national origin, sexuality, and so on. The cancelation of grants from the National Institutes of Health and other vital sources has imposed further setbacks [2].

“Black women continue to die even when there are better treatments for the diseases that they die from,” Gichoya said, citing her own work in health equity as a healthcare practitioner. “We understand that some [drivers] are systemic racism and unconscious bias, but if we use models that are able to pick up patterns instead of blaming them, we can improve outcomes for everyone. We’re never going to avoid the messiness of real-world data, but as long as we have a human in the loop, the radiologist or doctor could say, ‘I need to be more careful when I’m relying on model predictions.’”

1 This is technically machine learning rather than true “artificial intelligence,” but standard practice equates these two terms, so I defer to the more common language.

References

[1] Francis, M.R. (2020, January 27). The threat of AI comes from inside the house. SIAM News, 53(1), p. 8.

[2] Lazar, K., & Kowalczyk, L. (2025, March 7). NIH abruptly terminates millions in research grants, defying court orders. STAT News. Retrieved from https://www.statnews.com/2025/03/07/nih-terminates-dei-transgender-related-research-grants.

[3] Wagner, S.K., Fu, D.J., Faes, L., Liu, X., Huemer, J., Khalid, H., … Keane, P.A. (2020). Insights into systemic disease through retinal imaging-based oculomics. Transl. Vis. Sci. Technol., 9(2), 6.

[4] Zhou, Y., Chia, M.A., Wagner, S.K., Ayhan, M.S., Williamson, D.J., Struyven, R.R., … Keane, P.A. (2023). A foundation model for generalizable disease detection from retinal images. Nature, 622, 156-163.

About the Author

Matthew R. Francis

Science writer

Matthew R. Francis is a physicist, science writer, public speaker, educator, and frequent wearer of jaunty hats. His website is https://bowlerhatscience.org.
